Reading Robot Minds with Virtual Reality

Figuring out what other people are thinking is tough, but figuring out what a robot is thinking can be downright impossible. With no brains to peer into, researchers have to work hard to dissect a bot's point of view.

But inside a dark room at the Massachusetts Institute of Technology (MIT), researchers are testing their version of a system that lets them see and analyze what autonomous robots, including flying drones, are "thinking." The scientists call the project the "measurable virtual reality" (MVR) system.

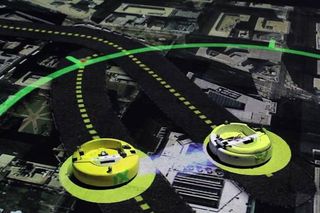

The virtual reality portion of the system is a simulated environment that is projected onto the floor by a series of ceiling-mounted projectors. The system is measurable because the robots moving around in this virtual setting are equipped with motion capture sensors, monitored by cameras, that let the researchers measure the movements of the robots as they navigate their virtual environment.

The system is a "spin on conventional virtual reality that's designed to visualize a robot's 'perceptions and understanding of the world,'" Ali-akbar Agha-mohammadi, a post-doctoral associate at MIT's Aerospace Controls Laboratory, said in a statement.

With the MVR system, the researchers can see the path a robot is going to take to avoid an obstacle in its way, for example. In one experiment, a person stood in the robot's path and the bot had to figure out the best way to get around him.

A large pink dot appeared to follow the pacing man as he moved across the room, a visual representation of the robot's perception of this person in the environment, according to the researchers. As the robot determined its next move, a series of lines, each representing a possible route determined by the robot's algorithms, radiated across the room in different patterns and colors, which shifted as the robot and the man repositioned themselves. A single green line represented the optimal route that the robot would eventually take.

"Normally, a robot may make some decision, but you can't quite tell what's going on in its mind, why it's choosing a particular path," Agha-mohammadi said. "But if you can see the robot's plan projected on the ground, you can connect what it perceives with what it does, to make sense of its actions."

And understanding a robot's decision-making process is useful. For one thing, it lets Agha-mohammadi and his colleagues improve the overall function of autonomous robots, he said.

"As designers, when we can compare the robot’s perceptions with how it acts, we can find bugs in our code much faster. For example, if we fly a quadrotor [helicopter], and see something go wrong in its mind, we can terminate the code before it hits the wall, or breaks," Agha-mohammadi said.

This ability to improve an autonomous bot by taking cues from the machine itself could have a big impact on the safety and efficiency of new technologies like self-driving cars and package-delivery drones, the researchers said.

"There are a lot of problems that pop up because of uncertainty in the real world, or hardware issues, and that's where our system can significantly reduce the amount of effort spent by researchers to pinpoint the causes," said Shayegan Omidshafiei, a graduate student at MIT who helped develop the MVR system.

"Traditionally, physical and simulation systems were disjointed," Omidshafiei said. "You would have to go to the lowest level of your code, break it down and try to figure out where the issues were coming from. Now we have the capability to show low-level information in a physical manner, so you don't have to go deep into your code, or restructure your vision of how your algorithm works. You could see applications where you might cut down a whole month of work into a few days."

For now, the MVR system is only being used indoors, where it can test autonomous robots in simulated rugged terrain before the machines actually encounter the real world. The system could eventually let robot designers test their bots in any environment they want during the project's prototyping phase, Omidshafiei said.

"[The system] will enable faster prototyping and testing in closer-to-reality environments," said Alberto Speranzon, a staff research scientist at United Technologies Research Center, headquartered in East Hartford, Connecticut, who was not involved in the research. "It will also enable the testing of decision-making algorithms in very harsh environments that are not readily available to scientists. For example, with this technology, we could simulate clouds above an environment monitored by a high-flying vehicle and have the video-processing system dealing with semi-transparent obstructions."

Follow Elizabeth Palermo @techEpalermo. Follow Live Science @livescience, Facebook & Google+. Original article on Live Science.

Elizabeth is a former Live Science associate editor and current director of audience development at the Chamber of Commerce. She graduated with a bachelor of arts degree from George Washington University. Elizabeth has traveled throughout the Americas, studying political systems and indigenous cultures and teaching English to students of all ages.
