Killer Robots Need Regulation, Expert Warns

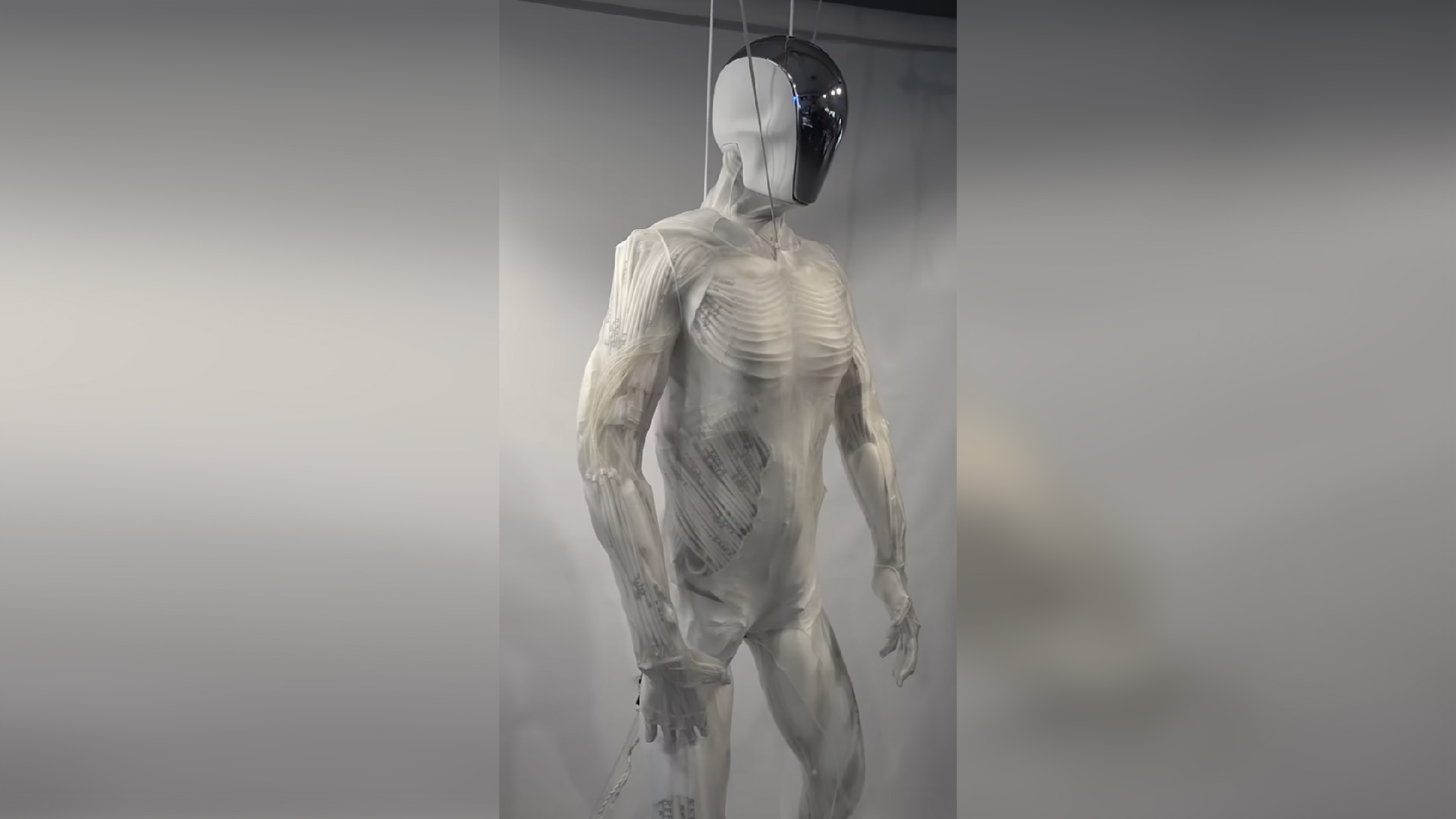

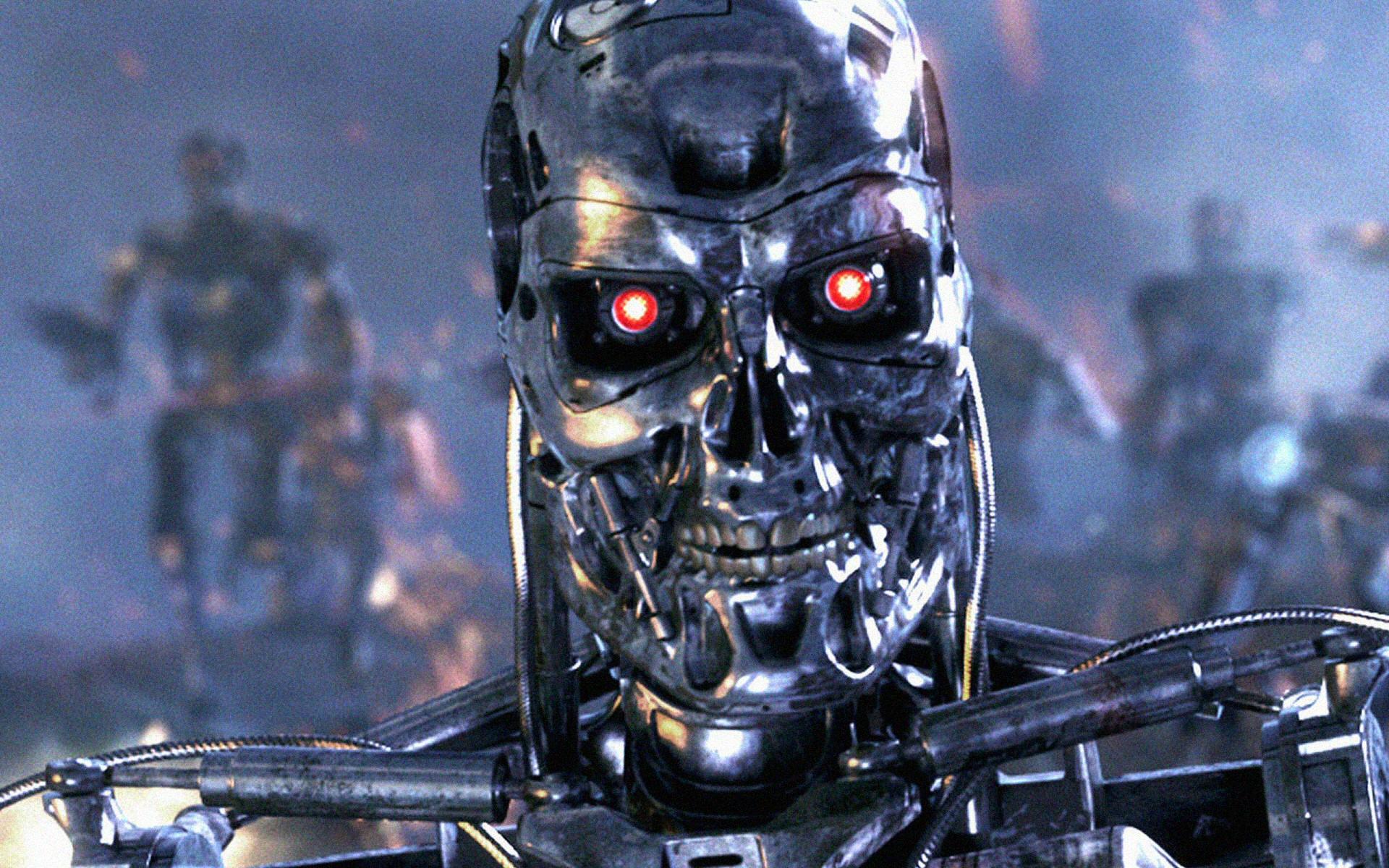

It's a familiar theme in Hollywood blockbusters: Scientist develops robot, robot becomes sentient, robot tries to destroy humanity. But with seemingly sci-fi technological advances inching closer to reality, artificial intelligence and robotics experts face an important question: Should they support or oppose the development of deadly, autonomous robots?

"Technologies have reached a point at which the deployment of such systems is — practically, if not legally — feasible within years, not decades," Stuart Russell, a computer scientist and artificial intelligence (AI) researcher of the University of California, Berkeley, wrote in a commentary published today (May 27) in the journal Nature. These weapons "have been described as the third revolution in warfare, after gunpowder and nuclear arms," Russell wrote.

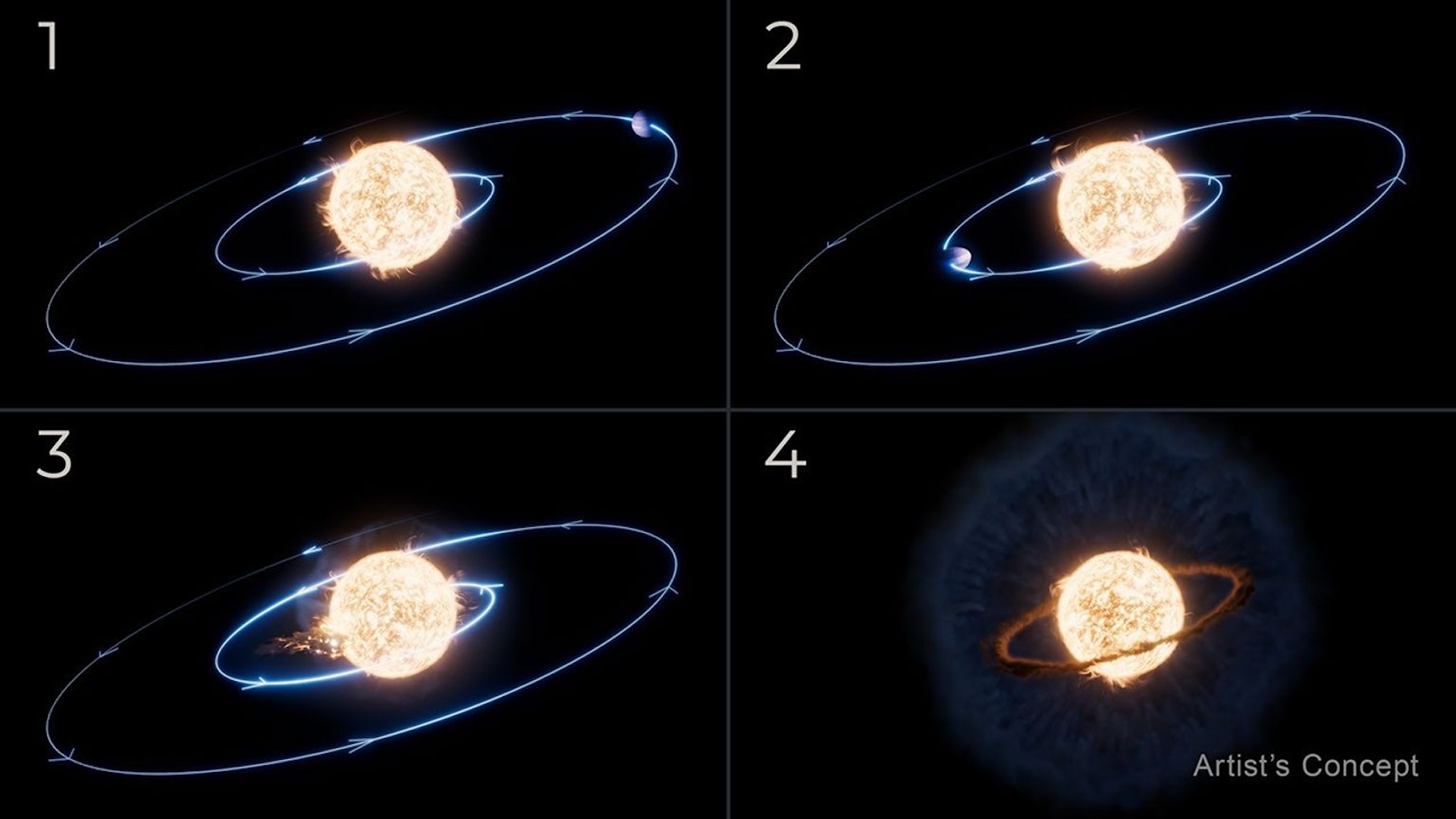

Lethal autonomous weapons systems could find and attack their targets without human intervention. For example, such systems could include armed drones that are sent to kill enemies in a city, or swarms of autonomous boats sent to attack ships.

Deadly robots

Some people argue that robots may not be able to distinguish between enemy soldiers and civilians, and so may accidentally kill or injure innocent people. Yet other commentators say that robots may cause less collateral damage than human soldiers, and that they aren't subject to human emotions like aggression. "This is a fairly new moral ground we're getting into," Russell said.

There are already artificial intelligence systems and robots in existence that are capable of doing one of the following: sensing their environments, moving and navigating, planning ahead, or making decisions. "They just need to be combined," Russell said.

Already, the Defense Advanced Research Projects Agency (DARPA), the branch of the U.S. Department of Defense charged with advancing military technologies, has two programs that could cause concern, Russell said. The agency's Fast Lightweight Autonomy (FLA) project aims to develop tiny, unmanned aerial vehicles designed to travel quickly in urban areas. And the Collaborative Operations in Denied Environment (CODE) project involves the development of drones that could work together to find and destroy targets, "just as wolves hunt in coordinated packs," Jean-Charles Ledé, DARPA's program manager, said in a statement.

Current international humanitarian laws do not address the development of lethal robotic weapons, Russell pointed out. The 1949 Geneva Convention, one of several treaties that specify humane treatment of enemies during wartime, requires that any military action satisfy three criteria: military necessity, discrimination between soldiers and civilians, and weighing of the value of a military objective against the potential for collateral damage.

Treaty or arms race?

The United Nations has held meetings about the development of lethal autonomous weapons, and this process could result in a new international treaty, Russell said. "I do think treaties can be effective," he told Live Science.

For example, a treaty successfully banned blinding laser weapons in 1995. "It was a combination of humanitarian disgust and the hardheaded practical desire to avoid having tens of thousands of blind veterans to look after," he said.

The United States, the United Kingdom and Israel are the three countries leading the development of robotic weapons, and each nation believes its internal processes for reviewing weapons make a treaty unnecessary, Russell wrote.

But without a treaty, there's the potential for a robotic arms race to develop, Russell warned. Such a race would only stop "when you run up against the limits of physics," such as the range, speed and payload of autonomous systems.

Developing tiny robots that are capable of killing people isn't easy, but it's doable. "With 1 gram [0.03 ounces] of high-explosive charge, you can blow a hole in someone's head with an insect-size robot," Russell said. "Is this the world we want to create?" If so, "I don't want to live in that world," he said.

Other experts agree that humanity needs to be careful in developing autonomous weapons. "In the United States, it's very difficult for most AI scientists to take a stand" on this subject, because U.S. funding of "pretty much all AI research is military," said Yoshua Bengio, a computer scientist at the University of Montreal in Canada, who co-authored a separate article in the same journal on so-called deep learning, a technology used in AI.

But Bengio also emphasized the many benefits of AI, in everything from precision medicine to the ability to understand human language. "This is very exciting, because there are lots of potential applications," he told Live Science.

Follow Tanya Lewis on Twitter. Original article on Live Science.