New Artificial Intelligence Can Tell Stories Based on Photos

Artificial intelligence may one day live up to the expression "A picture is worth a thousand words," as scientists are now teaching programs to describe images as humans would.

Someday, computers may even be able to explain what is happening in videos just as people can, the researchers said in a new study.

Computers have grown increasingly good at recognizing faces and other items within images. Recently, these advances have led to image-captioning tools that generate literal descriptions of images.

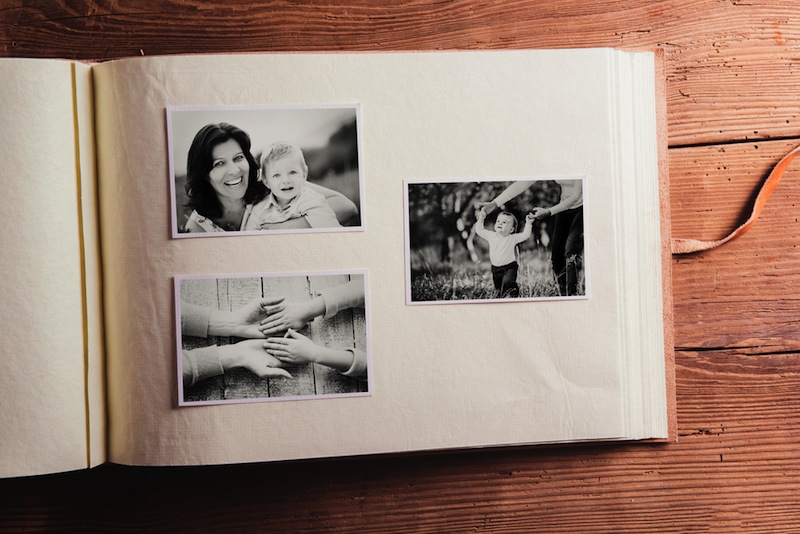

Now, scientists at Microsoft Research and their colleagues are developing a system that can automatically describe a series of images in much the same way a person would: by telling a story. The aim is to explain not just what items are in the pictures, but also what appears to be happening and how it might make a person feel, the researchers said. For instance, if a person is shown a picture of a man in a tuxedo and a woman in a long, white dress, instead of saying, "This is a bride and groom," he or she might say, "My friends got married. They look really happy; it was a beautiful wedding."

The researchers are trying to give artificial intelligence those same storytelling capabilities.

"The goal is to help give AIs more human-like intelligence, to help it understand things on a more abstract level — what it means to be fun or creepy or weird or interesting," said study senior author Margaret Mitchell, a computer scientist at Microsoft Research. "People have passed down stories for eons, using them to convey our morals and strategies and wisdom. With our focus on storytelling, we hope to help AIs understand human concepts in a way that is very safe and beneficial for mankind, rather than teaching it how to beat mankind."

Telling a story

To build a visual storytelling system, the researchers used deep neural networks, computer systems that learn by example — for instance, learning how to identify cats in photos by analyzing thousands of examples of cat images. The system the researchers devised was similar to those used for automated language translation, but instead of teaching the system to translate from one language to another, the scientists trained it to translate images into sentences.
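To make the translation analogy concrete, here is a minimal, untrained sketch of a recurrent encoder-decoder in plain Python, assuming each photo has already been reduced to a short numeric feature vector. The class name `ToyStoryteller`, the tiny vocabulary and all sizes are hypothetical; the researchers' real system was a trained deep network, and with random weights this toy emits meaningless word strings. It only illustrates the data flow: the encoder folds the photo sequence into one hidden state, and the decoder then picks one word at a time, feeding each chosen word back in.

```python
import math
import random

# Hypothetical toy vocabulary; "<eos>" marks the end of the story.
VOCAB = ["<eos>", "the", "family", "dog", "beach", "cake", "had", "fun"]
DIM = 4  # toy size for both image features and hidden state


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def rand_matrix(rows, cols, rng):
    """Random weights in [-1, 1]; a real model would learn these from data."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


class ToyStoryteller:
    """Untrained RNN encoder-decoder: photo features in, words out."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.enc_in = rand_matrix(DIM, DIM, rng)        # photo -> hidden
        self.enc_rec = rand_matrix(DIM, DIM, rng)       # hidden -> hidden
        self.dec_rec = rand_matrix(DIM, DIM, rng)       # decoder recurrence
        self.embed = rand_matrix(len(VOCAB), DIM, rng)  # one vector per word
        self.out = rand_matrix(len(VOCAB), DIM, rng)    # hidden -> word scores

    def encode(self, image_features):
        """Read the photos in order, updating a single hidden state."""
        h = [0.0] * DIM
        for x in image_features:
            from_image = matvec(self.enc_in, x)
            from_state = matvec(self.enc_rec, h)
            h = [math.tanh(a + b) for a, b in zip(from_image, from_state)]
        return h

    def decode(self, h, max_len=10):
        """Greedily emit the top-scoring word until "<eos>" or max_len."""
        words = []
        for _ in range(max_len):
            scores = matvec(self.out, h)
            idx = max(range(len(VOCAB)), key=scores.__getitem__)
            if VOCAB[idx] == "<eos>":
                break
            words.append(VOCAB[idx])
            # Feed the chosen word back in to update the decoder state.
            h = [math.tanh(a + b)
                 for a, b in zip(matvec(self.dec_rec, h), self.embed[idx])]
        return words

    def tell(self, image_features, max_len=10):
        """Encode a photo sequence, then decode it into a list of words."""
        return self.decode(self.encode(image_features), max_len)
```

Calling `ToyStoryteller(seed=0).tell(photos)` on five feature vectors returns a list of at most ten vocabulary words. Training would replace the random matrices with weights fitted to human-written stories, which is where the actual storytelling ability would come from.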


The researchers used Amazon's Mechanical Turk, a crowdsourcing marketplace, to hire workers to write sentences describing scenes consisting of five or more photos. In total, the workers described more than 65,000 photos for the computer system. Because these descriptions could vary, the scientists preferred to have the system learn from accounts of scenes that were similar to other accounts of the same scenes.
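The article does not say how the researchers measured agreement between workers' accounts. One simple way to favor descriptions that resemble the others is a consensus pick: score each description by its average word overlap (Jaccard similarity) with the rest and keep the highest scorer. The sketch below assumes that heuristic; the function names are hypothetical, and this is not presented as the study's actual procedure.

```python
def tokens(text):
    """Lowercased set of words, with basic punctuation stripped."""
    return {w.strip(".,;!?\"'").lower() for w in text.split()} - {""}


def jaccard(a, b):
    """Shared words divided by total distinct words (1.0 if both empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def consensus_pick(descriptions):
    """Return the description most similar, on average, to all the others."""
    if len(descriptions) < 2:
        return descriptions[0] if descriptions else None
    sets = [tokens(d) for d in descriptions]

    def avg_similarity(i):
        others = [jaccard(sets[i], sets[j]) for j in range(len(sets)) if j != i]
        return sum(others) / len(others)

    best = max(range(len(descriptions)), key=avg_similarity)
    return descriptions[best]


descriptions = [
    "The family went to the beach.",
    "The family had fun at the beach.",
    "The family went swimming at the beach.",
    "My cat sleeps all day.",  # off-topic account, shares no words
]
print(consensus_pick(descriptions))  # The family went swimming at the beach.
```

Word overlap is a crude stand-in for meaning: two accounts can describe the same scene in entirely different words, so a production system would likely need a richer similarity measure.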

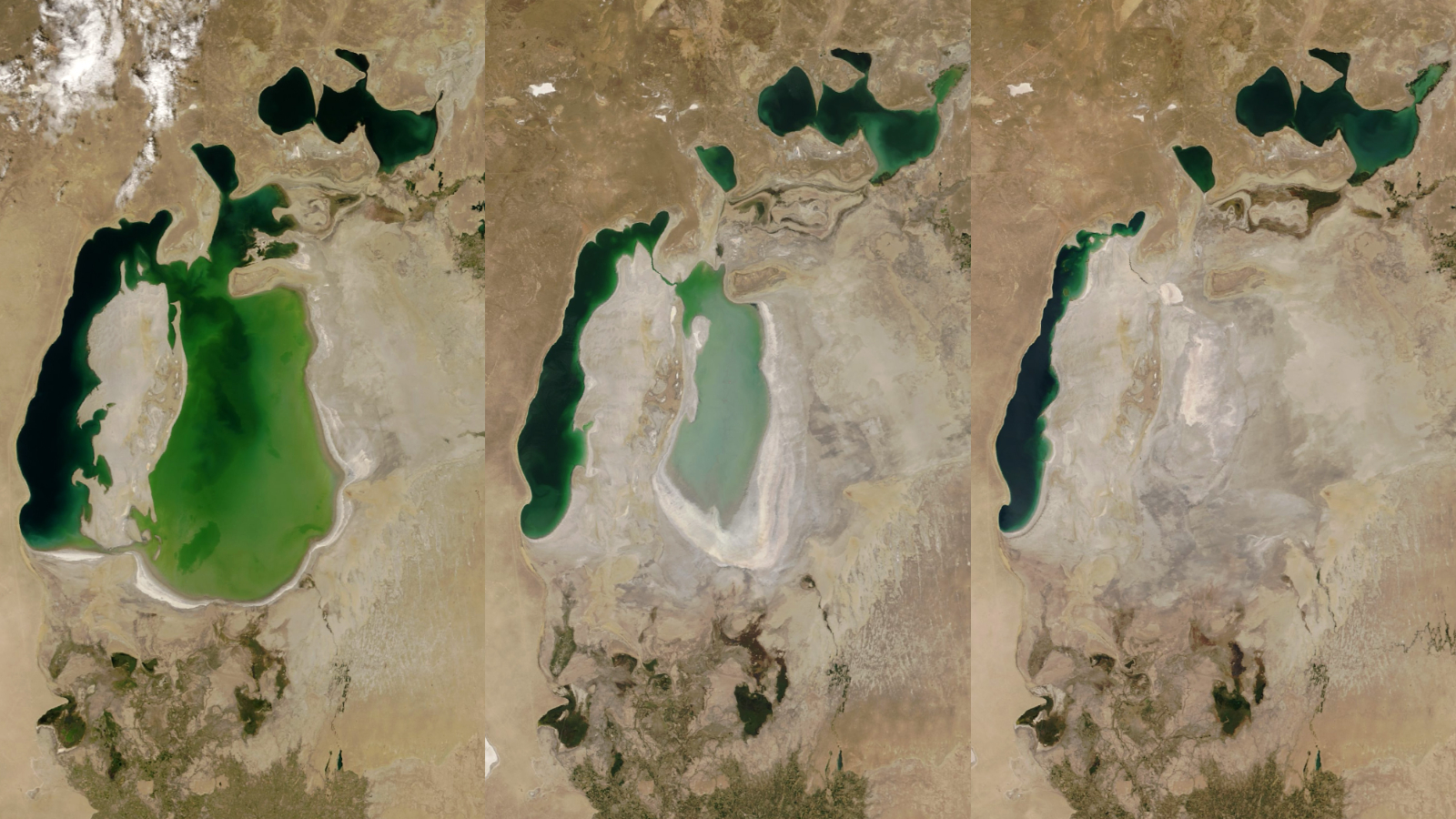

Then, the scientists fed their system more than 8,100 new images to examine what stories it generated. For instance, while an image captioning program might take five images and say, "This is a picture of a family; this is a picture of a cake; this is a picture of a dog; this is a picture of a beach," the storytelling program might take those same images and say, "The family got together for a cookout; they had a lot of delicious food; the dog was happy to be there; they had a great time on the beach; they even had a swim in the water."

One challenge the researchers faced was measuring how well the system generated stories. Human judgment is the best and most reliable way to evaluate story quality, but the computer generated thousands of stories, which would take people a great deal of time and effort to examine.

Instead, the scientists tried automated methods for evaluating story quality, which can assess the computer's performance quickly. In their tests, they focused on the automated method whose assessments most closely matched human judgment. They found that this method rated the computer storyteller as performing about as well as human storytellers.
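Choosing "the automated method whose assessments most closely matched human judgment" can be framed as a correlation test: score the same stories with each candidate metric and with human raters, then keep the metric whose scores track the human ratings best. The sketch below uses Pearson correlation; the metric names and numbers in the usage lines are made-up placeholders, not data from the study.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / (sd_x * sd_y)


def best_metric(metric_scores, human_scores):
    """Return (name, r) for the metric that correlates best with humans."""
    return max(
        ((name, pearson(scores, human_scores))
         for name, scores in metric_scores.items()),
        key=lambda pair: pair[1],
    )


# Placeholder scores for five stories (illustrative only).
human = [1, 2, 3, 4, 5]
candidates = {
    "metric_a": [2, 4, 6, 8, 10],  # rises with human ratings
    "metric_b": [5, 3, 4, 1, 2],   # mostly moves the opposite way
}
name, r = best_metric(candidates, human)
```

As Mitchell notes below, a high correlation on average does not guarantee the metric captures everything humans notice, which is why a metric can rate weak stories as human-level.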

Everything is awesome

Still, the computerized storyteller needs a lot more tinkering. "The automated evaluation is saying that it's doing as good or better than humans, but if you actually look at what's generated, it's much worse than humans," Mitchell told Live Science. "There's a lot the automated evaluation metrics aren't capturing, and there needs to be a lot more work on them. This work is a solid start, but it's just the beginning."

For instance, the system "will occasionally 'hallucinate' visual objects that are not there," Mitchell said. "It's learning all sorts of words but may not have a clear way of distinguishing between them. So it may think a word means something that it doesn't, and so [it will] say that something is in an image when it is not."
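One rough way to catch this kind of hallucination is to compare the object words a story mentions against the labels an object detector actually found in the photos. The sketch below assumes such a detector exists and supplies its labels as strings; the word list and function name are hypothetical, and the exact-match check ignores plurals and synonyms, so "dogs" would not match "dog".

```python
# Hypothetical list of words the checker treats as visual objects.
OBJECT_WORDS = {"dog", "cat", "cake", "beach", "car", "boat", "tree"}


def hallucinated_objects(story, detected_labels, object_words=OBJECT_WORDS):
    """List object words in the story that no detector label supports."""
    mentioned = {w.strip(".,;!?\"'").lower() for w in story.split()}
    detected = {label.lower() for label in detected_labels}
    return sorted((mentioned & object_words) - detected)


print(hallucinated_objects("The dog ran past a boat on the beach.",
                           {"dog", "beach"}))  # ['boat']
```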

In addition, the computerized storyteller needs considerable work on determining how specific or general its stories should be. For example, during the initial tests, "it just said everything was awesome all the time — 'all the people had a great time; everybody had an awesome time; it was a great day,'" Mitchell said. "Now maybe that's true, but we also want the system to focus on what's salient."

In the future, computerized storytelling could help people automatically generate tales for slideshows of images they upload to social media, Mitchell said. "You'd help people share their experiences while reducing nitty-gritty work that some people find quite tedious," she said. Computerized storytelling "can also help people who are visually impaired, to open up images for people who can't see them."

If AI ever learns to tell stories based on sequences of images, "that's a stepping stone toward doing the same for video," Mitchell said. "That could help provide interesting applications. For instance, for security cameras, you might just want a summary of anything noteworthy, or you could automatically live tweet events," she said.

The scientists will detail their findings this month in San Diego at the annual meeting of the North American Chapter of the Association for Computational Linguistics.

Original article on Live Science.