Data Overload: Scientists Struggle to Streamline Results

For those studying neuroscience, the challenges that come with merging results across areas of investigation can be striking. Research techniques that churn out data automatically have left scientists facing ever-growing datasets that boggle even the smartest of minds. Now, researchers are calling for more funding, research efforts and tools to help integrate results across research areas and make them publicly available to scientists and policymakers alike.

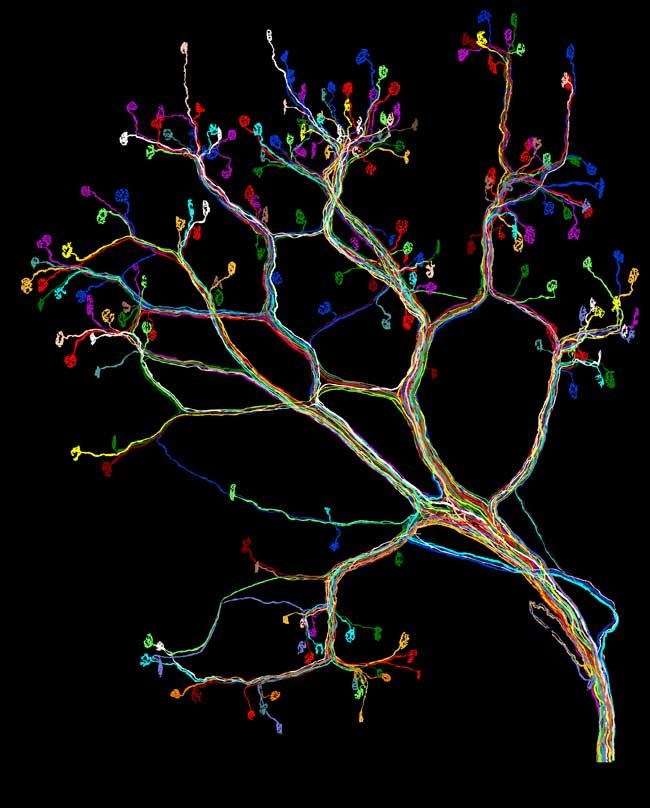

The challenges of merging results across areas of investigation are perhaps most striking in the field of neuroscience, which spans small and large spatial scales and timeframes, from the near-instantaneous firing of a neuron (the human brain has about 80 billion neurons) to the behavior of people across their life spans.

Uniting these diverse areas of study will likely be necessary to achieve a deep understanding of brain disorders, but several hurdles stand in the way, according to a trio of neuroscientists who reported their concerns today (Feb. 10) in the journal Science.

Gaining momentum

Neuroscience has made some headway in unifying different research areas. The goal of the Human Connectome Project (HCP), for instance, is to map connections among neurons using evidence from brain-imaging studies and link the findings to behavioral tests and DNA samples from more than 1,000 healthy adults. Researchers will be able to navigate the freely available data to come up with new ideas about the brain.

"The insights emerge from comparing across a whole collection of studies looking at similar, but not identical, questions," said David Van Essen, a neuroscientist at Washington University in St. Louis who is involved in the HCP and co-wrote a commentary in the journal Science. "In the long run, suitable investments in the data-mining and informatics infrastructure will actually accelerate progress in understanding and treating diseases."

Data mining involves extracting patterns from large datasets, and informatics refers to using computers and statistics to accumulate, manipulate, store and retrieve knowledge. These approaches have allowed researchers, for example, to figure out the structure of proteins based on DNA sequences and predict drug responses in patients.

Another government-funded initiative, the Neuroscience Information Framework (NIF), was launched in 2005 to gather scattered neuroscience-related resources, including about 30 million records, into one Web-based catalog. The catalog uses standardized terminology meant to smooth the process of data mining and unite disparate fields.

Long march ahead

But these efforts are just the beginning, said Maryann Martone, a commentary co-author and neuroscientist at the University of California, San Diego, who is leading the current phase of NIF. Scientists need to be more willing to share their data, and funding agencies should support refined technology that can integrate and search rich datasets, she said.

"Neuroscience now has to really grapple with what to do with all the data and start to think about it less like we used to as individuals and more like a community," Martone told LiveScience.

These steps will become more important as datasets expand and reach a level of complexity that is beyond human comprehension, said Huda Akil, a neuroscientist at the University of Michigan who co-wrote the Science article.

"What we're seeing is the limits of the human mind in dealing with information," Akil said. "We have to use these informatics strategies and data-handling strategies to supplement our minds and bring all of that within a framework that our minds can handle."

Climate conundrum

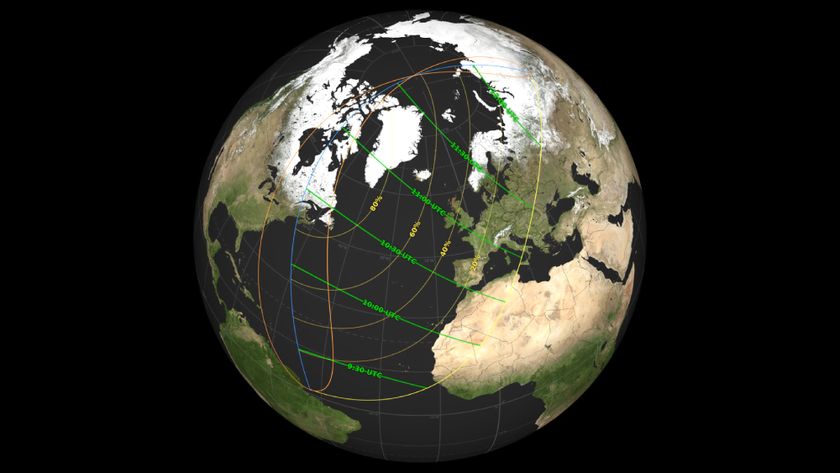

But the information overload isn't limited to one discipline. Climate scientists are also wrestling with linking distinct types of data, such as satellite observations of clouds and computer-model outputs. Bringing together details regarding the atmosphere, ocean, land, sea ice and the carbon cycle is essential for creating realistic climate simulations, said Jerry Meehl, a co-author of a separate Science commentary and a climate researcher at the National Center for Atmospheric Research (NCAR) in Boulder, Colo.

Meehl co-chairs a working group of the Coupled Model Intercomparison Project (CMIP), which has invited international teams to archive, compare and improve climate model output since 1995. CMIP provides free access to data generated by a variety of models — some that focus on a few types of observations, and others that incorporate the carbon cycle. As measurements and models become more complicated, it will be crucial to develop better data-processing procedures and user-friendly repositories, Meehl said.

But a large amount of climate data is still behind closed doors, due to government interest in selling intellectual property for commercial applications. For example, governments can sell satellite data to shipping or insurance companies, whose activities and business decisions are affected by environmental conditions. To enlighten policymakers interested in mitigating the effects of global warming, the information will need to reach a wider audience, Meehl told LiveScience.

"As a taxpayer, I want to know that we’re making the most of our investment in science and using it to benefit society," Meehl said.

Akil agreed, adding that "dealing with that amount of information is necessary for us to use science to help society at many levels, whether we're talking about global warming or brain disorders."