Supercomputer 'Titans' Face Huge Energy Costs

Warehouse-size supercomputers costing $1 million to $100 million can seem as distant from ordinary laptops and tablets as Greek immortals on Mount Olympus. Yet the next great leap in supercomputing could not only transform U.S. science and innovation, but also put much more computing power in the hands of consumers.

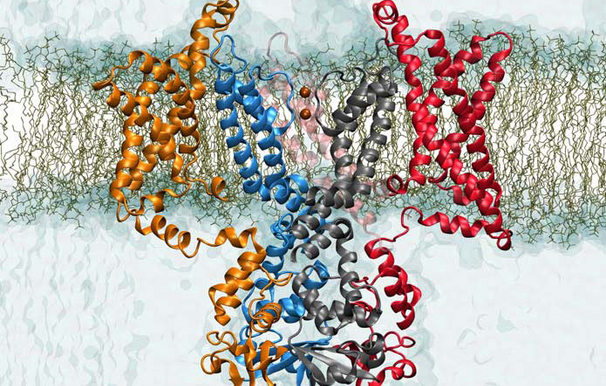

The next generation of "exascale" supercomputers could carry out 1 billion billion calculations per second, 1,000 times faster than the most powerful supercomputers today. Such supercomputers could accurately simulate the internal combustion engines of cars, jet engines and even nuclear fusion reactors for the very first time. They would also enable "SimEarth" models of the planet down to the 1-kilometer scale (compared with 50 or 100 km today), or simulations of living cells that capture the molecular, chemical, genetic and biological levels all at once.

"Pretty much every area of science is driven today by theory, experiment and simulation," said Steve Scott, chief technology officer of the Tesla business unit at NVIDIA. "Scientists use machines to run a virtual experiment to understand the world around us."

But the future of supercomputing has a staggering energy cost — just one exascale supercomputer would need the power equivalent to the maximum output of the Hoover Dam. To get around that problem, computer scientists and mathematicians must dream up an entirely new type of computer architecture that prizes energy efficiency.

Researchers gathered to discuss those challenges during a workshop held by the Institute for Computational and Experimental Research in Mathematics at Brown University in January.

"We've reached the point where existing technology has taken us about as far as we can go with present models," said Jill Pipher, director of ICERM. "We've been increasing computing power by 1,000-fold every few years for a while now, but now we've reached the limits."

We can rebuild them


Computer engineers have managed to double the number of transistors they can squeeze into the same microchip area every few years, a trend known as Moore's law, while keeping power requirements steady. But even if they could pack enough transistors onto a microchip to make exascale computing possible, the power required would be too great.

"We're entering a world constrained not by how many transistors we can put [on] a chip or whether we can clock them as fast as possible, but by the heat that they generate," Scott told InnovationNewsDaily. "The chip would burn and effectively melt."

That requires a radical redesign of computer architecture to make it much more energy efficient. The U.S. Department of Energy wants to find a way to build an exascale supercomputer by 2020 that would use less than 20 megawatts of power, roughly one-hundredth of the 2,074 megawatts (the Hoover Dam's maximum capacity) that such a machine would need with today's technology.
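The DOE target implies an efficiency goal that can be worked out from the article's own figures. The sketch below assumes the machine sustains exactly 1 billion billion (10^18) calculations per second while drawing the full 20-megawatt budget, and it ignores how that power would be split among processors, memory, networking and cooling.

```python
# Back-of-the-envelope check of the DOE exascale power target.
# Assumption: the machine sustains exactly 1e18 calculations per second
# and draws the full 20 MW budget.

EXA_CALCS_PER_SECOND = 1e18   # "1 billion billion calculations per second"
DOE_BUDGET_WATTS = 20e6       # 20 megawatts
HOOVER_DAM_WATTS = 2_074e6    # Hoover Dam maximum capacity, 2,074 megawatts


def calcs_per_second_per_watt(calcs_per_second: float, watts: float) -> float:
    """Sustained calculations per second delivered for each watt drawn."""
    return calcs_per_second / watts


def joules_per_calculation(calcs_per_second: float, watts: float) -> float:
    """Energy spent on each calculation (a watt is one joule per second)."""
    return watts / calcs_per_second


required = calcs_per_second_per_watt(EXA_CALCS_PER_SECOND, DOE_BUDGET_WATTS)
energy = joules_per_calculation(EXA_CALCS_PER_SECOND, DOE_BUDGET_WATTS)
reduction = HOOVER_DAM_WATTS / DOE_BUDGET_WATTS

print(f"required efficiency: {required / 1e9:.0f} billion calculations/s per watt")
print(f"energy budget: {energy * 1e12:.0f} picojoules per calculation")
print(f"power reduction vs. Hoover Dam figure: {reduction:.1f}x")
```

Running it prints 50 billion calculations per second per watt, 20 picojoules per calculation and a 103.7x reduction, in line with the article's "roughly one-hundredth."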

Changing computer architecture also requires a rewrite of the software programs that run on today's computers. The job of figuring out that puzzle falls to applied mathematicians.

"When code is written, it's written for computers where memory is cheap," Pipher explained. "Now, if you're building these new machines, you're going to have to try writing programs in different ways."

You say CPU, I say GPU

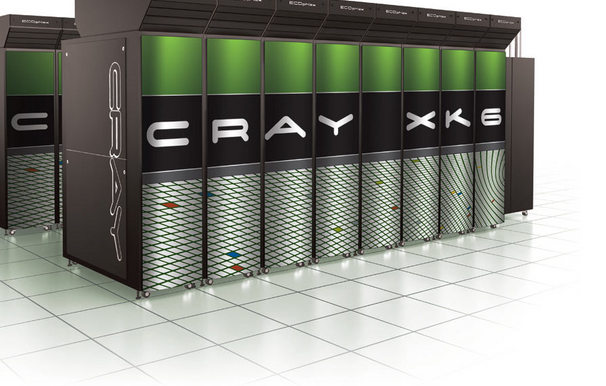

Today's fastest supercomputers resemble hundreds of fridge-size cabinets packed inside huge rooms. Each of those cabinets can house more than 1,000 central processing units (CPUs), where one CPU is roughly equivalent to the "brain" that carries out software program instructions inside a single laptop.

The latest generation of petascale supercomputers (capable of 1 quadrillion calculations per second) has gotten by using thousands of CPUs networked together. But each CPU is designed to run a few tasks as quickly as possible with less regard for energy efficiency, and so CPUs won't do for exascale supercomputers.

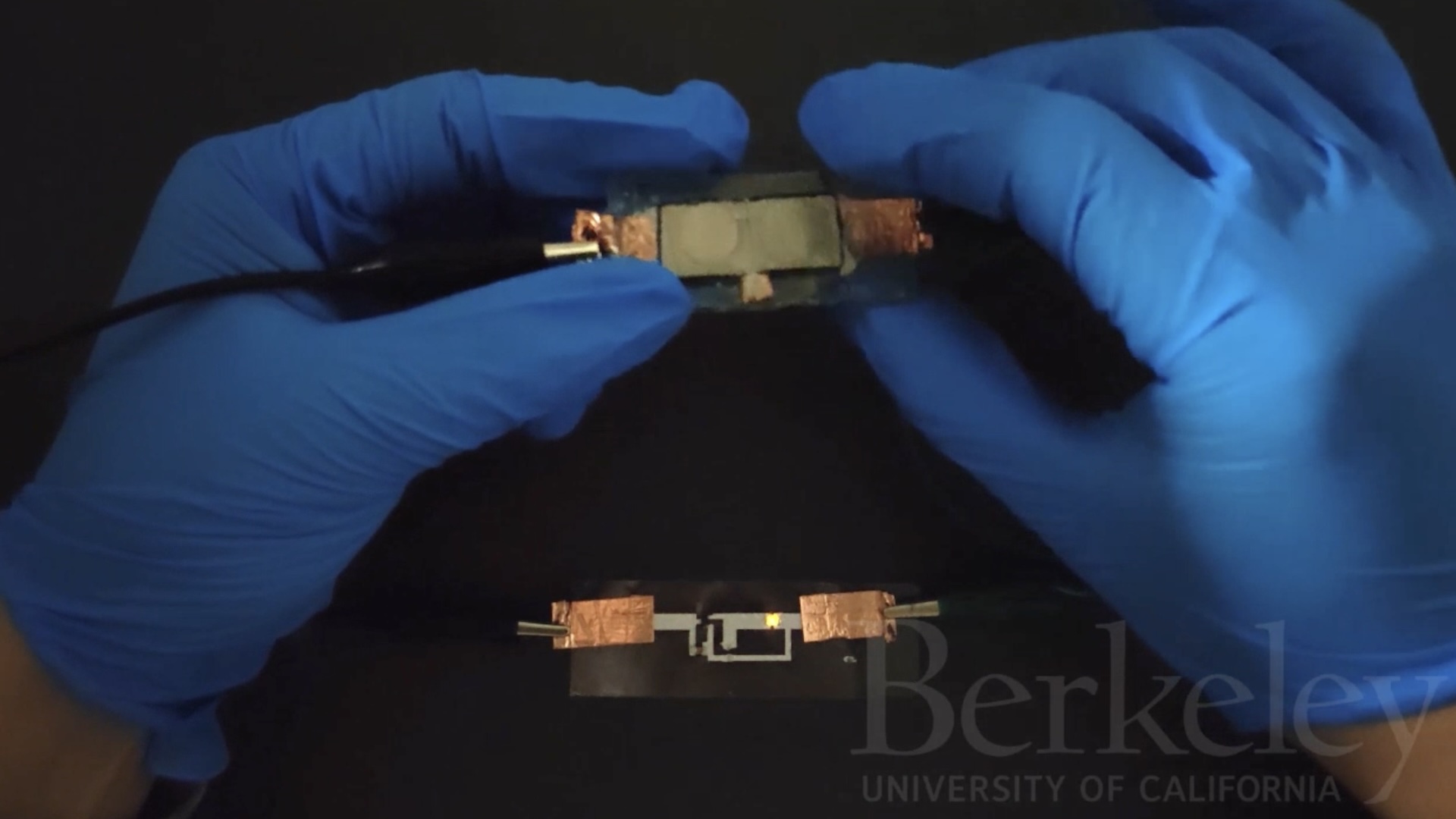

A promising solution comes from a company well known among PC gamers. About a decade ago, NVIDIA created graphics processing units (GPUs) that focus on running many tasks at once efficiently, a necessity for rendering the rich graphics of a video or game playing on a computer.

The energy savings can be huge: a GPU uses almost eight times less energy than a CPU per calculation or instruction.

"GPUs were designed with power efficiency in mind first, not running a single task quickly," Scott said. "That's why they're uniquely qualified for this challenge. We have to [be] much more efficient about how much more work we can do per watt [of energy]."
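To see what an eightfold saving per calculation could mean at exascale, the sketch below scales a machine's power draw by the per-calculation energy ratio. It assumes, purely for illustration, that the Hoover Dam-sized figure of 2,074 megawatts describes a CPU-based exascale design and that every calculation gets the full eightfold saving; real systems also spend power on memory, networking and cooling, which this ignores.

```python
# Illustrative only. Assumptions: the 2,074 MW figure describes a CPU-based
# exascale machine, and every calculation moves to a GPU that uses 1/8 the
# energy per calculation. Memory, networking and cooling are ignored.

CPU_EXASCALE_WATTS = 2_074e6   # assumption: CPU-based design at Hoover Dam scale
GPU_ENERGY_RATIO = 1 / 8       # "almost eight times less energy" per calculation
DOE_BUDGET_WATTS = 20e6        # DOE target of 20 megawatts


def power_after_switch(baseline_watts: float, energy_ratio: float) -> float:
    """Power needed for the same workload when each calculation costs
    energy_ratio times as much energy as before."""
    return baseline_watts * energy_ratio


gpu_watts = power_after_switch(CPU_EXASCALE_WATTS, GPU_ENERGY_RATIO)
print(f"GPU-based estimate: {gpu_watts / 1e6:.0f} MW")
print(f"still over the 20 MW budget by: {gpu_watts / DOE_BUDGET_WATTS:.1f}x")
```

Under these assumptions the switch alone brings the estimate down to roughly 259 megawatts, still about 13 times the DOE budget, consistent with the article's point that exascale calls for a broader rethink of computer architecture.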

NVIDIA GPUs already reside within three of the world's fastest supercomputers, including China's Tianhe-1A in second place. GPUs will also boost the $100 million Titan supercomputer scheduled for installation at the Oak Ridge National Laboratory in Oak Ridge, Tenn. — a petascale supercomputer that could once again make the U.S. home to the world's fastest supercomputer.

Better computers for all

The road to exascale computing won't be easy, but NVIDIA has a timeline for new generations of GPUs that could lead to such a supercomputer by 2018. The company's "Kepler" GPU is expected to deliver 5 billion calculations per second for each watt of power when it debuts in 2012, whereas the next-generation "Maxwell" GPU might reach 14 billion calculations per second per watt by 2014.
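Read as calculations per second per watt, NVIDIA's roadmap figures can be compared against the DOE's 20-megawatt goal. The sketch below assumes that reading of the numbers and, for simplicity, counts only the power drawn by the GPUs themselves.

```python
# Assumptions: the roadmap figures are sustained calculations per second
# per watt, and the GPUs are the only power draw (memory, networking and
# cooling are ignored).

EXA_CALCS_PER_SECOND = 1e18   # exascale: 1 billion billion calculations per second
DOE_BUDGET_WATTS = 20e6       # DOE target of 20 megawatts

gpu_efficiency = {            # calculations per second per watt
    "Kepler (2012)": 5e9,
    "Maxwell (2014)": 14e9,
}


def exascale_power_watts(calcs_per_second_per_watt: float) -> float:
    """Power an exascale machine would draw at the given efficiency."""
    return EXA_CALCS_PER_SECOND / calcs_per_second_per_watt


for name, efficiency in gpu_efficiency.items():
    watts = exascale_power_watts(efficiency)
    print(f"{name}: {watts / 1e6:.0f} MW, "
          f"{watts / DOE_BUDGET_WATTS:.1f}x the 20 MW target")
```

On those assumptions, an exascale machine would draw about 200 MW at Kepler's efficiency and about 71 MW at Maxwell's. Neither chip alone reaches the roughly 50 billion calculations per second per watt that a 20-megawatt exascale machine implies, which fits a roadmap that extends beyond Maxwell toward 2018.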

But NVIDIA didn't invest in high-performance computing just to build a handful of huge supercomputers each year — especially when each generation of GPUs costs about $1 billion to develop. Instead, it sees the supercomputing investment leading to more powerful computers for a much bigger pool of customers among businesses and individuals.

The same microchips inside supercomputers can end up inside the home computer of a gamer, Scott pointed out. In that sense, each new generation of more powerful chips eventually makes more computing power available at lower cost, to the point where today's rarest supercomputers can become tomorrow's ordinary machines.

For moving science and innovation forward, that outcome would be anything but ordinary.

"When you can build a petascale system for $100,000, it starts becoming very affordable for even small departments in a university or even small groups in private industry," Scott said.

This story was provided by InnovationNewsDaily, a sister site to LiveScience. You can follow InnovationNewsDaily Senior Writer Jeremy Hsu on Twitter @ScienceHsu. Follow InnovationNewsDaily on Twitter @News_Innovation, or on Facebook.