Why Seeing Around Corners May Become Next 'Superpower'

Superman had X-ray vision, but a pair of scientists has gone one better: seeing around corners.

Ordinarily, the only way to see something outside your line of sight is to stand in front of a mirror or similarly highly reflective surface. Anything behind you or to the side of you reflects light that then bounces off the mirror to your eyes.

But if a person is standing in front of a colored wall, for example, she can't see anything around a corner, because the wall not only absorbs much of the light reflected from nearby objects but also scatters the rest in many directions. (This is especially true of anything with a matte finish.)

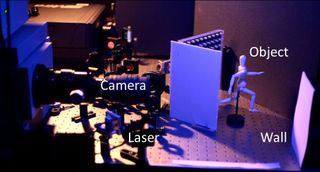

MIT researchers Ramesh Raskar and Andreas Velten got around this issue using a laser, a beam-splitter and a sophisticated algorithm. They fired the laser through the beam-splitter and at a wall in pulses lasting about 50 femtoseconds each. (A femtosecond is a millionth of a billionth of a second, or the time it takes light to travel about 300 nanometers.)

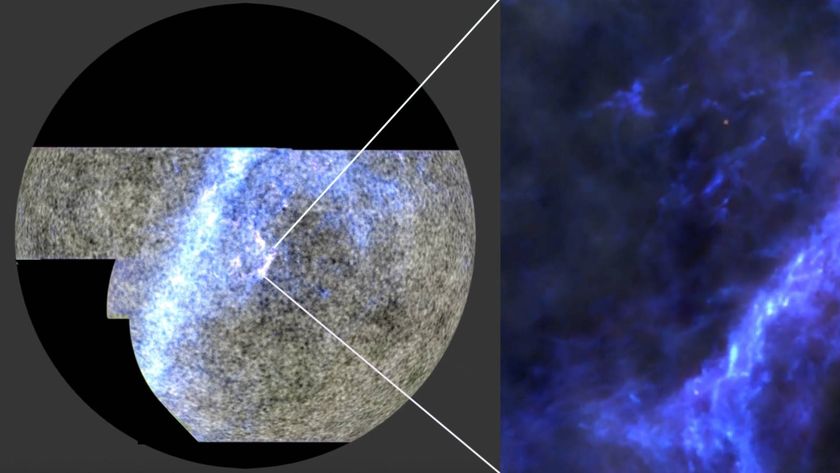

When the laser light hits the splitter, half of it travels to the wall and bounces from there to the object around the corner. The light reflects off the object, hits the wall again, and then returns to a camera. The other half of the beam goes directly to the camera. This half serves as a reference for measuring how long the other photons (particles of light) take to return to the camera.
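
The timing in this setup comes down to path length divided by the speed of light. The short Python sketch below computes the delay for the laser, wall, object, wall, camera route. The coordinates are hypothetical, and for simplicity it assumes the reference pulse marks t = 0, so the reference path's own length is ignored.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def path_length(points):
    """Total length, in meters, of the polyline through a sequence of 3D points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def three_bounce_delay(laser, wall_in, hidden, wall_out, camera):
    """Delay in seconds for light travelling laser -> wall -> hidden object -> wall -> camera.

    Simplifying assumption: the reference pulse defines t = 0, so this is the
    full path length divided by c rather than a difference of two paths.
    """
    return path_length([laser, wall_in, hidden, wall_out, camera]) / C


if __name__ == "__main__":
    origin = (0.0, 0.0, 0.0)  # hypothetical: laser and camera at the same spot
    delay = three_bounce_delay(
        origin,           # laser
        (0.0, 3.0, 0.0),  # spot the laser hits on the wall
        (4.0, 0.0, 0.0),  # hidden object (an occluder, not modeled, blocks the direct view)
        (4.0, 3.0, 0.0),  # wall spot the camera is watching
        origin,           # camera
    )
    print(f"{delay * 1e9:.2f} ns")  # 3 + 5 + 3 + 5 = 16 m of travel -> prints 53.37 ns
```

Moving the hidden point farther away lengthens the path and delays its echo; that difference in arrival times is what the reconstruction works from.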

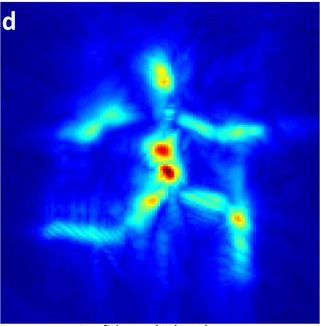

By using a special algorithm to analyze when the returning photons arrive, relative to the reference beam, the scientists were able to reconstruct an image of the object they were trying to see. Velten noted that photons that hit an object in the room return sooner than those that bounce off the rear wall, and the algorithm accounts for that. They could even see three-dimensional objects, such as a mannequin of a running man used in the experiment.
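
Each measured arrival time fixes a total path length, and because the laser-to-wall and wall-to-camera legs are known, it confines the hidden point to an ellipse (an ellipsoid in 3D) whose foci are the two wall spots. Backprojection is one common way to combine many such constraints: every candidate location earns a vote for each measurement it is consistent with, and the location with the most votes wins. The Python below is a toy, noise-free 2D version of that idea under assumed geometry (a flat wall along y = 0, laser and camera at one hypothetical point, a single hidden point); it illustrates the principle and is not the researchers' algorithm or code.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def travel_time(laser, wall_in, point, wall_out, camera):
    """Seconds for light to go laser -> wall_in -> point -> wall_out -> camera (2D points)."""
    d = (math.dist(laser, wall_in) + math.dist(wall_in, point)
         + math.dist(point, wall_out) + math.dist(wall_out, camera))
    return d / C


def simulate(hidden, wall, laser, camera):
    """Noise-free arrival times for every (illuminated spot, observed spot) pair on the wall."""
    return [(wi, wo, travel_time(laser, wi, hidden, wo, camera))
            for wi in wall for wo in wall]


def backproject(measurements, grid, laser, camera, tol):
    """Give each candidate point one vote per measurement its predicted time matches within tol seconds."""
    return {
        p: sum(abs(travel_time(laser, wi, p, wo, camera) - t) <= tol
               for wi, wo, t in measurements)
        for p in grid
    }


if __name__ == "__main__":
    wall = [(0.25 * k, 0.0) for k in range(-4, 5)]   # 9 sampled spots on the wall
    laser = camera = (0.0, -2.0)                     # hypothetical shared position
    hidden = (1.5, -1.0)                             # the "unknown" object to recover
    grid = [(round(0.5 + 0.1 * i, 6), round(-2.0 + 0.1 * j, 6))
            for i in range(21) for j in range(16)]   # candidate locations, camera side only
    scores = backproject(simulate(hidden, wall, laser, camera), grid, laser, camera, tol=1e-12)
    print("best guess:", max(scores, key=scores.get))  # prints best guess: (1.5, -1.0)
```

Because every wall spot here lies on one line, the object's mirror image across the wall would fit the times equally well, which is why the candidate grid covers only the camera's side. Real measurements are noisy and the hidden scene is a surface rather than a point, so a practical reconstruction would need a tolerance matched to the camera's timing resolution and further processing that this sketch omits.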

The resolution, of course, is nowhere near as good as that of the human eye. The system can pick up centimeter-size details at a distance of a few meters, so it can resolve only relatively large objects. Raskar noted that shorter exposure times could boost resolution; the camera currently uses exposures measured in picoseconds. Even so, the method is useful for detecting things that, for whatever reason, are not directly in the line of sight. Velten also noted that a similar algorithm could be used to reconstruct images of the inside of a backlit object, something he wants to explore in medical imaging with visible light, which avoids the harmful effects of X-rays as well as their limitations, such as not being able to "see" soft tissue well.
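
Why shorter exposures help follows from the same time-of-flight relation: two paths can only be told apart if their arrival times differ by more than the camera's timing window, and a window of length Δt corresponds to a path-length blur of c·Δt. The sketch below simply does that arithmetic for a few window lengths chosen for illustration, not taken from the study. It covers only the timing contribution; the centimeter-scale figure quoted above presumably also reflects other factors, such as how densely the wall is sampled, which this sketch does not model.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def path_blur_m(window_s):
    """Path-length uncertainty (m) implied by a timing window of window_s seconds: c * dt."""
    return C * window_s


if __name__ == "__main__":
    for ps in (1, 10, 30):  # illustrative exposure windows in picoseconds
        print(f"{ps:>3} ps -> {path_blur_m(ps * 1e-12) * 1e3:.2f} mm of path")
```

A 1-picosecond window corresponds to roughly 0.3 mm of path and a 30-picosecond window to roughly 9 mm, so the blur shrinks in direct proportion to the exposure.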

Raskar and Velten are no strangers to playing with photons. In December 2011, they demonstrated a camera that could capture a trillion frames per second.

Robert Boyd, a professor of optics at the University of Rochester, wrote in an email to LiveScience that he is familiar with the duo's "seeing around corners" work and that it is fundamentally sound. He is less sure how useful it will prove to be, though he added that there is no reason it couldn't be implemented in the real world outside the lab.

For his part, Raskar has always been fascinated with the unseen. "When I was a teenager, it has always bothered me that the world is created around me in real time, that it doesn't exist if I do not look at it," he said. "And so I started thinking about that: ways to make the invisible visible."

The team foresees applications for the technique in anything that requires seeing beyond the line of sight. "It really changes what we can do with a camera," Raskar said. "All of a sudden, the line of sight is no longer a consideration."

The work is being published online Tuesday (March 20) in the journal Nature Communications.