Touch Photography: Giving Computer Users a Feel for Things

This Behind the Scenes article was provided to LiveScience in partnership with the National Science Foundation.

A new type of camera that captures how a surface feels is exactly the kind of technology mechanical engineer Katherine Kuchenbecker believes will change the way humans and computers interact. And she’s helping make that happen.

With support from the National Science Foundation, Kuchenbecker, who works at the University of Pennsylvania, is developing ways to capture how objects feel and recreate the sensation on the screens of computers and other devices. She calls this approach haptography, or haptic (touch) photography.

"If you can see something on your computer, why shouldn't you be able to feel it?" she asks. "Touch is an important part of the sensory experience of being a human."

Applications

Extending everyday human-computer interaction beyond sight and sound opens up a range of applications.

An online shopper, for example, might feel the texture of a pair of pants before deciding whether to buy them. A museum visitor might experience haptic impressions of a rare archeological artifact without having to hold the actual object. And digital artists might relish the ability to feel virtual drawing surfaces as they create masterpieces.

Beyond these uses, Kuchenbecker’s work on haptography is particularly motivated by medical training and simulation.

"We hope the technology, the hardware and the software that we're creating will eventually feed back into improved training for doctors and dentists and other clinicians," she said. "We want to let them feel what an interaction is going to feel like, whether it's surgery, inserting an epidural needle, drilling a cavity in a tooth, or practicing some other psychomotor skill with their hands. I want to let them practice these tasks in a safe, rich and challenging computer environment so they can learn those skills before they go work on a real patient."

How haptography works

When it comes to creating haptographs of a surface, such as a piece of canvas or wood, the key instrument is a tool equipped with sensors that measure tool position, contact force, and high-bandwidth contact accelerations. The haptographer repeatedly drags this tool over the actual surface of the object while the computer records all the signals.

"We make haptic sensory recordings of what you feel as you use this sensorized tool to touch the real surface," said Kuchenbecker. "We then process those recordings using computer algorithms to pull out the salient features."
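The article does not say which features the team's algorithms extract. As a rough illustration only, the sketch below reduces one drag recording to three summary numbers: average speed, average contact force and the root-mean-square (RMS) strength of the vibration. The `Sample` type, its field names and units, and the `summarize` function are hypothetical, chosen for this example rather than taken from Kuchenbecker's software.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One reading from a hypothetical sensorized tool."""
    t: float      # time, in seconds
    x: float      # tool position along the surface, in metres
    force: float  # contact force, in newtons
    accel: float  # contact acceleration, in m/s^2


def summarize(samples):
    """Reduce one drag recording to mean speed, mean force and RMS acceleration."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    duration = samples[-1].t - samples[0].t
    if duration <= 0:
        raise ValueError("samples must be in increasing time order")
    # Total distance travelled, summed over consecutive pairs of samples.
    distance = sum(abs(b.x - a.x) for a, b in zip(samples, samples[1:]))
    mean_force = sum(s.force for s in samples) / len(samples)
    rms_accel = math.sqrt(sum(s.accel ** 2 for s in samples) / len(samples))
    return {
        "speed": distance / duration,
        "force": mean_force,
        "rms_accel": rms_accel,
    }
```

Summaries like these, gathered over many drags at different speeds and pressures, are one plausible way to describe a surface compactly; a real system would likely keep much richer information about the vibration signal.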

According to Kuchenbecker, a user can later experience a virtual version of how the surface felt by dragging a stylus equipped with a voice coil actuator (a type of motor that can shake back and forth) across the surface of a computer screen.

Measuring touch

"We measure how hard you're pushing and how fast you're moving the stylus, and then we use that motor on the stylus to shake your fingers in just the same way as they would have moved when you touched the real surface," she said.

The virtual surfaces feel real because the computer automatically adjusts the sensations it plays back whenever the user's movement changes.
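One simple way to picture that adjustment is a lookup table of vibration strengths measured at a few speeds and forces, with the computer blending between the nearest entries as the user moves. The sketch below does this with bilinear interpolation. It is an assumption made for illustration, not the method the team describes, and the names `playback_amplitude`, `speeds`, `forces` and `table` are hypothetical.

```python
from bisect import bisect_right


def _bracket(grid, value):
    """Return (lower index, upper index, blend weight) for value on a sorted grid."""
    value = min(max(value, grid[0]), grid[-1])  # clamp to the recorded range
    hi = min(bisect_right(grid, value), len(grid) - 1)
    lo = max(hi - 1, 0)
    span = grid[hi] - grid[lo]
    weight = 0.0 if span == 0 else (value - grid[lo]) / span
    return lo, hi, weight


def playback_amplitude(speed, force, speeds, forces, table):
    """Blend recorded vibration strengths for the current speed and force.

    table[i][j] holds the strength recorded at speeds[i] and forces[j].
    """
    i0, i1, ws = _bracket(speeds, speed)
    j0, j1, wf = _bracket(forces, force)
    # Interpolate along the force axis first, then along the speed axis.
    low = table[i0][j0] * (1 - wf) + table[i0][j1] * wf
    high = table[i1][j0] * (1 - wf) + table[i1][j1] * wf
    return low * (1 - ws) + high * ws
```

Called many times per second with fresh speed and force readings, a function like this would give the motor a new target strength each time, so pressing harder or moving faster changes what the user feels.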

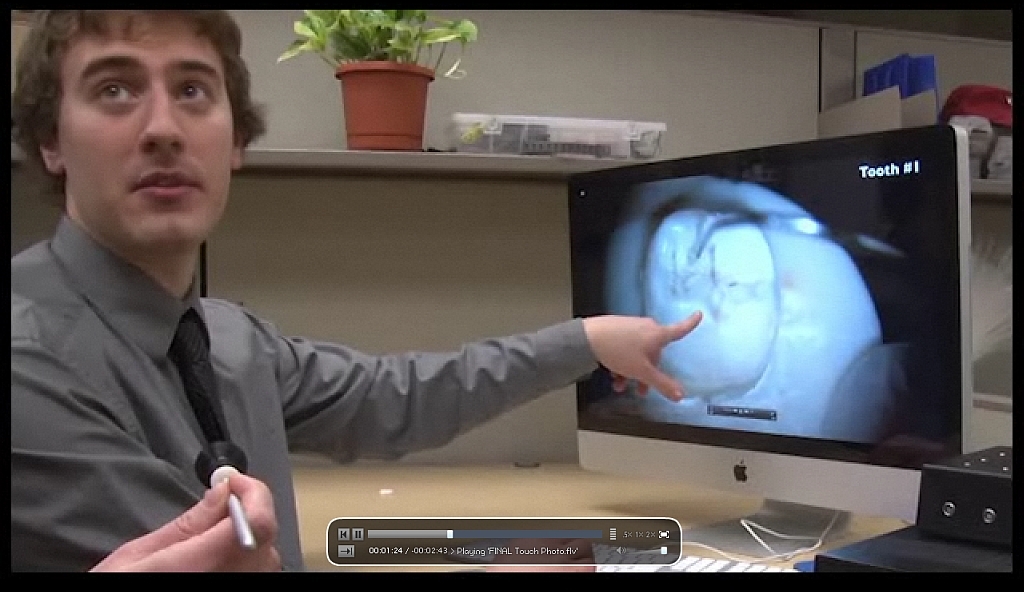

Kuchenbecker’s team is also exploring how the technology can be combined with training videos in areas such as dentistry.

"We attach a tiny high-bandwidth accelerometer onto the probe used by the dentist. As they explore a tooth, we record everything they feel, and we put it along with the video, in what I call the touch track," explains Kuchenbecker. "There's the video that you see, the sound track that you hear, and then the touch track that you feel."

"We can play all three back together for a learner to experience," she said. "In this way, a trainee can see what the dentist saw, hear what the dentist heard, and, holding another tool that has a motor inside of it, feel what the dentist felt."
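To play a touch track in step with the picture, the vibration samples have to be matched to video frames. The sketch below shows one minimal way to do that, assuming the accelerometer and the camera start at the same moment and run at fixed, whole-number rates. The function `build_touch_track` and its parameters are hypothetical and are not drawn from the team's system.

```python
def build_touch_track(accel_samples, sample_rate_hz, frame_rate_hz):
    """Group acceleration samples by the video frame during which each was recorded.

    Assumes both recordings start together and both rates are positive integers.
    Returns a dict mapping frame index to the list of samples for that frame.
    """
    if sample_rate_hz <= 0 or frame_rate_hz <= 0:
        raise ValueError("rates must be positive")
    track = {}
    for n, accel in enumerate(accel_samples):
        # Sample n is taken at time n / sample_rate_hz; integer arithmetic
        # avoids rounding surprises when working out which frame that is.
        frame = (n * frame_rate_hz) // sample_rate_hz
        track.setdefault(frame, []).append(accel)
    return track
```

Because an accelerometer samples far faster than a video camera records frames, each frame ends up with a short burst of vibration data that the motor in the learner's tool could replay while that frame is on screen.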

The goal of enhancing virtual reality is among the National Academy of Engineering’s Grand Challenges for the 21st century. Kuchenbecker and her team of students have their hands all over this one, so to speak.

"This challenge of enriching human-computer interaction is one that many, many people are working on from many avenues," she said. "We hope to make contributions in terms of widening and broadening the sensory feedback you can receive."

Editor's Note: The researchers depicted in Behind the Scenes articles have been supported by the National Science Foundation, the federal agency charged with funding basic research and education across all fields of science and engineering. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author and do not necessarily reflect the views of the National Science Foundation. See the Behind the Scenes Archive.