Baseball Helps Humanize the Supreme Court

This Behind the Scenes article was provided to LiveScience in partnership with the National Science Foundation.

Gotta love the Cubbies. Thanks to them, a database of U.S. Supreme Court audio recordings is now freely available to the public. Too much of a stretch? Not really, because the tool grew out of one man’s love of the Chicago Cubs, technology and the study of law.

One sunny afternoon at Wrigley Field 20 years ago, Jerry Goldman, then a political science professor at Northwestern University, was sitting in the bleachers enjoying a game with a couple of students. They considered ways that baseball is a metaphor for the U.S. Supreme Court: nine players, nine justices. One game turns on great pitches and amazing catches; the other on oral arguments and thoughtful rulings.

If baseball cards explained vital details about a player’s career, Goldman figured, why not create cards for the justices and add video and audio? The project seemed achievable, given the advent of HyperCard, an application and programming tool for early Apple computers. “My colleagues thought I was crazy [to pursue these technology projects],” says Goldman, now a professor at the Illinois Institute of Technology (IIT) Chicago-Kent College of Law. “But I believed information technology was going to change the way the world worked.”

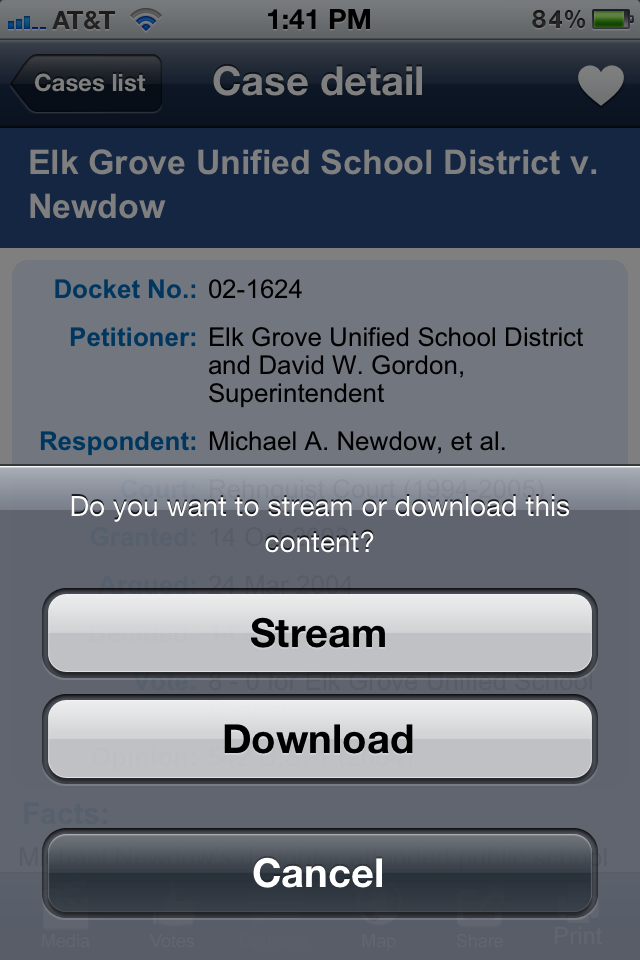

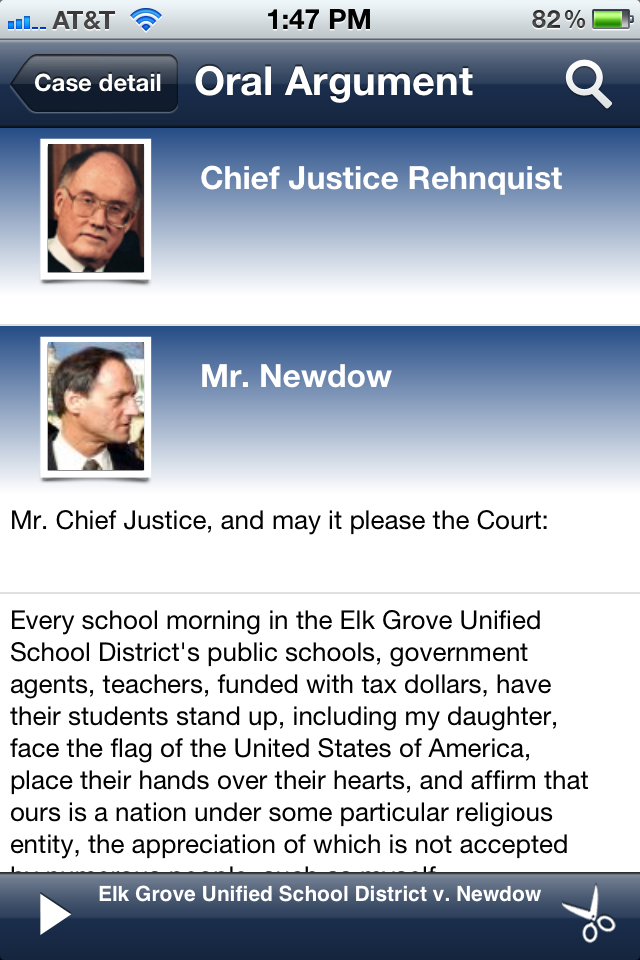

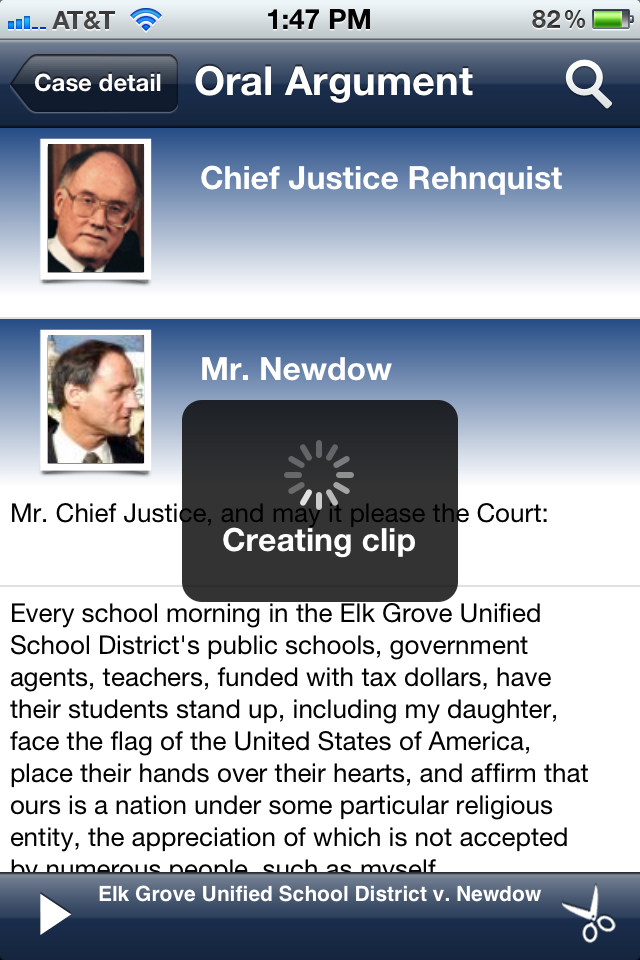

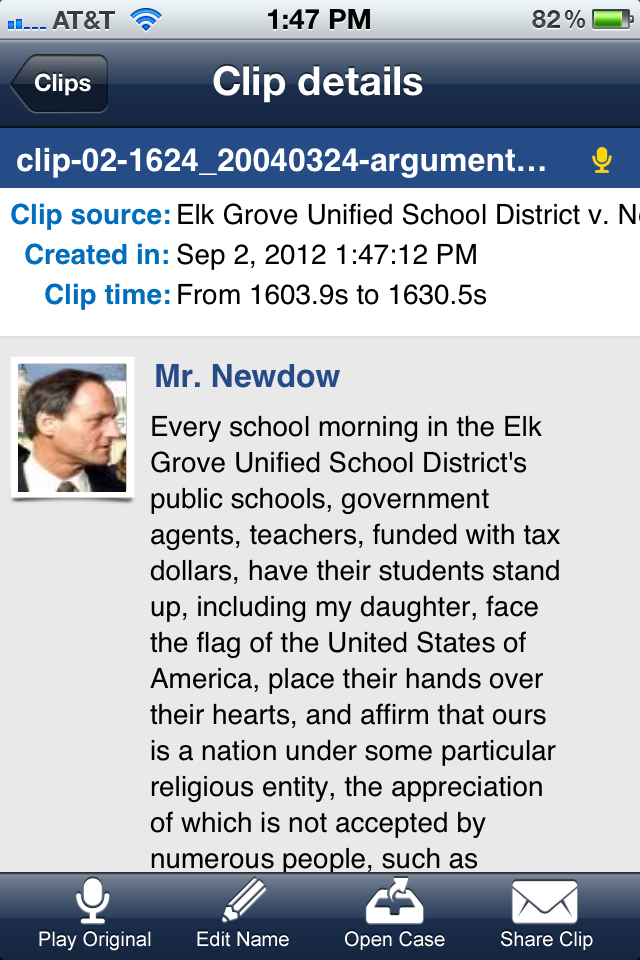

Goldman’s quest to “really humanize the Supreme Court” led to the development of the NSF-funded Oyez Project, a multimedia archive that includes a searchable trove of oral arguments that the court has heard since 1955. An app for mobile devices, ISCOTUSnow, is also available.

“The principal objective was to take the court down from exalted status and bring it to the public,” says Goldman. “We also wanted to make available the vast amount of data associated with the court.”

Creating searchable audio and video

To bring the Supreme Court to life, Goldman first persuaded the National Archives, which stores the court’s audio files, to permit him to copy the tapes for transcription and digitization. To make the newly digitized audiotapes searchable, Goldman collaborated with Mark Liberman, a computational linguistics professor at the University of Pennsylvania. Liberman adapted an algorithm that can match sounds on audiotapes with written transcripts. This work eventually led to the development of the Penn Forced Aligner, a tool now commonly used to align spoken sounds with written text.

“We essentially made a Google-like search engine for audio and video recordings,” says Liberman, who was drawn to the task because of the archives’ value for scholars and the public. He also welcomed the opportunity to create a search technique applicable to the growing collections of audio and video recordings available from a myriad of sources.

“[W]e were able to set up a model for how to approach searches in a cost-effective way. This may seem like a large project, but it is small compared to what’s now available online and what will be in the future,” says Liberman.

(Recently, Liberman’s colleagues at Oxford University and the British Library used the alignment tools to decipher recordings of the British National Corpus, an archive with a spoken portion of 100 million words gathered from participants who recorded their speech on Sony Walkmans.)

Analyzing the data

Next, Goldman analyzed almost 14,000 hours of audio of oral arguments from the Supreme Court. “There are countless questions you can ask about the dataset,” he says. “However, this is an unusual dataset, because it has multiple speakers and is spontaneous.” One of the first tasks was identifying each speaker in each oral argument, a challenge since roughly 11 speakers could be involved in an argument. In addition, for many years the transcripts did not tag questions with the justices’ names.

While taking on these challenges, Goldman and his collaborators — who included colleagues from Carnegie Mellon University and the University of Minnesota — compiled a number of interesting facts about the court’s workings since 1955:

- 32 justices over 58 years

- 8,600 advocates, 70 percent of whom appeared before the court only once

- 66 million words spoken

- More than 6,100 cases and more than 2,300 opinion announcements

- Longest argument: 1,300 minutes

- Shortest argument: 14 minutes

Justice Antonin Scalia, who has served 27 years on the court, holds the record for most talkative, with 7,200 minutes, while Felix Frankfurter, who served 23.5 years, comes in a close second at 7,000 minutes. The most restrained justices are Sherman Minton and Clarence Thomas. Although Minton served on the court for seven years, only his last year is on record. During his final term he is heard for just 17 minutes. Thomas, on the court since 1991, clocks in at 23 minutes.

While the Oyez Project provides legal scholars with a wealth of material to mine, linguistics researchers also analyze the recordings for various studies.

Taking the court to the people

To ensure the public and academics can probe the data with ease, Goldman’s team continues to make refinements and develop the interface. In the fall of 2013, search capabilities will be added to the data system to help users delve more deeply into the material. This new search capability will, for example, enable users to “search on the term ‘strict scrutiny,’ see it in the transcript, listen to it, and then do whatever listeners want to do with it,” explains Goldman.

Chicagoans are fond of saying, “Make no little plans.” Goldman is true to this statement. He wants to apply the tools developed in the Supreme Court project to all U.S. appellate courts. The plan is to develop web sites and mobile device applications. Recently, the Knight Foundation awarded the Oyez Project $600,000 to undertake this work for the state supreme courts in California, Florida, Illinois, New York and Texas.

“The apps are the coolest part,” says Goldman. They will follow the design of ISCOTUSnow, which is a collaborative effort between Goldman and Carolyn Shapiro, also a professor at IIT Chicago-Kent College of Law. ISCOTUSnow provides access to everything on the current Supreme Court docket, and includes audio and transcripts. With a simple motion, a user can flip through a transcript, search it and share a section with colleagues. “The best part?” says Goldman. “All this information is free.”

The scale of the Oyez project was one Goldman never imagined. “Without NSF support, we would still be struggling,” he says. “The NSF’s backing gave me the courage to think no little thoughts.”

Editor's Note: The researchers depicted in Behind the Scenes articles have been supported by the National Science Foundation, the federal agency charged with funding basic research and education across all fields of science and engineering. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author and do not necessarily reflect the views of the National Science Foundation. See the Behind the Scenes Archive.