Numbers That Become Memes Can Be Dangerous to Society (Op-Ed)

This article was originally published at The Conversation. The publication contributed the article to LiveScience's Expert Voices: Op-Ed & Insights.

Some numbers are both memorable and incorrect. Take the idea that we only use 10% of our brains. Despite there being no medical evidence for such a low figure, many people still believe it.

Part of the reason the myth has been so persistent – it first popped up in 1907 – is that it suggests we can improve ourselves, that we have unused potential. This is an appealing idea, so it spreads.

Repetition helps numbers take hold in the popular consciousness. Some values, like the 10% brain usage, are flawed to start with. Other numbers might be correct in a specific context, but come with important caveats, which are lost over time as the meme spreads.

In his book Outliers, Malcolm Gladwell used several case studies to explore the amount of time it takes for people to become world-class at activities like chess or music. Noting that researcher K. Anders Ericsson had in many cases found the average to be around 10,000 hours, Gladwell called this time-expertise trade-off the "10,000-Hour Rule".

Calling something a rule makes it catchy, and many people who read the book were left with the idea that “you can achieve mastery in any task by practising it for 10,000 hours”. That sounds like an inspiring and motivating concept: try hard enough and you can be good at anything.

But the anecdotes in Outliers didn’t support such a strong claim. As Gladwell later clarified, those 10,000 hours were an average, and the “rule” was only relevant for certain activities.


Risky counts

Once numbers become part of common parlance, it can be tricky to reattach the necessary subtleties. This can be a particularly big problem during a crisis. In 2009, a report in Australia suggested that the newly emerged swine flu virus could go on to kill 10,000 people in New South Wales.

Health agencies soon criticised this number, which was based on figures from the deadly 1918 pandemic, as alarmist. The situation in 2009 was different, they said, and the death toll for the whole country was unlikely to top 6,000. A 2012 study estimated the final total to have been between 400 and 1,600.

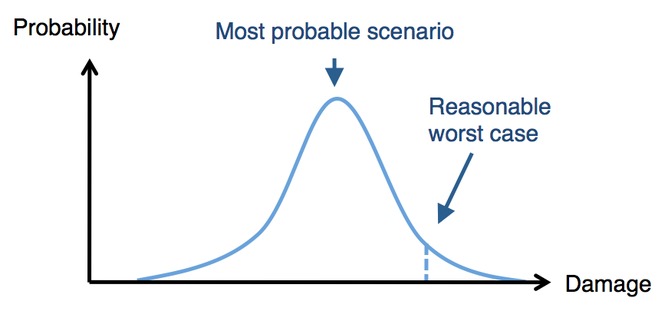

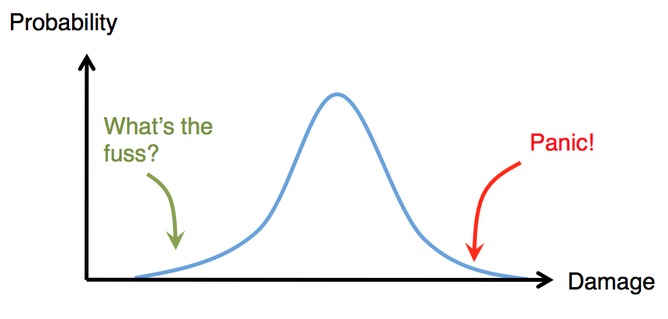

From disease outbreaks to nuclear emergencies, governments have to consider a number of possible outcomes. They might look at the “reasonable worst-case scenario”, which is not the absolute worst outcome, but the worst of those that are reasonably likely to occur. Or they might explore the “most probable scenario”: a likely, but not necessarily certain, outcome.

But the most likely outcome doesn’t necessarily make for the most exciting story. The temptation for emergency services is therefore to latch on to the more extreme (but far less likely) events on either side.

As well as being misinterpreted as they become more popular, numbers can also change. As in the game of telephone, they can become distorted each time they pass from one person to another.

When the numbers in question are related to health, they can cause serious problems. Take the example of researchers at Johns Hopkins University, who looked at the incubation period of certain infections and found worrying anomalies.

The incubation period for a disease measures the time between getting infected and the appearance of symptoms. Having an accurate estimate of this value is important for disease control. After a Canadian influenza H5N1 case was identified earlier this month, health officials were particularly vigilant over the following three to four days. Anyone who came into contact with an infected patient would likely develop symptoms during this time.

Knowing the incubation period can also help researchers assess how infections like influenza H7N9, which currently struggle to transmit between humans, might spread if they were to mutate and become more transmissible. The shorter the incubation period, the less time it takes for one case to cause another.

Yet when the researchers at Johns Hopkins looked at published estimates for different respiratory infections, they discovered several discrepancies. Half of the time, publications didn’t even say where their numbers came from. Others misquoted original medical evidence – or referenced papers that had misquoted this evidence – which led to incorrect estimates.

The researchers noted that in a well-known 1967 study, the incubation period of human coronavirus (the family of viruses that SARS and MERS belong to) was estimated to be between two and four days. When subsequent papers cited the value, though, some quoted it as exactly two days; one even said it was three to five days.

They found the same problems when looking at respiratory syncytial virus (RSV), which is responsible for many childhood chest infections. One textbook said it had an incubation period of four to eight days. But one in three people infected with RSV will show symptoms within four days. The gap between textbook and reality could lead clinicians to draw incorrect conclusions about infections.

From medicine to music lessons, it is crucial to know where numbers come from, and the context surrounding them. Such caveats are easily lost if a value is particularly memorable or appealing. As such values propagate, the problem often gets worse. It is tempting to forget about the original evidence when retelling a good story or citing a well-known source. But just because a number is popular, it doesn’t mean it is always correct.

Adam Kucharski does not work for, consult to, own shares in or receive funding from any company or organisation that would benefit from this article, and has no relevant affiliations.

The views expressed are those of the author and do not necessarily reflect the views of the publisher. This version of the article was originally published on LiveScience.
