Killer Robots: Natural Evolution, or Abomination?

Ask one technologist and he or she might say that lethal autonomous weapons — machines that can select and destroy targets without human intervention — are the next step in modern warfare, a natural evolution beyond today's remotely operated drones and unmanned ground vehicles. Others will decry such systems as an abomination and a threat to International Humanitarian Law (IHL), or the Law of Armed Conflict.

The U.N. Human Rights Council has, for now, called for a moratorium on the development of killer robots. But activist groups like the International Committee for Robot Arms Control (ICRAC) want to see this class of weapon completely banned. The question is whether it is too early, or too late, for a blanket prohibition. Indeed, depending on how one defines "autonomy," such systems are already in use.

From stones to arrows to ballistic missiles, human beings have always tried to curtail their direct involvement in combat, said Ronald Arkin, a computer scientist at Georgia Institute of Technology. Military robots are just more of the same. With autonomous systems, people no longer do the targeting, but they still program, activate and deploy these weapons.

"There will always be a human in the kill chain with these lethal autonomous systems unless you're making the case that they can go off and declare war like the Cylons," said Arkin, referring to the warring cyborgs from "Battlestar Galactica." He added, "I enjoy science-fiction as much as the next person, but I don't think that's what this debate should be about at this point in time."

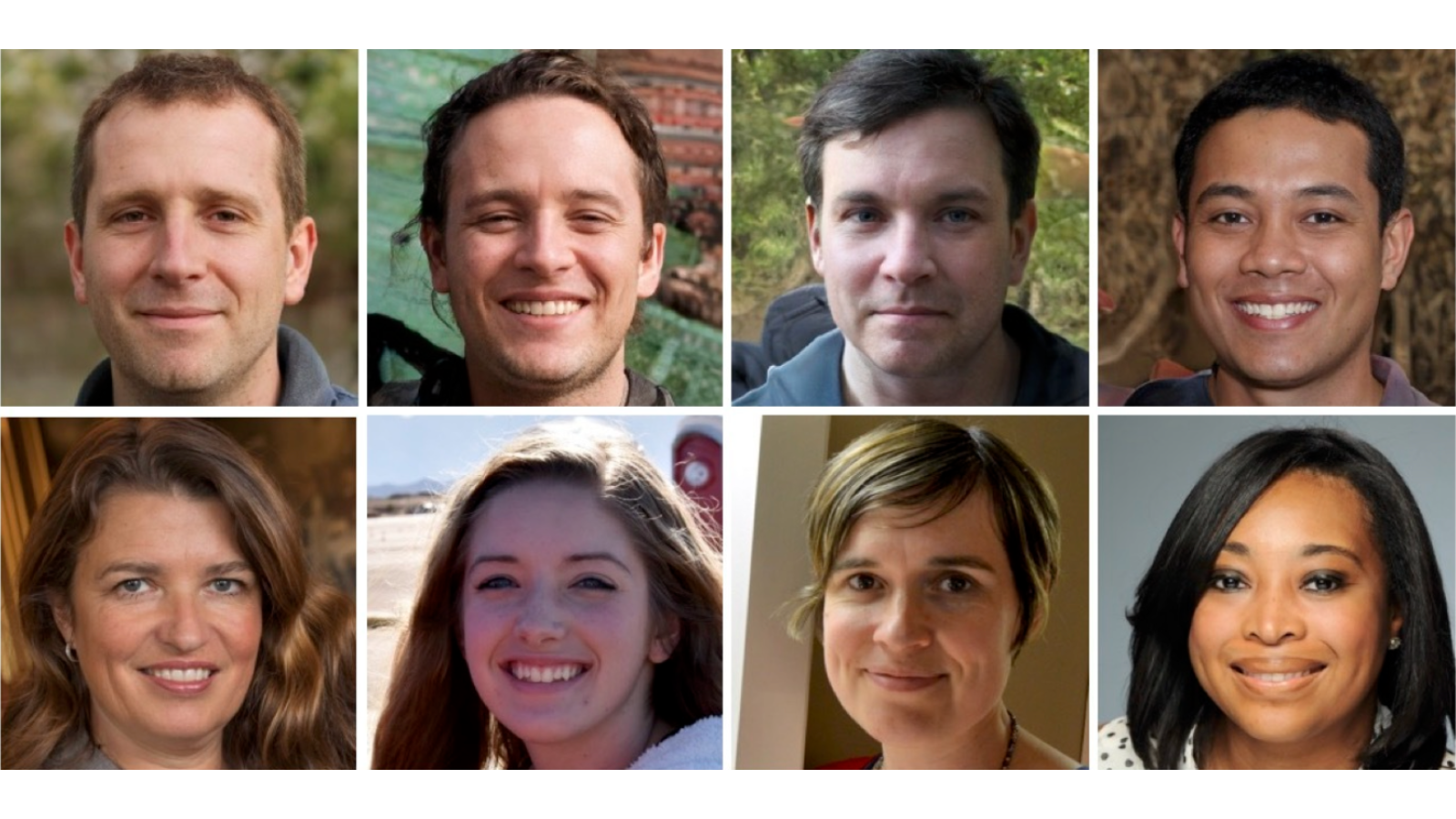

Peter Asaro, however, is not impressed with this domino theory of agency. A philosopher of science at The New School, in New York, and co-founder of ICRAC, Asaro contends robots lack "meaningful human control" in their use of deadly force. As such, killer robots would be taking the role of moral actors, a position that he doubts they are capable of fulfilling under International Humanitarian Law. That's why, he says, these systems must be banned.

Choosing targets, ranking values

According to the Law of Armed Conflict, a combatant has a duty to keep civilian casualties to a minimum. This means using weapons in a discriminating fashion and making sure that, when civilians do get killed in action, their incidental deaths are outweighed by the importance of the military objective — a calculation that entails value judgments.

In terms of assessing a battlefield scene, no technology surpasses the ability of the human eye and brain. "It's very aspirational to think that we'll get a drone that can pick a known individual out of a crowd. That's not going to happen for a long, long, long, long time," said Mary "Missy" Cummings, director of MIT's Humans and Automation Laboratory, and a former F-18 pilot.

Still, a fully autonomous aircraft would do much better than a person at, say, picking up the distinctive electronic signature of a radar signal or the low rumbling of a tank. In fact, pilots make most of their targeting errors when they try to do it by sight, Cummings told Live Science.

As for a robot deciding when to strike a target, Arkin believes that human ethical judgments can be programmed into a weapons system. In fact, he has worked on a prototype software program called the Ethical Governor, which promises to serve as an internal constraint on machine actions that would violate IHL. "It's kind of like putting a muzzle on a dog," he said.

Unsurprisingly, some have voiced considerable skepticism about the Ethical Governor, and Arkin himself supports "taking a pause" on building lethal autonomous weapons. But he doesn't agree with a wholesale ban on research "until someone can show some kind of fundamental limitation, which I don't believe exists, that the goals that researchers such as myself have established are unobtainable."

Of robots and men

Citing the grisly history of war crimes, advocates of automated killing machines argue that, in the future, these cool and calculating systems might actually be more humane than human soldiers. A robot, for example, will not gun down a civilian out of stress, anger or racial hatred, nor will it succumb to bloodlust or revenge and go on a killing spree in some village.

"If we can [develop machines that can] outperform human warfighters in terms of ethical performance … then we could potentially save civilian lives," Arkin told Live Science, "and to me, that is not only important, that is a moral imperative."

This argument is not without its logic, but it can be stretched only so far, said Jessica Wolfendale, a West Virginia University associate professor of philosophy specializing in the study of war crimes. That's because not every atrocity happens in the heat of battle, as it did when U.S. Marines killed 24 Iraqi civilians in Haditha in 2005.

Sometimes war crimes result from a specific policy "authorized by the chain of command," Wolfendale said. In such a case — think of the torture, rape and mistreatment of prisoners at Abu Ghraib in 2003-2004 — the perpetrators are following orders, not violating them. So it is hard to see how robots would function any differently than humans, she said.

Asaro also has his doubts that one could empirically prove that lethal robots would save lives. But even if that were the case, he insists allowing "computers and algorithms and mechanical processes" to take a human life is "fundamentally immoral."

This stance, while emotionally appealing, is not without its conceptual difficulties, said Paul Scharre, project director for the 20YY Warfare Initiative, at the Center for a New American Security, in Washington, D.C.

Autonomous weapons, in one sense, already exist, Scharre said. Mines blow up submarines and tanks (and the people inside) without someone pulling the trigger. The Harpy drone, developed by Israel Aerospace Industries, hunts and eliminates fire-control radars all by itself. And even the Patriot air and missile defense system, used by the United States and several other nations, can be switched into automatic mode and be used against aircraft.

Is auto-warfare inevitable?

Peter W. Singer, director of the Center for 21st Century Security and Intelligence at The Brookings Institution, a nonprofit think tank based in Washington, D.C., doubts that ICRAC's proposed ban will succeed, because it will "be fighting against the trends of science, capitalism, and warfare too."

Another major sticking point is enforcement. Autonomy is not like a chemical weapon or blinding laser; it is software buried in an otherwise normal-looking drone. Furthermore, the technology has non-violent civilian applications. The National Science Foundation, for example, is backing an effort to develop the Ethical Governor as a tool to mediate relationships between Parkinson's patients and caregivers.

Supporters of the ban grant that it won't be easy to enact, but they say such challenges shouldn't be a reason to put the ban aside. "People build chemical and biological weapons too; you don't get 100 percent conformity to the rules, but when people violate it, that's cause for sanctions," Asaro said.

Editor's note: This story was updated at 5:37 p.m. Eastern to correct the spelling of Peter Asaro's last name.

Original article on Live Science.