Decoding Music's Resonance: Researcher and Performer Parag Chordia (Op-Ed)

Jessica Gross is a freelance writer in New York City. She has contributed to the New York Times Magazine, The Paris Review Daily, Kirkus and other publications. This article was provided to Live Science in partnership with the National Endowment for the Arts for Live Science's Expert Voices: Op-Ed & Insights.

Parag Chordia has spent much of his life thinking about music, first as a performer, then as a researcher at Georgia Tech and now as a music app developer. This combination of experiences has led Chordia to pursue questions that most listeners, and even most performers, simply take for granted.

"Most of us are musicians or deeply touched by music," said Chordia of the researchers in his field. "And we also have this kind of engineering or scientific drive to understand why."

Music became a central part of Chordia's life during his high school years in South Salem, N.Y., when his father took him to his first Indian classical music concert. The experience so moved Chordia that by college, he'd decided to pursue Indian classical music performance, and took a year off from school to live in India and study the sarod, a fretless, stringed instrument. (Chordia eventually returned to school, receiving a B.S. in mathematics from Yale and a Ph.D. in artificial intelligence and music from Stanford University.)

Years later, and after a decade spent studying with renowned sarod teacher Pandit Buddhadev Das Gupta, Chordia is now an experienced performer. What's more, his intense connection to music has blossomed into a career off stage as well. Prior to taking on his current role as chief scientist for music app developer Smule, which he began last year, Chordia founded and directed the Music Intelligence Group at the Georgia Institute of Technology.

Chordia's work, partly funded by the U.S. National Science Foundation, has focused on a number of questions: How is sound produced? How can it be manipulated? How is it perceived?

Those questions have, in turn, led to further questions focused on the brain. "How does the brain organize sound, and why does it elicit the types of responses and emotions that it does?" Chordia asked. At Georgia Tech, Chordia and his colleagues wanted to better understand the connection between music and the voice.

"We said, OK, when a person is happy, their speech sounds different than when they're sad," he explained. A sad person speaks softly, slowly, often mumbles and has a darker tone. A happy person speaks more quickly and brightly. "We started to wonder, is music bootstrapping off of the same processes? In other words, are those fundamental acoustic cues being used to signify happiness and sadness in music?"

Chordia's team created an artificial melody, then shifted it to sound either slightly higher or slightly lower in pitch. One group of participants heard the higher melody, followed by the original. The second group heard the lower melody, followed by the original. So both groups heard exactly the same melody in the second position. The surprising results: The participants experienced that identical melody differently.

Those in the first group, who heard the higher melody first, described the second melody as sad, presumably because it was lower than the first sample they heard. Meanwhile, those in the second group described the second melody as happy, presumably because it was higher than the first sample they heard. The upshot was that pitch does confer emotion in music in a way that mimics people's response to vocal expression. This, Chordia explained, is why a tremolo in music registers as intense — it reminds people of the way an angry, adrenaline-spurred voice shakes.

Those findings help explain some of the power of Indian classical music, Chordia said. This kind of music overlaps with human vocal properties, which is part of what makes it "so emotive and expressive," he said.

In another takeaway, the study also showed that people's experience of music is relative to what they've heard before; that is, a person's perception of music isn't static.

Neither is music itself. Chordia explained that music strikes a remarkable balance between predictability and novelty. Humans are simultaneously attracted to both elements. On the one hand, evolutionarily speaking, accurately predicting what's to come offers a reward: If people can anticipate threats, they're in better shape than if they can't. On the other hand, the drive toward novelty is vital: If people never sought out new sources of food or new social connections, they'd be less successful.

As a result, people's reward systems kick in — that is, they experience pleasure — in both instances.

"I think what's really interesting about music is that it plays off of both these things," said Chordia, who has studied this phenomenon through computational and statistical modeling of music's structure. "One of the ways that we describe music is 'safe thrills.' It's like a roller coaster. On the one hand, you know nothing really bad is going to happen, but there are all these pleasant surprises along the way. A lot of music is like that: you set up a pattern and expectation, and then you play with it."

That might mean slightly varying the drumbeat, changing the chord pattern, or adding or removing instruments. "Those little surprises, it turns out, can be very pleasurable." They result in what Chordia calls a "supercharged stimulus."

The surprises don't just occur the first time someone hears a song, either. "If you play a segment of music 10 times," Chordia said, "at points of high surprise, there's a distinct pattern you can see in the brain, and what's interesting is that that low-level surprise doesn't disappear." Some habituation does occur, but a piece of music can give people that little jolt of surprised pleasure even if they know the tune very well.

As a performer, Chordia isn't just interested in how people perceive music. His research also investigates what happens to individuals while they play music. In one study, Chordia and his colleagues hooked trained musicians up to an EEG machine, which measures electrical activity in the brain, while the musicians played simple, familiar songs, and then improvised.

Based on preliminary data, it appears that when the musicians improvised, certain areas of their brains were actually muted. That is, rather than requiring more activity across the brain, a highly creative state benefits from fewer active areas, so that more-disparate regions can communicate with each other and create unexpected new insights. (This is perhaps one reason, Chordia suggested, that alcohol and music often go hand-in-hand.)

But making music doesn't just enable new kinds of communication within the brain; it also enables an incredible level of synchronicity among people. If you've ever sung in a chorus, attended a concert or played in a band, you probably recall the camaraderie. Chordia and his colleagues wanted to figure out whether there was a neurological basis for this sensation.

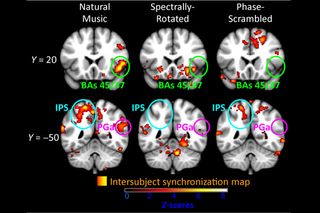

Using fMRI scans, which measure changes in neural blood flow, the researchers found that people who listened to the same piece of music had activity in similar areas of the brain at the same time. "If you think about it, this is pretty amazing," Chordia said, pointing out that fMRIs of two people talking or writing or gazing out the window together wouldn't yield this kind of coordinated brain activity. "I think our powerful intuition [about music] that it is a shared experience is true."

In recent years, Chordia's interest in the roles of performer and audience, and how the two overlap, has led to his latest endeavor: creating apps that turn listeners into performers.

In his current role at app-maker Smule, Chordia aims to encourage people who don't think of themselves as musicians to sing and play, and to help people connect with each other through music. He works to accomplish both goals using smartphones, creating app-based answers to the question, "How can we create a 21st-century folk music through technology?"

Yes, there's the irony of fighting isolation via the devices that enable it. But in another sense, Chordia's work represents a natural next step in musical evolution: every instrument is a kind of technology. Smartphones are simply a digital kind.

LaDiDa, one of Smule's apps that grew out of Chordia's academic research, creates background music for users' vocal samples, a sort of reverse karaoke. Smule's Songify app turns speech into a song, while the company's AutoRap program turns speech into rapping. Creating each app involved extensive research into the fundamentals of how music works (answering questions like, "What is rap, exactly, and how can a computer create it?").

The broader message from these kinds of apps is that everyone can sing, you included.

Other apps help advance the collaborative-music piece of Smule's mission. Sing! Karaoke allows users to perform karaoke with their friends while logged into smartphones far away from one another. And Guitar! lets users create the background music for other people's vocal samples.

Given Chordia's academic discoveries, as well as his experience playing Indian classical music, his passion for reviving shared music-making experiences isn't surprising. "Playing classical music is less about performing and more about immersing yourself in it," Chordia said.

But regardless of his work with music, both onstage and in the lab, Chordia admits that some aspects of music's emotional resonance may never be fully understood. "At the most fundamental level," he said, "my research really stems from this question: Why are we as humans so attracted to musical sounds? What is it about music that moves us? Why does this abstract pattern of sonic activity give rise to some of our most cherished human emotions? It's really weird, actually, if you think about it."

The NEA is committed to encouraging work at the intersection of art, science and technology through its funding programs, research, and online as well as print publications. The views expressed are those of the author and do not necessarily reflect the views of the publisher. This version of the article was originally published on Live Science.