Robots 'See' Objects with High-Tech Fingertip Sensor

Some robots can swim. Others can kick, fetch, jump or fly. But the latest development in the field of robotics lets machines carry out an activity that is somewhat less athletic: plugging in a USB cord.

Performing this mundane task may not sound all that difficult to humans, but getting a robot to maneuver an object into a small port is a big deal, said researchers at the Massachusetts Institute of Technology (MIT) and Northeastern University in Boston.

The technology that makes this kind of precision possible is a sensor that, with help from computer algorithms, lets a robot "see" the shape and size of an object it holds in its grasp. Known as GelSight, the high-tech sensor is about 100 times more sensitive than a human finger, the researchers said.

The sensor uses embedded lights and an onboard camera (tools usually associated with seeing an object, not feeling it) to tell a robot what it is holding in its grasp. Edward Adelson, a professor of vision science at MIT, first conceived of GelSight in 2009.

"Since I'm a vision guy, the most sensible thing, if you wanted to look at the signals coming into the finger, was to figure out a way to transform the mechanical, tactile signal into a visual signal — because if it's an image, I know what to do with it," Adelson said in a statement.

GelSight is made up of a synthetic rubber material that conforms to the shape of any object pressed against it. To better even out the light-reflecting properties of the diverse materials it comes into contact with, the rubber sensor is coated with metallic paint.

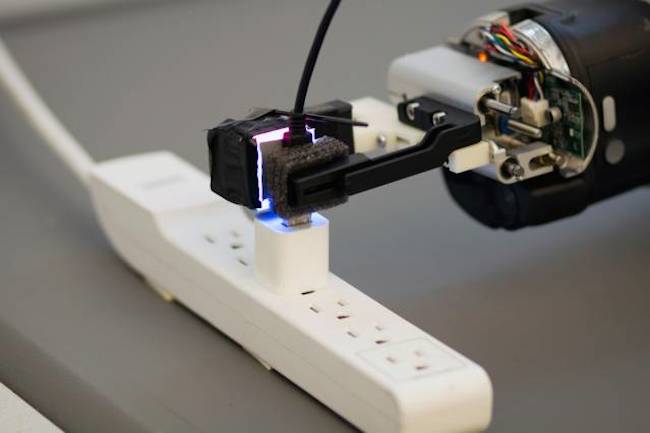

In the latest version of the GelSight sensor, researchers attached the painted rubber sensor to a robot's gripper, a type of mechanical hand with just two digits. Researchers mounted the sensor inside a transparent plastic cube on one of the digits. Each wall of the plastic cube contains a tiny light-emitting diode (LED) that produces a different color of light (red, green, blue or white).

When an object is pressed against the rubber sensor, the rubber deforms around it, and the colored lights strike the painted surface from different directions. A tiny camera mounted on the robot gripper captures the intensity of each color of light reflected off the deformed rubber and feeds the data into a computer algorithm. The algorithm converts this visual information into mechanical information, telling the robot the three-dimensional measurements of the object in its grasp.
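
The article does not spell out the researchers' algorithm, but recovering shape from the brightness of differently colored lights is conceptually similar to a classic technique called photometric stereo. The sketch below is a minimal illustration under loudly stated assumptions: the surface reflects light like an ideal matte (Lambertian) material, there are exactly three lights (one per red, green and blue channel, ignoring the white one), and their directions are hypothetical values chosen for the example. A real sensor would need calibration that this sketch omits.

```python
import math

# ASSUMPTION: hypothetical light directions (unit vectors from the surface toward
# each light), one per color channel, spaced 120 degrees apart around the gel.
TILT = math.radians(45.0)  # angle of each light away from straight overhead
LIGHTS = [
    [math.sin(TILT) * math.cos(math.radians(a)),
     math.sin(TILT) * math.sin(math.radians(a)),
     math.cos(TILT)]
    for a in (0.0, 120.0, 240.0)  # red, green, blue
]


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, b):
    """Solve m @ x = b for a 3x3 system using Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("light directions must be linearly independent")
    x = []
    for col in range(3):
        replaced = [row[:] for row in m]
        for r in range(3):
            replaced[r][col] = b[r]
        x.append(det3(replaced) / d)
    return x


def shade(normal, albedo, lights=LIGHTS):
    """Forward model: matte brightness of one pixel under each colored light."""
    return [albedo * max(0.0, sum(l * n for l, n in zip(light, normal)))
            for light in lights]


def recover_normal(intensities, lights=LIGHTS):
    """Invert the forward model: the three intensities give albedo * normal."""
    g = solve3(lights, intensities)
    albedo = math.sqrt(sum(c * c for c in g))
    return [c / albedo for c in g], albedo


def slopes(normal):
    """Surface gradients dz/dx and dz/dy implied by a unit normal."""
    nx, ny, nz = normal
    return -nx / nz, -ny / nz


def integrate_row(slopes_x, dx=1.0):
    """Turn per-pixel slopes along one scanline into relative heights."""
    heights = [0.0]
    for p in slopes_x:
        heights.append(heights[-1] + p * dx)
    return heights


# Usage: simulate one pixel, then recover its surface orientation.
true_normal = normalize([0.1, -0.2, 1.0])
rgb = shade(true_normal, albedo=0.8)
estimated_normal, estimated_albedo = recover_normal(rgb)
print(estimated_normal, estimated_albedo, slopes(estimated_normal))
```

The metallic paint matters for exactly this kind of calculation: a uniform coating means the brightness depends on the surface's tilt rather than on what the touched object is made of. A full sensor would repeat the per-pixel step across the whole camera image and integrate the slopes in two dimensions; the single-scanline `integrate_row` is a deliberate simplification.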

In recent tests, a robot was able to use the GelSight sensor to plug a USB cord (the same kind of cord you might plug into your laptop to connect it to a printer or data storage device) into a USB port. The robot first used its own vision system to locate a USB cord dangling from a hook. Once the robot grasped the cord, the GelSight sensor measured the USB plug in its grip, and the system then computed the distance between the plug's position and the port's position.
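
The article only says the robot computed the distance between the plug and the port. One common way to do that kind of bookkeeping (an assumption here, not the team's reported method) is to chain coordinate frames: the arm knows where its gripper is, the touch sensor reports where the plug sits inside the grip, and combining the two gives the plug's position in the world, which can be compared with the port's position. The sketch below simplifies the problem to a flat plane, and the names `Pose2D` and `insertion_correction` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Planar pose: position (x, y) and heading theta in radians."""
    x: float
    y: float
    theta: float

    def compose(self, local):
        """Express a pose given in this frame (`local`) in the parent frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(
            self.x + c * local.x - s * local.y,
            self.y + s * local.x + c * local.y,
            self.theta + local.theta,
        )


def wrap_angle(angle):
    """Wrap an angle into the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def insertion_correction(gripper_in_world, plug_in_gripper, port_in_world):
    """Offset still needed so the plug lines up with the port.

    gripper_in_world: where the arm believes its gripper is.
    plug_in_gripper:  where the touch sensor says the plug sits inside the grip.
    port_in_world:    where the vision system located the port.
    """
    plug_in_world = gripper_in_world.compose(plug_in_gripper)
    return (
        port_in_world.x - plug_in_world.x,
        port_in_world.y - plug_in_world.y,
        wrap_angle(port_in_world.theta - plug_in_world.theta),
    )


# Usage: gripper rotated 90 degrees, plug sitting 0.1 units forward in the grip.
gripper = Pose2D(1.0, 2.0, math.pi / 2)
plug = Pose2D(0.1, 0.0, 0.0)
port = Pose2D(1.0, 2.5, math.pi / 2)
print(insertion_correction(gripper, plug, port))
```

The point of the example is why the touch sensor helps: without `plug_in_gripper`, the robot would have to assume the plug always sits in the same spot in its grip, and any slip would go straight into the insertion error.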

The team repeated the USB experiment with the same robot, but without a GelSight sensor, and the machine wasn't able to maneuver the cord into the USB port.

"Having a fast optical sensor to do this kind of touch sensing is a novel idea, and I think the way that they're doing it with such low-cost components — using just basically colored LEDs and a standard camera — is quite interesting," Daniel Lee, a professor of electrical and systems engineering at the University of Pennsylvania, who was not involved with the experiments, said in a statement.

Other tactile robotic sensors take a different approach to touch sensing, using tools such as barometers to gauge an object's size, Lee said. Industrial robots, for example, contain sensors that can measure objects with remarkable precision, but they can only do so when the objects they need to touch are perfectly positioned ahead of time, the researchers said.

With a GelSight sensor, a robot receives and interprets information about what it's touching in real time, which makes the robot more adaptable, said Robert Platt, an assistant professor of computer science at Northeastern and the research team's robotics expert.

"People have been trying to do this for a long time, and they haven't succeeded, because the sensors they're using aren't accurate enough and don't have enough information to localize the pose of the object that they're holding," Platt said.

Original article on Live Science.

Elizabeth is a former Live Science associate editor and current director of audience development at the Chamber of Commerce. She graduated with a bachelor of arts degree from George Washington University. Elizabeth has traveled throughout the Americas, studying political systems and indigenous cultures and teaching English to students of all ages.