"X-ray vision" that can track people's movements through walls using radio signals could be the future of smart homes, gaming and health care, researchers say.

A new system built by computer scientists at MIT can beam out radio waves that bounce off the human body. Receivers then pick up the reflections, which are processed by computer algorithms to map people’s movements in real time, they added.

Unlike other motion-tracking devices, the new system takes advantage of the fact that radio signals with short wavelengths can travel through walls. This allowed the system, dubbed RF-Capture, to identify 15 different people through a wall with nearly 90 percent accuracy, the researchers said. The RF-Capture system could even track their movements to within 0.8 inches (2 centimeters).

Researchers say this technology could have applications as varied as gesture-controlled gaming devices that rival Microsoft's Kinect system, motion capture for special effects in movies, or even the monitoring of hospital patients' vital signs.

"It basically lets you see through walls," said Fadel Adib, a Ph.D. student at MIT's Computer Science and Artificial Intelligence Lab and lead author of a new paper describing the system. "Our resolution is still nowhere near what optical systems can give you, but over the last three years, we have moved from being able to detect someone behind a wall and sense coarse movement, to today, where you can see roughly what a person looks like and even get a person’s breathing and heart rate."

The team, led by Dina Katabi, a professor of electrical engineering and computer science at MIT, has been developing wireless tracking technologies for a number of years. In 2013, the researchers used Wi-Fi signals to detect humans through walls and track the direction of their movement.

The new system, unveiled at the SIGGRAPH Asia conference held from Nov. 2 to Nov. 5 in Japan, uses radio waves that are 1,000 times less powerful than Wi-Fi signals. Adib said improved hardware and software make RF-Capture a far more powerful tool overall.

"These [radio waves used by RF-Capture] produce a much weaker signal, but we can extract far more information from them because they are structured specifically to make this possible," Adib told Live Science.

The system uses a T-shaped antenna array the size of a laptop that features four transmitters along the vertical section and 16 receivers along the horizontal section. The array is controlled from a standard computer with a powerful graphics card, which is used to analyze data, the researchers said.

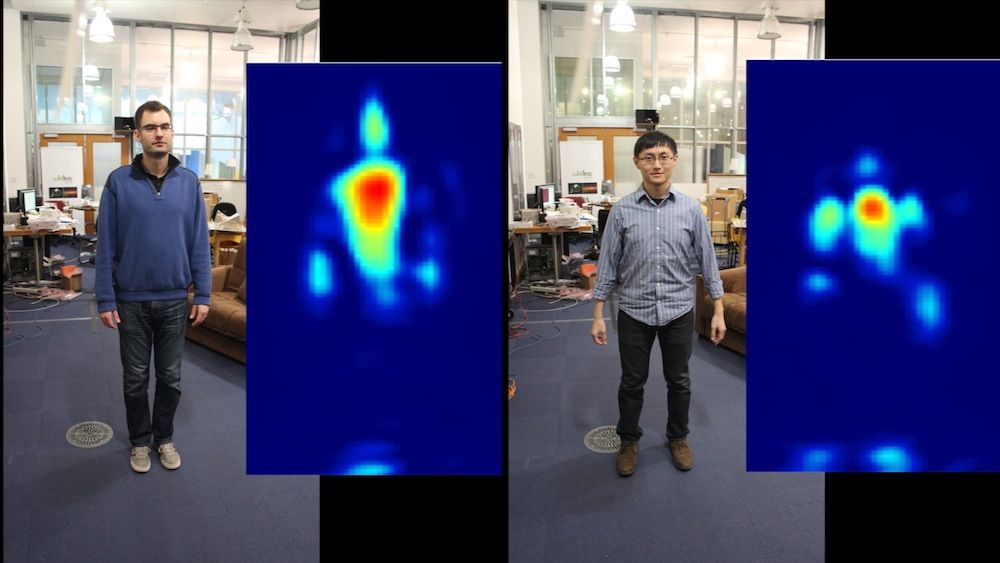

Because inanimate objects also reflect signals, the system starts by scanning for static features and removes them from its analysis. Then, it takes a series of snapshots, looking for reflections that vary over time, which represent moving human body parts.
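
The static-removal step can be illustrated with a minimal Python sketch. It assumes, hypothetically, that each snapshot is a list of reflection strengths, one per spatial bin. Walls and furniture reflect the same way from one snapshot to the next, so subtracting consecutive snapshots cancels them out, and only bins whose reflection changes (moving body parts) survive. The names `remove_static` and `moving_bins` and the 0.5 threshold are illustrative assumptions, not RF-Capture's actual code.

```python
from typing import List, Set

# Hypothetical representation: one reflection strength per spatial bin.
Frame = List[float]


def remove_static(frames: List[Frame]) -> List[Frame]:
    """Subtract each snapshot from the next; static reflectors cancel to ~0."""
    diffs = []
    for prev, curr in zip(frames, frames[1:]):
        diffs.append([c - p for p, c in zip(prev, curr)])
    return diffs


def moving_bins(frames: List[Frame], threshold: float = 0.5) -> List[Set[int]]:
    """For each pair of consecutive snapshots, return the bins whose reflection changed."""
    return [
        {i for i, d in enumerate(diff) if abs(d) > threshold}
        for diff in remove_static(frames)
    ]


if __name__ == "__main__":
    # Bin 0 is a wall (never changes); bins 1 and 2 are a moving arm.
    frames = [[5.0, 2.0, 0.0], [5.0, 2.0, 1.0], [5.0, 3.0, 1.0]]
    print(moving_bins(frames))  # → [{2}, {1}]
```

The wall in bin 0 never shows up, while the moving bins are flagged in whichever snapshot they change.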

However, unless a person’s body parts are at just the right angle in relation to the antenna array, they will not redirect the transmitted beams back to the sensors. This means each snapshot captures only some of their body parts, and which ones are captured varies from frame to frame. "In comparison with light, every part of the body reflects the signal back, and that's why you can recover exactly what the person looks like using a camera," Adib said. "But with [radio waves], only a subset of body parts reflect the signal back, and you don't even know which ones."

The solution is an intelligent algorithm that can identify body parts across snapshots and use a simple model of the human skeleton to stitch them together to create a silhouette, the researchers said. But scanning the entire 3D space around the antenna array takes a lot of computing power, so to simplify things, the researchers borrowed concepts from military radar systems that can lock onto and track targets.
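
One simplified way to picture the stitching step follows. It is a hypothetical sketch, not the researchers' algorithm: it assumes each snapshot already labels the body parts it caught, keeps the most recent position seen for each part, and uses a made-up skeleton (each part's parent and a maximum distance to that parent) to discard detections that could not belong to the same body. The `SKELETON` table, its distances and the `stitch` function are all illustrative assumptions.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in meters

# Hypothetical simplified skeleton: part -> (parent part, max distance to parent in meters).
SKELETON: Dict[str, Tuple[Optional[str], float]] = {
    "torso": (None, 0.0),
    "head": ("torso", 0.6),
    "left_hand": ("torso", 1.0),
    "right_hand": ("torso", 1.0),
    "left_foot": ("torso", 1.2),
    "right_foot": ("torso", 1.2),
}


def stitch(snapshots: List[Dict[str, Point]]) -> Dict[str, Point]:
    """Merge partial per-snapshot detections into a single body estimate."""
    body: Dict[str, Point] = {}
    for snap in snapshots:
        for part, pos in snap.items():
            if part in SKELETON:
                body[part] = pos  # the most recent sighting of each part wins
    # Keep only parts that sit a plausible distance from their parent part.
    consistent: Dict[str, Point] = {}
    for part, pos in body.items():
        parent, max_len = SKELETON[part]
        if parent is None or (parent in body and math.dist(pos, body[parent]) <= max_len):
            consistent[part] = pos
    return consistent


if __name__ == "__main__":
    snapshots = [
        {"torso": (0.0, 0.0, 1.0), "left_hand": (0.5, 0.0, 1.0)},  # frame 1
        {"head": (0.0, 0.0, 1.5)},                                 # frame 2
        {"right_hand": (3.0, 0.0, 1.0)},                           # frame 3: implausible
    ]
    print(sorted(stitch(snapshots)))  # → ['head', 'left_hand', 'torso']
```

No single snapshot contains the whole body, but the merged estimate does; the right-hand detection, 3 meters from the torso, is rejected as inconsistent with the skeleton.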

Using a so-called "coarse-to-fine" algorithm, the system starts by using a small number of antennas to scan broad areas and then gradually increases the number of antennas in order to zero in on areas of strong reflection that represent body parts, while ignoring the rest of the room.
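
The coarse-to-fine idea can be sketched as a search that refines only promising regions. RF-Capture sharpens its focus by bringing in more antennas; this Python sketch swaps in a simpler spatial analogue, repeatedly splitting a 2D area into quadrants and keeping only the few cells with the strongest reflection. The `reflection` function, the `keep` and `levels` parameters and the square-grid layout are assumptions for illustration only.

```python
from typing import Callable, List, Tuple

Cell = Tuple[float, float, float]  # (x_center, y_center, half_width)


def coarse_to_fine(
    reflection: Callable[[float, float], float],
    center: Tuple[float, float],
    half_width: float,
    levels: int = 4,
    keep: int = 2,
) -> List[Tuple[float, float]]:
    """Subdivide only the strongest cells at each level instead of scanning the full area."""
    cells: List[Cell] = [(center[0], center[1], half_width)]
    for _ in range(levels):
        children: List[Cell] = []
        for x, y, h in cells:
            q = h / 2
            # Split each surviving cell into four quadrants.
            children += [(x - q, y - q, q), (x + q, y - q, q),
                         (x - q, y + q, q), (x + q, y + q, q)]
        # Keep the strongest-reflecting cells and ignore the rest of the room.
        children.sort(key=lambda c: reflection(c[0], c[1]), reverse=True)
        cells = children[:keep]
    # Centers of the surviving cells, strongest first.
    return [(x, y) for x, y, _ in cells]


if __name__ == "__main__":
    # Hypothetical reflection map with a single strong return near (1.2, -0.7).
    peak = lambda x, y: -((x - 1.2) ** 2 + (y + 0.7) ** 2)
    print(coarse_to_fine(peak, center=(0.0, 0.0), half_width=4.0, levels=6))
```

Each level evaluates at most 4 × `keep` cells, whereas scanning the whole area at the finest resolution would take 4 ** `levels` evaluations; that saving is what the radar-style approach is after.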

This approach allows the system to identify which body part a person moved, with 99 percent accuracy, from about 10 feet (3 meters) away and through a wall. It could also trace letters that individuals wrote in the air by tracking the movement of their palms to within fractions of an inch (just a couple of centimeters).

Currently, RF-Capture can only track people who are directly facing the sensors, and it can't perform full skeletal tracking as traditional motion-capture solutions can. But Adib said that introducing a more complex model of the human body, or increasing the number of arrays, could help overcome these limitations.

The system costs just $200 to $300 to build, and the MIT team is already in the process of applying the technology to its first commercial application — a product called Emerald that is designed to detect, predict and prevent falls among the elderly.

"This is the first application that's going to hit the market," Adib said. "But once you have a device and lots of people are using it, the cost of producing such a device immediately gets reduced, and once it's reduced, you can use it for even more applications."

The initial applications of the technology are likely to be in health care, and the team will soon be deploying the technology in a hospital ward to monitor the breathing patterns of patients suffering from sleep apnea. But as the resolution of the technology increases, Adib said, it could open up a host of applications in gesture control and motion capture.

"We still have a long path to go before we can get to that kind of level of fidelity," he added. "There are a lot of technical challenges that still need to be overcome. But I think over the next few years, these systems are going to significantly evolve to do that."

Original article on Live Science.