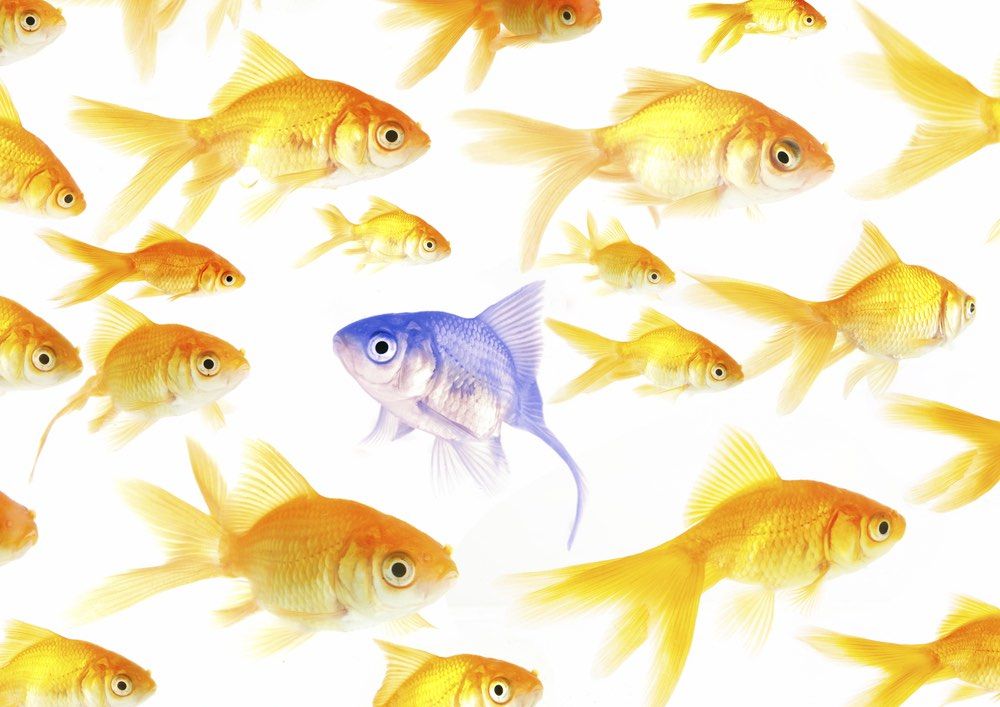

Why People Consider 'Normal' to Be 'Good'

The Binewskis are no ordinary family. Arty has flippers instead of limbs; Iphy and Elly are Siamese twins; Chick has telekinetic powers. These traveling circus performers see their differences as talents, but others consider them freaks with “no values or morals.” However, appearances can be misleading: The true villain of the Binewski tale is arguably Miss Lick, a physically “normal” woman with nefarious intentions.

Much like the fictional characters of Katherine Dunn’s “Geek Love,” everyday people often mistake normality for a criterion of morality. Yet freaks and norms alike may find themselves anywhere along the good/bad continuum. Still, people use what’s typical as a benchmark for what’s good, and are often averse to behavior that goes against the norm. Why?

In a series of studies, psychologist Andrei Cimpian and I investigated why people use the status quo as a moral codebook – a way to decipher right from wrong and good from bad. Our inspiration for the project was philosopher David Hume, who pointed out that people tend to allow the status quo (“what is”) to guide their moral judgments (“what ought to be”). Just because a behavior or practice exists, that doesn’t mean it’s good – but that’s exactly how people often reason. Slavery and child labor, for example, were and still are common in some parts of the world, but their existence doesn’t make them right or OK. We wanted to understand the psychology behind the reasoning that prevalence is grounds for moral goodness.

To examine the roots of such “is-to-ought inferences,” we turned to a basic element of human cognition: how we explain what we observe in our environments. From a young age, we try to understand what’s going on around us, and we often do so by explaining. Explanations are at the root of many deeply held beliefs. Might people’s explanations also influence their beliefs about right and wrong?

Quick shortcuts to explain our environment

When coming up with explanations to make sense of the world around us, the need for efficiency often trumps the need for accuracy. (People don’t have the time and cognitive resources to strive for perfection with every explanation, decision or judgment.) Under most circumstances, they just need to quickly get the job done, cognitively speaking. When faced with an unknown, an efficient detective takes shortcuts, relying on simple information that comes to mind readily.

More often than not, what comes to mind first tends to involve “inherent” or “intrinsic” characteristics of whatever is being explained.

For example, if I’m explaining why men and women have separate public bathrooms, I might first say it’s because of the anatomical differences between the sexes. The tendency to explain using such inherent features often leads people to ignore other relevant information about the circumstances or the history of the phenomenon being explained. In reality, public bathrooms in the United States became segregated by gender only in the late 19th century – not as an acknowledgment of the different anatomies of men and women, but rather as part of a series of political changes that reinforced the notion that women’s place in society was different from that of men.

Testing the link

We wanted to know if the tendency to explain things based on their inherent qualities also leads people to value what’s typical.

To test whether people’s preference for inherent explanations is related to their is-to-ought inferences, we first asked our participants to rate their agreement with a number of inherent explanations: For example, girls wear pink because it’s a dainty, flower-like color. This served as a measure of participants’ preference for inherent explanations.

In another part of the study, we asked people to read mock press releases that reported statistics about common behaviors. For example, one stated that 90 percent of Americans drink coffee. Participants were then asked whether these behaviors were “good” and “as it should be.” That gave us a measure of participants’ is-to-ought inferences.

These two measures were closely related: People who favored inherent explanations were also more likely to think that typical behaviors are what people should do.

We tend to see the commonplace as good and how things should be. For example, if I think public bathrooms are segregated by gender because of the inherent differences between men and women, I might also think this practice is appropriate and good (a value judgment).

This relationship was present even when we statistically adjusted for a number of other cognitive or ideological tendencies. We wondered, for example, if the link between explanation and moral judgment might be accounted for by participants’ political views. Maybe people who are more politically conservative view the status quo as good, and also lean toward inherence when explaining? This alternative was not supported by the data, however, and neither were any of the others we considered. Rather, our results revealed a unique link between explanation biases and moral judgment.
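To make "statistically adjusted" concrete, here is a minimal sketch of one common way to do it: a partial correlation, which asks how strongly two measures are related once a third has been accounted for. The article does not say which statistical method the studies used, so the choice of technique is an assumption, and the scores and variable names below are made up purely for illustration. They are not study data.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def partial_corr(x, y, z):
    """Correlation between x and y after adjusting for a third variable z."""
    r_xy = pearson(x, y)
    r_xz = pearson(x, z)
    r_yz = pearson(y, z)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical scores for eight imaginary participants (NOT study data).
inherence = [2, 3, 4, 4, 5, 6, 6, 7]      # agreement with inherent explanations
is_to_ought = [1, 3, 3, 5, 4, 6, 7, 7]    # "typical behaviors are good" ratings
conservatism = [4, 2, 5, 3, 4, 2, 5, 3]   # self-reported political conservatism

raw = pearson(inherence, is_to_ought)
adjusted = partial_corr(inherence, is_to_ought, conservatism)
print(f"raw correlation:      {raw:.2f}")
print(f"adjusted correlation: {adjusted:.2f}")
```

If the adjusted value stays close to the raw one, the third variable is not what drives the relationship. That is the pattern described above, where political views did not account for the link.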

A built-in bias affecting our moral judgments

We also wanted to find out at what age the link between explanation and moral judgment develops. The earlier in life this link is present, the greater its influence may be on the development of children’s ideas about right and wrong.

From prior work, we knew that the bias to explain via inherent information is present even in 4-year-old children. Preschoolers are more likely to think that brides wear white at weddings, for example, because of something about the color white itself, and not because of a fashion trend people just decided to follow.

Does this bias also affect children’s moral judgment?

Indeed, as we found with adults, 4- to 7-year-old children who favored inherent explanations were also more likely to see typical behaviors (such as boys wearing pants and girls wearing dresses) as being good and right.

If what we’re claiming is correct, changes in how people explain what’s typical should change how they think about right and wrong. When people have access to more information about how the world works, it might be easier for them to imagine the world being different. In particular, if people are given explanations they may not have considered initially, they may be less likely to assume “what is” equals “what ought to be.”

Consistent with this possibility, we found that by subtly manipulating people’s explanations, we could change their tendency to make is-to-ought inferences. When we put adults in what we call a more “extrinsic” (and less inherent) mindset, they were less likely to think that common behaviors are necessarily what people should do. The same held for children: they were less likely to view the status quo (brides wear white) as good and right when they were given an external explanation for it (a popular queen long ago wore white at her wedding, and then everyone started copying her).

Implications for social change

Our studies reveal some of the psychology behind the human tendency to make the leap from “is” to “ought.” Although there are probably many factors that feed into this tendency, one of its sources seems to be a simple quirk of our cognitive systems: the early emerging bias toward inherence that’s present in our everyday explanations.

This quirk may be one reason why people – even very young ones – have such harsh reactions to behaviors that go against the norm. For matters pertaining to social and political reform, it may be useful to consider how such cognitive factors lead people to resist social change.

Christina Tworek, Ph.D. Student in Developmental Psychology, University of Illinois at Urbana-Champaign

This article was originally published on The Conversation.