Beyond 'Pokémon Go': Future Games Could Interact with Real Objects

The augmented-reality game "Pokémon Go" may be the hottest thing in mobile gaming right now, but new advances in computer science could give players an even more realistic experience in the future, according to a new study. In fact, researchers say a new imaging technique could help make imaginary characters, such as Pokémon, appear to convincingly interact with real objects.

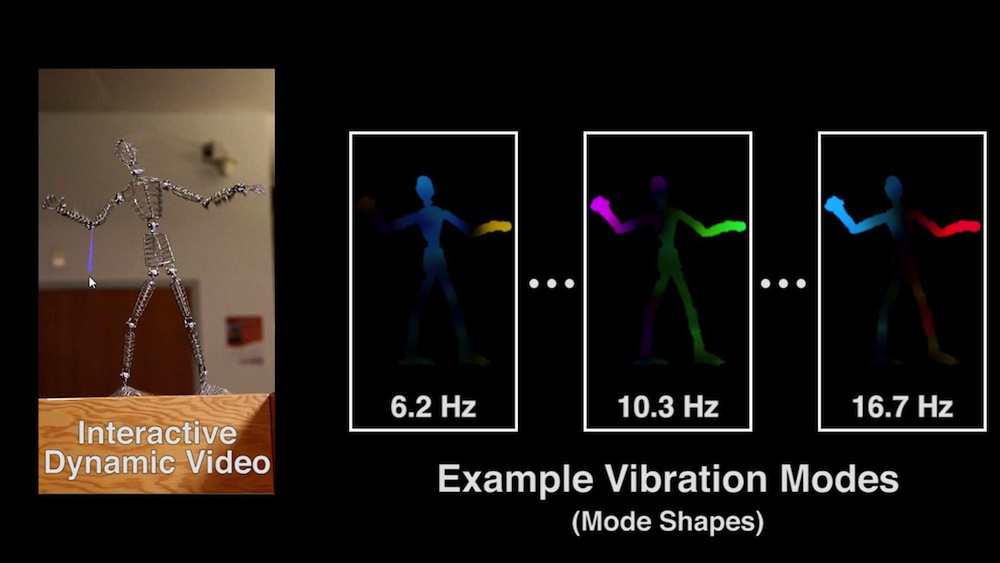

A new imaging technique called Interactive Dynamic Video can take pictures of real objects and quickly create video simulations that people, or 3D models, can virtually interact with, the researchers said. In addition to fueling game development, these advances could help simulate how real bridges and buildings might respond to potentially disastrous situations, the researchers added.

The smartphone game "Pokémon Go" superimposes images onto the real world to create a mixed reality. The popularity of this game follows a decades-long trend of computer-generated imagery weaving its way into movies and TV shows. However, while 3D models that can move amid real surroundings on video screens are now commonplace, making computer-generated images look as if they are interacting with real objects remains a challenge. Building 3D models of real items is expensive, and can be nearly impossible for many objects, the researchers said.

Now, Interactive Dynamic Video could bridge that gap, the researchers said.

"When I came up with and tested the technique, I was surprised that it worked quite so well," said study lead author Abe Davis, a computer scientist at the Computer Science and Artificial Intelligence Laboratory at the Massachusetts Institute of Technology.

Analyzing movement

Using cameras, this new technique analyzes tiny, almost imperceptible vibrations of an object. For instance, when it comes to curtains, "it turns out they are almost always moving, just from natural air currents in an indoor room," Davis told Live Science.

The distinct ways or "modes" in which an object vibrates help computers model how it might physically behave if an outside force were to interact with it. "Most objects can vibrate and move a certain amount without a permanent change to their shape," Davis said. "To give you an example, I can tap on a branch of a tree, and it might shake, but that's different from bending it until it snaps. We observe these kinds of motions, the kind that an object bounces back from to return to a resting state."
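To make the idea of vibration "modes" concrete, here is a minimal sketch in Python. It is not the researchers' algorithm, which works on video and is not described in detail here. It only illustrates the general principle that a recording of small motions can reveal the frequencies at which an object tends to vibrate. The motion signal is synthetic, and the 30-frames-per-second rate, the 5-second duration, the 2 Hz and 5 Hz modes, and all function names are assumptions chosen for illustration.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Magnitudes of a naive discrete Fourier transform (non-negative bins only)."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        total = 0j
        for t, x in enumerate(samples):
            total += x * cmath.exp(-2j * math.pi * k * t / n)
        mags.append(abs(total))
    return mags


def dominant_frequencies(samples, sample_rate, count):
    """Return the `count` strongest spectral peaks in Hz, strongest first."""
    n = len(samples)
    mags = dft_magnitudes(samples)
    # A peak is a bin larger than both neighbours (the constant offset bin is skipped).
    peaks = [k for k in range(1, len(mags) - 1)
             if mags[k] > mags[k - 1] and mags[k] > mags[k + 1]]
    peaks.sort(key=lambda k: mags[k], reverse=True)
    return [k * sample_rate / n for k in peaks[:count]]


def synthetic_motion(sample_rate=30, seconds=5):
    """Hypothetical displacement of one point on an object: two undamped modes."""
    n = sample_rate * seconds
    return [1.0 * math.sin(2 * math.pi * 2.0 * i / sample_rate)
            + 0.4 * math.sin(2 * math.pi * 5.0 * i / sample_rate)
            for i in range(n)]


if __name__ == "__main__":
    motion = synthetic_motion()
    print(dominant_frequencies(motion, sample_rate=30, count=2))
```

In this toy case the two strongest peaks land at the 2 Hz and 5 Hz modes that were built into the signal. A real object would also show damping and noise, and a simulation would need the shape of each mode across the image, not just its frequency.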

In experiments, Davis used this new technique on images of a variety of items, including a bridge, jungle gym and ukulele. With a few clicks of his mouse, Davis showed that he could push and pull these images in different directions. He even showed that he could make it look as if he could telekinetically control the leaves of a bush.

Even 5 seconds of video of a vibrating object is enough to create a realistic simulation of it, the researchers said. The amount of time needed depends on the size and directions of the vibrations, the scientists said.

"In some cases, natural motions will not be enough, or maybe natural motions will only involve certain ways an object can move," Davis said. "Fortunately, if you just whack on an object, that kind of sudden force tends to activate a whole bunch of ways an object can move all at once."

New tools

Davis and his colleagues said this new technique has many potential uses in entertainment and engineering.

For example, Interactive Dynamic Video could help virtual characters, such as those in "Pokémon Go," interact with their surroundings in specific, realistic ways, such as bouncing off the leaves of a nearby bush. It could also help filmmakers create computer-generated characters that realistically interact with their environments. And this could be done in much less time and at a fraction of the cost that it would take using current methods that require green screens and detailed models of virtual objects, Davis said.

"Computer graphics allow us to use 3D models to build interactive simulations, but the techniques can be complicated," Doug James, a professor of computer science at Stanford University in California, who did not take part in this research, said in a statement. "Davis and his colleagues have provided a simple and clever way to extract a useful dynamics model from very tiny vibrations in video, and shown how to use it to animate an image."

Major structures such as buildings and bridges also vibrate. Engineers can use Interactive Dynamic Video to simulate how such structures might respond to strong winds or an earthquake, the researchers said.

"Cameras can not only just capture the appearance of an object, but also their physical behavior," Davis said.

But the new technique does have limitations. For example, it cannot handle objects that appear to change their shape too much, such as a person walking down the street, Davis said. Additionally, in their experiments, the researchers used a stationary camera mounted on a tripod; there are many technical hurdles to overcome before this method can be applied using a smartphone camera that might be held in a shaky hand, they said.

"Also, sometimes it takes a while to process a video to generate a simulation, so there are a lot of challenges to address before this can work on the fly in an app like 'Pokémon Go,'" Davis said. "Still, what we showed with our work is that this approach is viable."

Davis will publish this work later in August as part of his doctoral dissertation.

Original article on Live Science.