A new artificial intelligence system can take still images and generate short videos that simulate what happens next, similar to how humans can visually imagine how a scene will evolve, according to a new study.

Humans intuitively understand how the world works, which makes it easier for people than for machines to envision how a scene will play out. Objects in a still image could move and interact in a multitude of ways, making this feat very hard for machines, the researchers said. But a new, so-called deep-learning system produced videos that fooled human viewers 20 percent of the time when they were compared against real footage.

Researchers at the Massachusetts Institute of Technology (MIT) pitted two neural networks against each other, with one trying to distinguish real videos from machine-generated ones, and the other trying to create videos that were realistic enough to trick the first system.

This kind of setup is known as a "generative adversarial network" (GAN), and competition between the systems results in increasingly realistic videos. When the researchers asked workers on Amazon’s Mechanical Turk crowdsourcing platform to pick which videos were real, the users picked the machine-generated videos over genuine ones 20 percent of the time, the researchers said.
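The tug-of-war between the two networks can be made concrete with a small sketch. This is not the MIT team's code: it assumes the common cross-entropy form of the GAN objective, and the function names and sample scores are illustrative. A score is the discriminator's estimate, between 0 and 1, that a video is real.

```python
import math

EPS = 1e-12  # guards against log(0)

def discriminator_loss(real_scores, fake_scores):
    """Low when real videos score near 1 and generated videos score near 0."""
    real_term = -sum(math.log(s + EPS) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s + EPS) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term

def generator_loss(fake_scores):
    """Low when the discriminator is fooled into scoring fakes near 1."""
    return -sum(math.log(s + EPS) for s in fake_scores) / len(fake_scores)

# Hypothetical scores: a sharp discriminator versus one that is guessing.
sharp = discriminator_loss(real_scores=[0.9], fake_scores=[0.1])
guessing = discriminator_loss(real_scores=[0.5], fake_scores=[0.5])
print(sharp < guessing)                              # sharper judge, lower loss
print(generator_loss([0.9]) < generator_loss([0.1])) # better fakes, lower loss
```

Each network improves by lowering its own loss, and lowering one tends to raise the other, which is why the competition pushes the generated videos toward realism.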

Early stages

Still, budding film directors probably don’t need to be too concerned about machines taking over their jobs yet — the videos were only 1 to 1.5 seconds long and were made at a resolution of 64 x 64 pixels. But the researchers said that the approach could eventually help robots and self-driving cars navigate dynamic environments and interact with humans, or let Facebook automatically tag videos with labels describing what is happening.

"Our algorithm can generate a reasonably realistic video of what it thinks the future will look like, which shows that it understands at some level what is happening in the present," said Carl Vondrick, a Ph.D. student in MIT’s Computer Science and Artificial Intelligence Laboratory, who led the research. "Our work is an encouraging development in suggesting that computer scientists can imbue machines with much more advanced situational understanding."

The system is also able to learn unsupervised, the researchers said. This means that the two million videos — equivalent to about a year's worth of footage — that the system was trained on did not have to be labeled by a human, which dramatically reduces development time and makes it adaptable to new data.

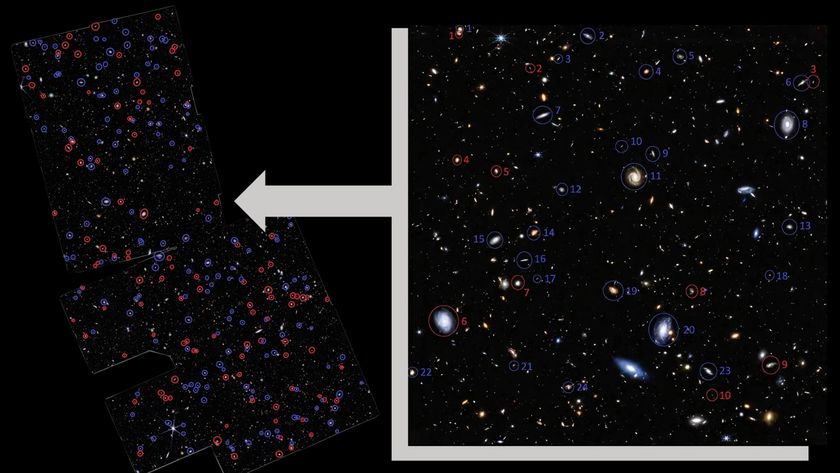

In a study that is due to be presented at the Neural Information Processing Systems (NIPS) conference, which is being held from Dec. 5 to 10 in Barcelona, Spain, the researchers explain how they trained the system using videos of beaches, train stations, hospitals and golf courses.

"In early prototypes, one challenge we discovered was that the model would predict that the background would warp and deform," Vondrick told Live Science. To overcome this, they tweaked the design so that the system learned separate models for a static background and moving foreground before combining them to produce the video.

AI filmmakers

The MIT team is not the first to attempt to use artificial intelligence to generate video from scratch. But previous approaches have tended to build video up frame by frame, the researchers said, which allows errors to accumulate at each stage. Instead, the new method processes the entire scene at once, normally 32 frames in one go.

Ian Goodfellow, a research scientist at the nonprofit organization OpenAI, who invented GANs, said that earlier systems in this field were not able to generate both sharp images and motion the way this approach does. However, he added that a new approach unveiled by Google's DeepMind AI research unit last month, called Video Pixel Networks (VPN), is able to produce both sharp images and motion. 

"Compared to GANs, VPN are easier to train, but take much longer to generate a video," he told Live Science. "VPN must generate the video one pixel at a time, while GANs can generate many pixels simultaneously."

Vondrick also pointed out that the MIT approach works on more challenging data, such as videos scraped from the web, whereas VPN was demonstrated on specially designed benchmark training sets of videos depicting bouncing digits or robot arms.
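Goodfellow's point about generation speed can be put in rough numbers. The sketch below is a back-of-the-envelope count, not a benchmark: it borrows the clip size reported for the MIT system (32 frames at 64 x 64 pixels) purely for scale, and it treats a single-pass generator as one step. Real running time depends on the models and hardware, which the article does not describe.

```python
FRAMES = 32          # frames produced in one go, per the article
HEIGHT = WIDTH = 64  # resolution reported in the article

def pixelwise_steps(frames, height, width):
    """Sequential steps if each pixel must wait for the one before it."""
    return frames * height * width

# Idealized: a generator that emits every pixel of the clip together.
single_pass_steps = 1

pixel_steps = pixelwise_steps(FRAMES, HEIGHT, WIDTH)
print(pixel_steps)        # 131072
print(single_pass_steps)  # 1
```

Even for a clip this small, pixel-by-pixel generation involves more than 130,000 dependent steps, which is consistent with Goodfellow's remark that VPN takes much longer to generate a video.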

The results are far from perfect, though. Often, objects in the foreground appear larger than they should, and humans can appear in the footage as blurry blobs, the researchers said. Objects can also disappear from a scene and others can appear out of nowhere, they added.

"The computer model starts off knowing nothing about the world. It has to learn what people look like, how objects move and what might happen," Vondrick said. "The model hasn't completely learned these things yet. Expanding its ability to understand high-level concepts like objects will dramatically improve the generations."

Another big challenge moving forward will be to create longer videos, because that will require the system to track more relationships between objects in the scene, and to do so over a longer time, according to Vondrick.

"To overcome this, it might be good to add human input to help the system understand elements of the scene that would be difficult for it to learn on its own," he said.

Original article on Live Science.