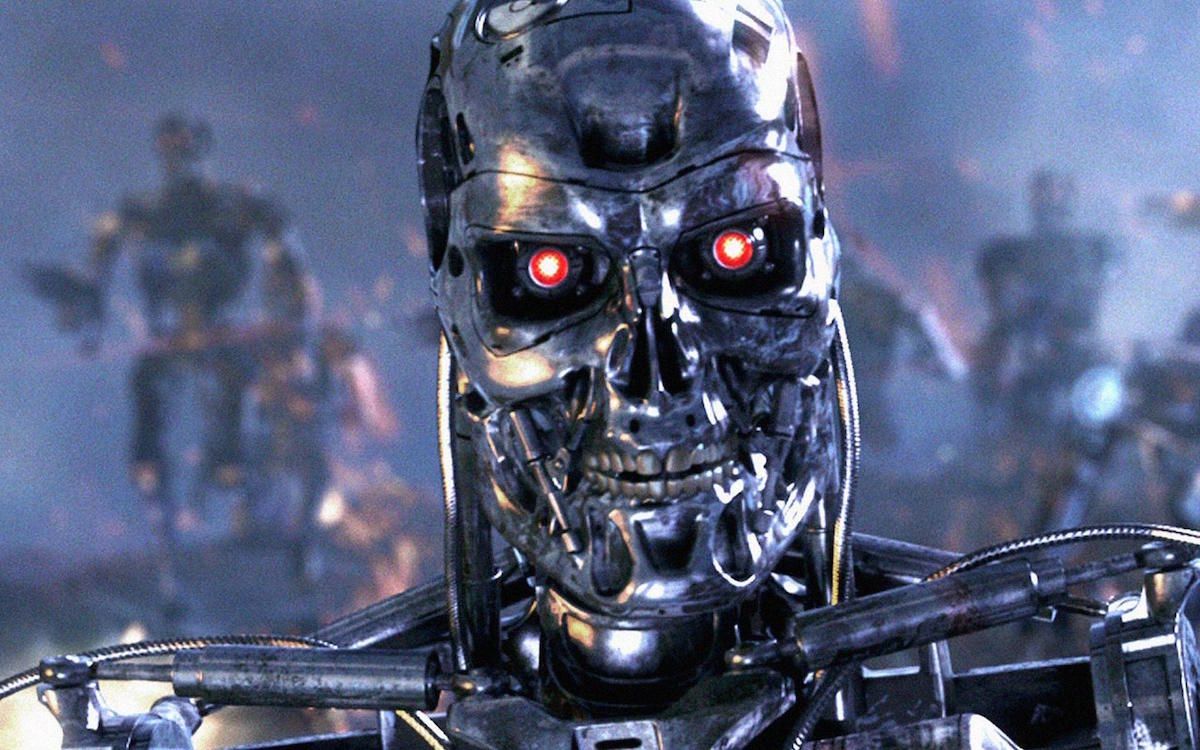

UN Will Take on 'Killer Robots' in 2017

Good news, fellow humans: The United Nations has decided to take on killer robots.

At a meeting of the Convention on Certain Conventional Weapons in Geneva, 123 participating nations voted to initiate official discussions on the danger of lethal autonomous weapons systems. That's the emerging designation for so-called "killer robots": weapons controlled by artificial intelligence that can select and strike targets without human intervention.

The agreement is the latest development in a growing movement calling for a preemptive ban on weaponized A.I. and deadly autonomous weapons. Last year, a coalition of more than 1,000 scientists and industry leaders, including Elon Musk and representatives of Google and Microsoft, signed an open letter demanding action.

The UN decision is significant because it calls for formal discussions on the issue in 2017. In high-level international deliberations, the move from "informal" to "formal" represents a real step forward, said Stephen Goose, director of the arms division at Human Rights Watch and a co-founder of the Campaign to Stop Killer Robots.

"In essence, they decided to move from the talk shop phase to the action phase, where they are expected to produce a concrete outcome," Goose said in an email exchange with Seeker.

It's widely acknowledged that military agencies around the world are already developing lethal autonomous weapons. In August, Chinese officials disclosed that the country is exploring the use of A.I. and automation in its next generation of cruise missiles.

"China's plans for weapons and artificial intelligence may be terrifying, but no more terrifying than similar efforts by the U.S., Russia, Israel, and others," Goose said. "The U.S. is farther along in this field than any other nation. Most advanced militaries are pursuing ever-greater autonomy in weapons. Killer robots would come in all sizes and shapes, including deadly miniaturized versions that could attack in huge swarms, and would operate from the air, from the ground, from the sea, and underwater."

The core issue with these weapons systems is human agency, Goose said.

"The key thing distinguishing a fully autonomous weapon from an ordinary conventional weapon, or even a semi-autonomous weapon like a drone, is that a human would no longer be deciding what or whom to target and when to pull the trigger," he said.

"The weapon system itself, using artificial intelligence and sensors, would make those critical battlefield determinations. This would change the very nature of warfare, and not for the betterment of humankind."

Goose said that pressure from the science and industry leaders, including some rather apocalyptic warnings from Stephen Hawking, helped spur the UN into action.

"The scientific community appears quite unified in opposing the development of fully autonomous weapons," he said. "They worry that pursuit of fully autonomous weapons will damage the reputation of the AI community and make it more difficult to move forward with beneficial AI efforts."

Aside from the obvious danger of killer robots gone rogue, the very development of such systems could lead to a "robotic arms race" that threatens international stability, Goose said.

"The dangers of fully autonomous weapons are foreseeable, and we should take action now to prevent potentially catastrophic future harm to civilians, to soldiers, and to the planet."

Original article on Seeker.

Most Popular