Advanced Vision Algorithm Helps Robots Learn to See in 3D

Robots are reliable in industrial settings, where recognizable objects appear at predictable times in familiar circumstances. But life at home is messy. Put a robot in a house, where it must navigate unfamiliar territory cluttered with foreign objects, and it's useless.

Now researchers have developed a new computer vision algorithm that gives a robot the ability to recognize three-dimensional objects and, at a glance, intuit items that are partially obscured or tipped over, without needing to view them from multiple angles.

"It sees the front half of a pot sitting on a counter and guesses there's a handle in the rear and that might be a good place to pick it up from," said Ben Burchfiel, a Ph.D. candidate in the field of computer vision and robotics at Duke University.

In experiments where the robot viewed 908 items from a single vantage point, it guessed the object correctly about 75 percent of the time. State-of-the-art computer vision algorithms previously achieved an accuracy of about 50 percent.

Burchfiel and George Konidaris, an assistant professor of computer science at Brown University, presented their research last week at the Robotics: Science and Systems Conference in Cambridge, Massachusetts.

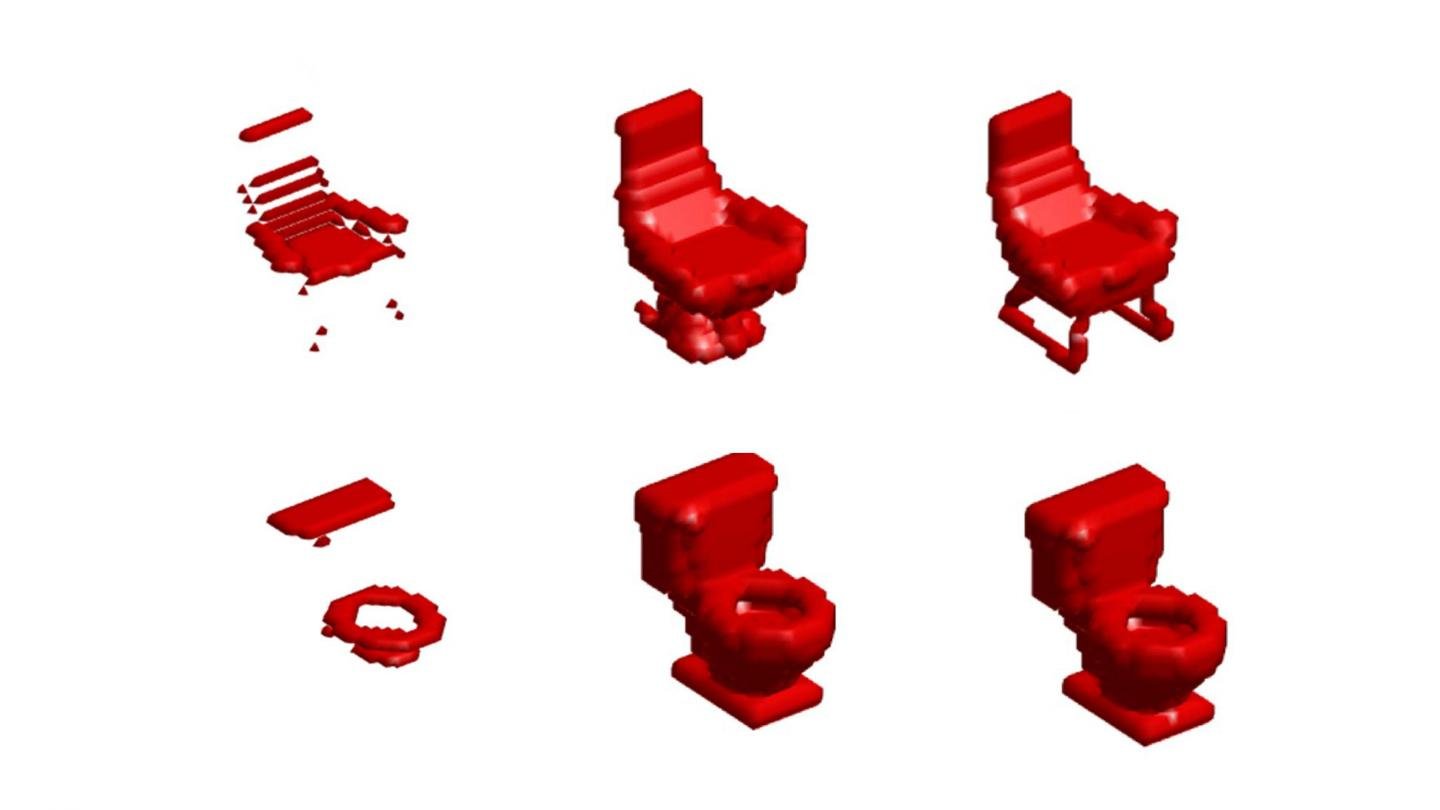

As with other computer vision systems used to train robots, their robot first learned about its world by sifting through a database of 4,000 three-dimensional objects spread across ten classes: bathtubs, beds, chairs, desks, dressers, monitors, night stands, sofas, tables, and toilets.

More conventional algorithms might train a robot to recognize a chair, pot, or sofa as a whole, or to recognize its parts and piece them together. This one instead looked at how objects within a class were similar and how they differed.

Where objects in a class were consistent, the algorithm ignored those shared features, shrinking the computational problem to a more manageable size and focusing on the parts that differed.

For example, all pots are hollow in the middle. When the algorithm was being trained to recognize pots, it didn't spend time analyzing the hollow parts. Once it knew the object was a pot, it focused instead on the depth of the pot or the location of the handle.

"That frees up resources and makes learning easier," said Burchfiel.
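
The article describes this idea only in general terms. As a toy illustration (not the authors' actual method), assume each object is stored as a flattened grid of filled (1) and empty (0) cells; any cell that has the same value in every training example of a class can then be dropped, leaving only the cells that vary. The function names and the three example "pots" below are hypothetical:

```python
from typing import List, Sequence, Tuple

# A flattened occupancy grid: 1 = filled cell, 0 = empty cell.
Voxels = Tuple[int, ...]


def find_varying_cells(examples: Sequence[Voxels]) -> List[int]:
    """Return the indices of cells whose value differs somewhere in the class.

    Cells that are identical in every example (e.g. the hollow middle of every
    pot) cannot help tell class members apart, so they are left out.
    """
    n_cells = len(examples[0])
    return [i for i in range(n_cells) if len({ex[i] for ex in examples}) > 1]


def compress(shape: Voxels, varying: List[int]) -> Voxels:
    """Keep only the cells that vary within the class."""
    return tuple(shape[i] for i in varying)


# Hypothetical 8-cell "pots": cells 0 and 7 are always walls, cells 2-5 are
# always hollow, and only cells 1 and 6 (say, handle positions) vary.
pots = [
    (1, 1, 0, 0, 0, 0, 0, 1),
    (1, 0, 0, 0, 0, 0, 1, 1),
    (1, 1, 0, 0, 0, 0, 1, 1),
]

varying = find_varying_cells(pots)
print(varying)                     # → [1, 6]
print(compress(pots[1], varying))  # → (0, 1)
```

Here eight cells shrink to two, because the walls and the hollow middle are identical in every example. A practical system would more likely learn a compact statistical model of the variation than simply discard fixed cells, but the principle of spending effort only where class members differ is the same.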

The freed-up computing resources are then used to determine whether an item is right-side up and to infer its full three-dimensional shape when part of it is hidden. That last problem is particularly vexing in computer vision because, in the real world, objects overlap.
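
The article does not explain how the robot decides whether an object is right-side up. One simple way to sketch the idea, assuming a 2D silhouette in place of a full 3D shape and a single upright template for the class (both hypothetical simplifications), is to try every quarter turn and keep the one that best matches the template:

```python
from typing import List, Tuple

# A 2D occupancy silhouette: 1 = filled cell, 0 = empty cell.
Grid = List[List[int]]


def rotate90(grid: Grid) -> Grid:
    """Rotate a grid a quarter turn clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def agreement(a: Grid, b: Grid) -> int:
    """Count the cells where two equally sized grids hold the same value."""
    return sum(x == y for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def best_orientation(observed: Grid, template: Grid) -> Tuple[int, Grid]:
    """Try all four quarter turns; return (turns, rotated grid) that best matches the template."""
    best_k, best_grid, best_score = 0, observed, agreement(observed, template)
    grid = observed
    for k in range(1, 4):
        grid = rotate90(grid)
        score = agreement(grid, template)
        if score > best_score:
            best_k, best_grid, best_score = k, grid, score
    return best_k, best_grid


# Hypothetical upright table silhouette: a solid top row and two legs.
table_template = [
    [1, 1, 1],
    [1, 0, 1],
    [1, 0, 1],
]
# The same table seen upside down.
observed = [
    [1, 0, 1],
    [1, 0, 1],
    [1, 1, 1],
]

turns, upright = best_orientation(observed, table_template)
print(turns)  # → 2 (two quarter turns: the table was upside down)
```

A real 3D system would have to search many more rotations and would compare against a learned model of the class rather than one fixed template.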

To address it, scientists have mainly turned to deep learning, an advanced form of artificial intelligence built on artificial neural networks, which process information in a way loosely modeled on how the brain learns.

Although deep-learning approaches are good at parsing complex input data, such as all of the pixels in an image, and predicting a simple output, such as "this is a cat," they're not good at the inverse task, said Burchfiel. When an object is partially obscured, the limited view (the input) is less complex than the desired output, a full three-dimensional representation.

The algorithm Burchfiel and Konidaris developed constructs a whole object from partial information by finding complex shapes that tend to be associated with each other. For instance, objects with flat square tops tend to have legs. If the robot can only see the square top, it may infer the legs.

"Another example would be handles," said Burchfiel. "Handles connected to cylindrical drinking vessels tend to connect in two places. If a mug-shaped object is seen with a small nub visible, it is likely that that nub extends into a curved, or square, handle."
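
The article does not spell out how these associations are learned. As a toy sketch (not the authors' method), assume each training object is described by a set of named parts; counting how often parts appear together then gives a rough estimate of how likely a hidden part is, given a visible one. The part names, the training set, and the 0.8 threshold below are all hypothetical:

```python
from collections import Counter
from itertools import permutations
from typing import Dict, List, Set, Tuple


def learn_cooccurrence(objects: List[Set[str]]) -> Tuple[Counter, Counter]:
    """Count how often each part appears, and how often each ordered pair appears together."""
    part_counts: Counter = Counter()
    pair_counts: Counter = Counter()
    for parts in objects:
        part_counts.update(parts)
        pair_counts.update(permutations(parts, 2))
    return part_counts, pair_counts


def infer_hidden_parts(
    visible: Set[str],
    part_counts: Counter,
    pair_counts: Counter,
    threshold: float = 0.8,
) -> Dict[str, float]:
    """Return unseen parts whose estimated P(part | a visible part) meets the threshold."""
    inferred: Dict[str, float] = {}
    for (seen, other), together in pair_counts.items():
        if seen in visible and other not in visible:
            p = together / part_counts[seen]
            if p >= threshold:
                # Keep the strongest evidence if several visible parts point to the same part.
                inferred[other] = max(inferred.get(other, 0.0), p)
    return inferred


# Hypothetical training objects, each reduced to the parts it contains.
training_objects = [
    {"flat_square_top", "legs"},
    {"flat_square_top", "legs"},
    {"flat_square_top", "legs", "drawer"},
    {"flat_square_top", "legs"},
    {"cylinder", "handle_nub", "handle"},
    {"cylinder", "handle_nub", "handle"},
    {"cylinder"},
]
part_counts, pair_counts = learn_cooccurrence(training_objects)

print(infer_hidden_parts({"flat_square_top"}, part_counts, pair_counts))
# → {'legs': 1.0}
print(infer_hidden_parts({"cylinder", "handle_nub"}, part_counts, pair_counts))
# → {'handle': 1.0}
```

A bare cylinder on its own does not clear the threshold for a handle (only 2 of the 3 cylinders had one), which mirrors the quote: it is the visible nub that makes the handle likely.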

Once trained, the robot was shown 908 new objects, each from a single viewpoint, and identified them correctly about 75 percent of the time. The approach was not only more accurate than previous methods but also fast: the trained robot took about a second to make each guess. It didn't need to view the object from different angles, and it could infer parts it couldn't see.
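
The article does not describe how the robot turns a single view into a guess. A toy sketch (not the authors' method), assuming each class is summarized by the average occupancy of each cell across its training examples, is to compare only the cells the robot can actually see and pick the closest class. The shapes, class names, and functions below are hypothetical:

```python
from typing import Dict, List, Sequence

# A flattened occupancy grid: 1 = filled cell, 0 = empty cell.
Shape = Sequence[int]


def class_means(training: Dict[str, List[Shape]]) -> Dict[str, List[float]]:
    """Average occupancy of every cell, per class."""
    return {
        label: [sum(cell) / len(shapes) for cell in zip(*shapes)]
        for label, shapes in training.items()
    }


def classify_partial(visible: Dict[int, int], means: Dict[str, List[float]]) -> str:
    """Pick the class whose average shape best agrees with the visible cells only.

    `visible` maps cell index -> observed value; hidden cells are simply absent.
    """
    def mismatch(label: str) -> float:
        mean = means[label]
        return sum(abs(value - mean[i]) for i, value in visible.items()) / len(visible)

    return min(means, key=mismatch)


def accuracy(predictions: Sequence[str], truths: Sequence[str]) -> float:
    """Fraction of guesses that match the true labels."""
    return sum(p == t for p, t in zip(predictions, truths)) / len(truths)


# Hypothetical 6-cell shapes: cells 0-2 are the top, cells 3-5 the bottom.
training = {
    "table": [(1, 1, 1, 1, 0, 1), (1, 1, 1, 1, 0, 1)],
    "chair": [(0, 0, 1, 1, 1, 1), (0, 1, 1, 1, 1, 1)],
}
means = class_means(training)

print(classify_partial({0: 1, 1: 1}, means))  # only the top is visible → table
print(classify_partial({3: 1, 4: 1}, means))  # only the bottom is visible → chair
```

For scale, about 75 percent of the 908 test objects works out to roughly 681 correct guesses (0.75 × 908 = 681), so `accuracy` would return about 0.75 for such a run.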

This type of learning gives the robot a visual perception that's similar to the way humans see. It interprets objects with a more generalized sense of the world, instead of trying to map knowledge of identical objects onto what it's seeing.

Burchfiel said he wants to build on this research by training the algorithm on millions of objects and perhaps tens of thousands of types of objects.

"We want to build this into a single robust system that could be the baseline behind a general robot perception scheme," he said.

Originally published on Seeker.