How Do You Make a Likable Robot? Program It to Make Mistakes

You might think a robot would be more likely to win people over if it were good at its job. But according to a recent study, people find imperfect robots more likable.

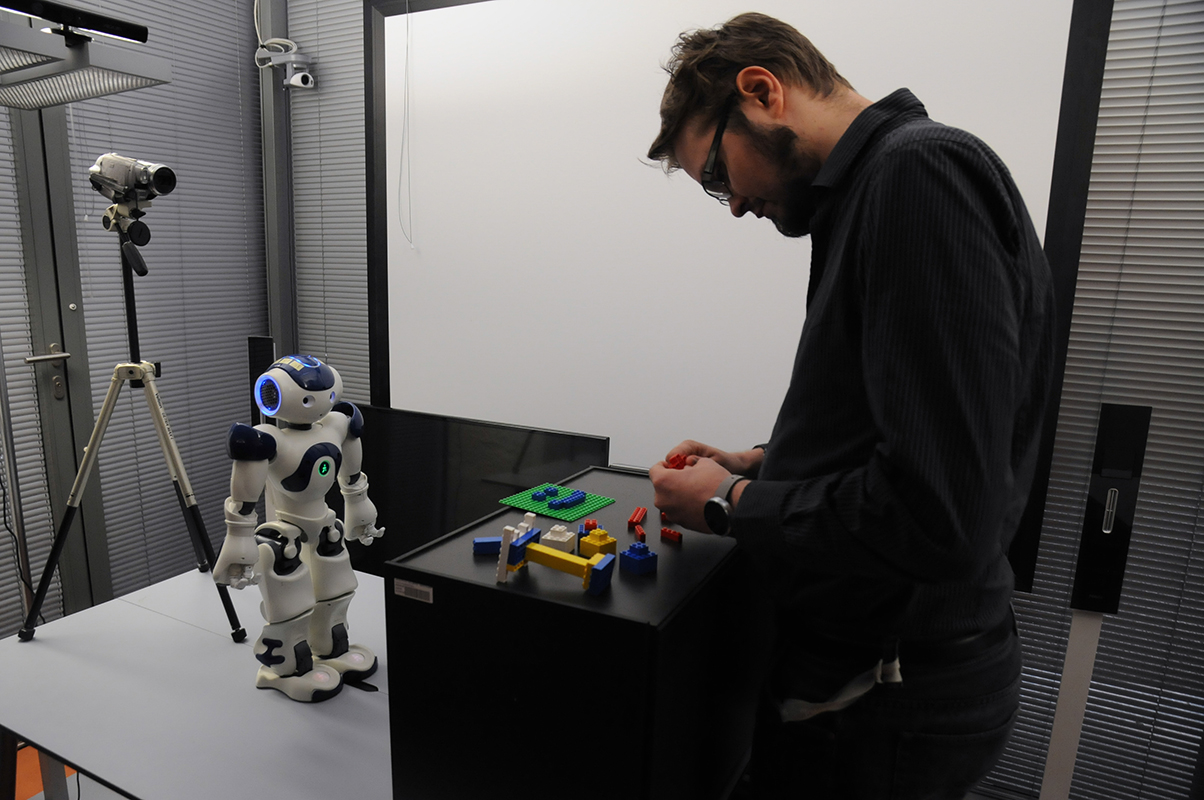

In previous studies, researchers noticed that human subjects reacted differently to robots that made unplanned errors in their tasks. For their new investigation, the study authors programmed a small, humanoid robot to deliberately make mistakes so the scientists could learn more about how that fallibility affected the way people responded to the bots. They also wanted to see how these social cues might provide opportunities for robots to learn from their experiences.

The researchers found that people liked the error-prone robot more than the error-free one, and that they responded to the robot's mistakes with social signals that robots could possibly be trained to recognize, in order to modify future behavior.

For the study, 45 human subjects — 25 men and 20 women — were paired with a robot that was programmed to perform two tasks: ask interview questions, and direct several simple Lego brick assemblies.

For 24 of the users, the robot behaved flawlessly. It posed questions and waited for their responses, and then instructed them to sort the Lego bricks and build towers, bridges and "something creative," ending the exercise by having the person arrange Legos into a facial expression to show a current emotional state, according to the study.

But for 21 people in the study, the robot's performance was less than stellar. Some of the mistakes were technical glitches, such as failing to grasp Lego bricks or repeating a question six times. Others were so-called "social norm violations," such as interrupting its human partner mid-answer or telling the person to throw the Lego bricks on the floor.

The scientists observed the interactions from a nearby station. They tracked how people reacted when the robot made a mistake, gauging participants' head and body movements, their expressions, the angle of their gaze, and whether they laughed, smiled or said something in response to the error. After the tasks were done, the scientists gave participants a questionnaire to rate how much they liked the robot, and how smart and human-like they thought it was, on a scale from 1 to 5.

The researchers found that participants responded more positively to the bumbling robot in their behavior and body language, and that they said they liked it "significantly more" than the other participants liked the robot that made no mistakes at all.
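
To make a comparison like this concrete, here is a minimal Python sketch of one way likability ratings on a 1-to-5 scale could be compared between two groups. The article does not say which statistical test the researchers used, so the permutation test below is only an illustration, and the ratings are made-up placeholder values (21 for the faulty-robot group, 24 for the flawless-robot group), not data from the study. The function name `permutation_p_value` is likewise hypothetical.

```python
import random


def mean(values):
    """Arithmetic mean of a non-empty sequence of numbers."""
    return sum(values) / len(values)


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Return (observed mean difference, one-sided p-value).

    The p-value estimates how often a random relabeling of all ratings
    gives a mean difference at least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so repeated runs agree
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_large = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if diff >= observed:
            at_least_as_large += 1
    return observed, at_least_as_large / n_permutations


# Hypothetical placeholder ratings, NOT the study's data.
faulty_ratings = [4, 5, 4, 3, 5, 4, 4, 5, 3, 4, 5,
                  4, 4, 3, 5, 4, 5, 4, 4, 3, 5]
flawless_ratings = [3, 4, 3, 3, 4, 2, 3, 4, 3, 3, 4, 3,
                    2, 3, 4, 3, 3, 4, 3, 3, 2, 4, 3, 3]

difference, p_value = permutation_p_value(faulty_ratings, flawless_ratings)
print(f"Mean difference in likability: {difference:.2f}")
print(f"Estimated one-sided p-value: {p_value:.4f}")
```

A small p-value here would mean that a gap this large rarely appears when the group labels are shuffled at random, which is the general idea behind calling a difference "significant."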

However, the subjects who found the error-prone robot more likable didn't see it as more intelligent or more human-like than the other subjects saw the error-free robot, the researchers found.

Their results suggest that robots in social settings would probably benefit from small imperfections; if that makes the bots more likable, the robots could possibly be more successful in tasks meant to serve people, the study authors wrote.

And by understanding how people respond when robots make mistakes, programmers can develop ways for robots to read those social cues and learn from them, and thereby avoid making problematic mistakes in the future, the scientists added.

"Future research should be targeted at making a robot understand the signals and make sense of them," the researchers wrote in the study.

"A robot that can understand its human interaction partner's social signals will be a better interaction partner itself, and the overall user experience will improve," they concluded.

The findings were published online May 31 in the journal Frontiers in Robotics and AI.

Original article on Live Science.

Mindy Weisberger is an editor at Scholastic and a former Live Science channel editor and senior writer. She has reported on general science, covering climate change, paleontology, biology and space. Mindy studied film at Columbia University; prior to Live Science she produced, wrote and directed media for the American Museum of Natural History in New York City. Her videos about dinosaurs, astrophysics, biodiversity and evolution appear in museums and science centers worldwide, earning awards such as the CINE Golden Eagle and the Communicator Award of Excellence. Her writing has also appeared in Scientific American, The Washington Post and How It Works Magazine. Her book "Rise of the Zombie Bugs: The Surprising Science of Parasitic Mind Control" will be published in spring 2025 by Johns Hopkins University Press.