Astonishing AI restoration brings Apollo moon landing films up to speed

The historic events look like they were shot on high-definition video.

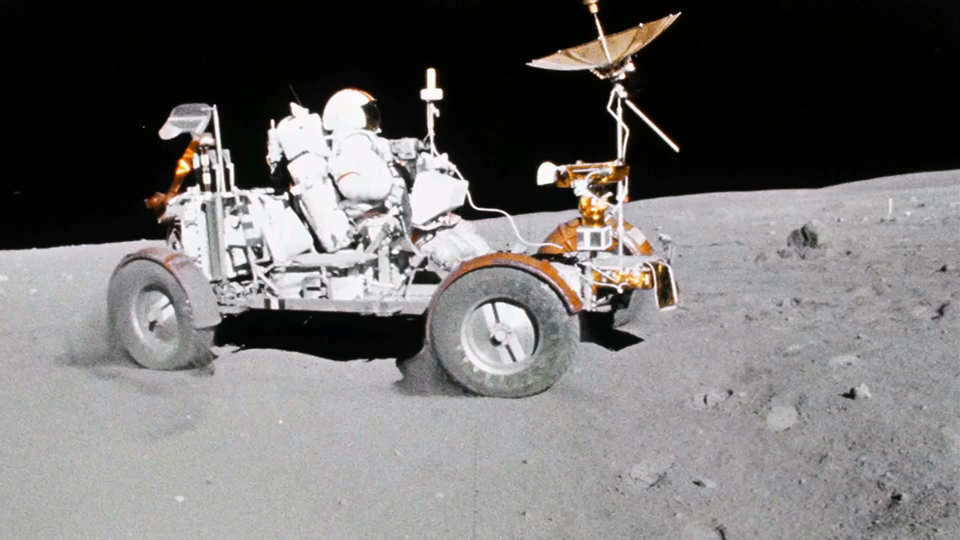

Astronauts on NASA's Apollo missions to the moon captured astounding movies of the lunar surface, but recent enhancements with artificial intelligence (AI) have really made the films out of this world.

In remastered movies shared online by DutchSteamMachine, a YouTube channel run by a film restoration specialist in the Netherlands, details from lunar scenes are astonishingly crisp and vivid, from mission commander Neil Armstrong's first steps on the moon in 1969 to bumpy lunar rover drives during Apollo 15 and 16 in 1971 and 1972, respectively.

The film restorer behind DutchSteamMachine, who also goes by "Niels," used AI to stabilize shaky footage and generate new frames in NASA moon landing films; increasing the frame rate (the number of frames that play per second) smoothed the motion and made it look more like movement in high-definition (HD) video.


The Apollo program launched 11 crewed spaceflight missions between 1968 and 1972; of those, four missions tested equipment, one (Apollo 13) returned without landing, and six landed on the moon, allowing 12 men to walk, drive and/or leap over the dusty, cratered lunar surface, according to NASA. During all of those missions, astronauts captured details of orbits, activities or experiments using 16-millimeter motion picture cameras that usually advanced the film at 1, 6 or 12 frames per second, or fps. By comparison, the film industry's standard rate is 24 fps, and HD video cameras shoot 30 or 60 fps.

When old films shot at a lower frame rate are displayed at higher rates, the motion appears sped-up and jittery, "which creates a disconnect between the past and the person watching it," Niels told Live Science in an email.
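The arithmetic behind that sped-up look is simple, and a rough sketch can make it concrete. The function names below are illustrative only and do not come from Niels or NASA; the frame rates are the ones quoted in this article.

```python
def playback_speedup(shot_fps: float, playback_fps: float) -> float:
    """Return how many times faster than real life the motion appears
    when film shot at shot_fps is played back at playback_fps."""
    return playback_fps / shot_fps


def screen_duration(real_seconds: float, shot_fps: float, playback_fps: float) -> float:
    """Return how long (in seconds) a real event lasts on screen."""
    frames_captured = real_seconds * shot_fps
    return frames_captured / playback_fps


# Film shot at 6 fps and projected at 24 fps runs four times too fast.
print(playback_speedup(6, 24))
# One real minute filmed at 12 fps lasts only half a minute at 24 fps.
print(screen_duration(60, 12, 24))
```

Playing each original frame several times would fix the speed but not the jitter, which is why generating new in-between frames is attractive.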

"I use an open-source artificial intelligence that has been 'trained' with example footage to generate entirely new frames between real ones," Niels said. "It analyzes the difference between real frames, what changed, and is able to 'interpolate' what kind of data would be there if it was shot at a higher frame rate." The AI is called Depth-Aware video frame INterpolation (DAIN), and is a free, downloadable app for Windows that is "currently in alpha and development," according to DAIN's website.
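To illustrate what "generating new frames between real ones" means, here is a deliberately naive sketch. It is not DAIN and not Niels' workflow: it simply cross-fades pixel values between two neighboring frames, whereas a trained model estimates how objects actually move. The frame representation (nested lists of grayscale values) and all names are assumptions made for this example.

```python
from typing import List

Frame = List[List[float]]  # grayscale image: rows of pixel intensities


def blend_frames(a: Frame, b: Frame, t: float) -> Frame:
    """Linearly blend two same-sized frames; t=0 gives a, t=1 gives b."""
    return [
        [(1 - t) * pa + t * pb for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def interpolate_sequence(frames: List[Frame], new_per_gap: int) -> List[Frame]:
    """Insert new_per_gap blended frames between each consecutive pair."""
    out: List[Frame] = []
    for a, b in zip(frames, frames[1:]):
        out.append(a)
        for k in range(1, new_per_gap + 1):
            out.append(blend_frames(a, b, k / (new_per_gap + 1)))
    if frames:
        out.append(frames[-1])  # keep the final real frame
    return out


# Three tiny 1x2 "frames"; adding 3 frames per gap turns 6 fps into 24 fps.
clip = [[[0.0, 10.0]], [[10.0, 20.0]], [[20.0, 30.0]]]
smooth = interpolate_sequence(clip, new_per_gap=3)
print(len(smooth))
```

A cross-fade like this produces ghosting whenever something moves, which is the kind of artifact that motion-aware interpolation is meant to avoid.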


Experts have been remastering old films for decades, but the recent addition of AI has taken results to a new level, Niels said.

"Most remastering/enhancing of old footage has been the removal of dirt and scratches, stabilizing shaky camera work, sometimes even adding color. But never generating entirely new frames based on data from two consecutive real frames," he explained.

One of the biggest challenges of creating these restorations is finding high-quality source footage; grit, particles and excessive graininess in the film can confuse the algorithm and interfere with AI's interpolation process, Niels said. NASA footage is especially rewarding for AI upgrades because the original frame rate is so low — 6 to 12 fps — that upping it to 24, 50 or 60 fps makes a very dramatic difference. And because movement in the films is so slow, the algorithm can generate more interpolating frames without digital artifacts.
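A quick calculation shows how much of the finished video is generated rather than filmed. This is a back-of-the-envelope sketch using the frame rates mentioned above; the function names are made up for illustration.

```python
from fractions import Fraction


def synthetic_frames_per_gap(source_fps: int, target_fps: int) -> int:
    """Number of new frames needed between each pair of real frames.
    Only defined here when target_fps is a whole multiple of source_fps."""
    ratio = Fraction(target_fps, source_fps)
    if ratio.denominator != 1:
        raise ValueError("target_fps must be a whole multiple of source_fps")
    return int(ratio) - 1


def synthetic_share(source_fps: float, target_fps: float) -> float:
    """Approximate fraction of output frames that are generated."""
    return 1 - source_fps / target_fps


print(synthetic_frames_per_gap(6, 24))   # new frames between each real pair
print(synthetic_frames_per_gap(12, 60))
print(synthetic_share(6, 24))            # share of the output that is generated
```

Going from 6 fps to 24 fps means three out of every four frames on screen were never photographed, so slow, predictable motion in the source makes a large difference to how convincing the result looks.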

Niels hopes that his videos will bring the moonwalks just a little bit closer to Earthbound viewers, and help them to see and appreciate these landmark events as the astronauts did. He also hopes the remastered footage will inspire more interest in space agencies' upcoming plans for launching crewed missions that fly beyond low-Earth orbit — and even return to the lunar surface — while equipped with cameras capable of shooting in HD.

"Footage actually taken with high-quality video cameras is going to be absolutely stunning," Niels told Live Science.

You can watch all of his AI-enhanced moon landing videos on the DutchSteamMachine YouTube channel, and you can find more of his projects on Patreon.

Originally published on Live Science.

Mindy Weisberger is a science journalist and author of "Rise of the Zombie Bugs: The Surprising Science of Parasitic Mind-Control" (Hopkins Press). She formerly edited for Scholastic and was a channel editor and senior writer for Live Science. She has reported on general science, covering climate change, paleontology, biology and space. Mindy studied film at Columbia University; prior to LS, she produced, wrote and directed media for the American Museum of Natural History in NYC. Her videos about dinosaurs, astrophysics, biodiversity and evolution appear in museums and science centers worldwide, earning awards such as the CINE Golden Eagle and the Communicator Award of Excellence. Her writing has also appeared in Scientific American, The Washington Post, How It Works Magazine and CNN.