Expect an Orwellian future if AI isn't kept in check, Microsoft exec says

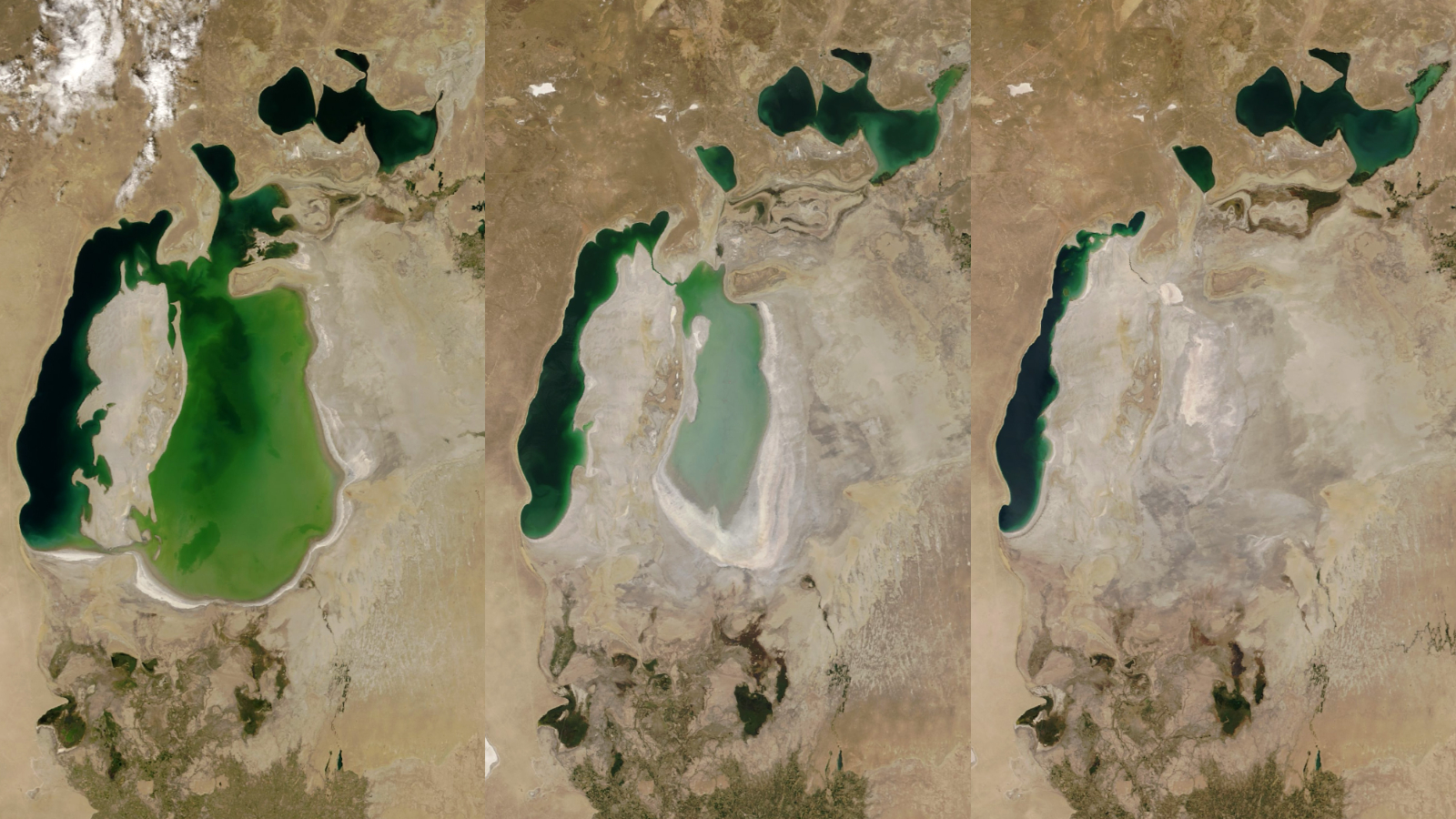

AI is already being used for widespread surveillance in China.

Artificial intelligence could lead to an Orwellian future if laws to protect the public aren't enacted soon, according to Microsoft President Brad Smith.

Smith made the comments to the BBC news program "Panorama" on May 26, during an episode focused on the potential dangers of artificial intelligence (AI) and the race between the United States and China to develop the technology. The warning comes about a month after the European Union released draft regulations attempting to set limits on how AI can be used. There are few similar efforts in the United States, where legislation has largely focused on limiting regulation and promoting AI for national security purposes.

"I'm constantly reminded of George Orwell's lessons in his book '1984,'" Smith said. "The fundamental story was about a government that could see everything that everyone did and hear everything that everyone said all the time. Well, that didn't come to pass in 1984, but if we're not careful, that could come to pass in 2024."

A tool with a dark side

Artificial intelligence is an ill-defined term, but it generally refers to machines that can learn or solve problems automatically, without being directed by a human operator. Many AI programs today rely on machine learning, a suite of computational methods used to recognize patterns in large amounts of data and then apply those lessons to the next round of data, theoretically becoming more and more accurate with each pass.

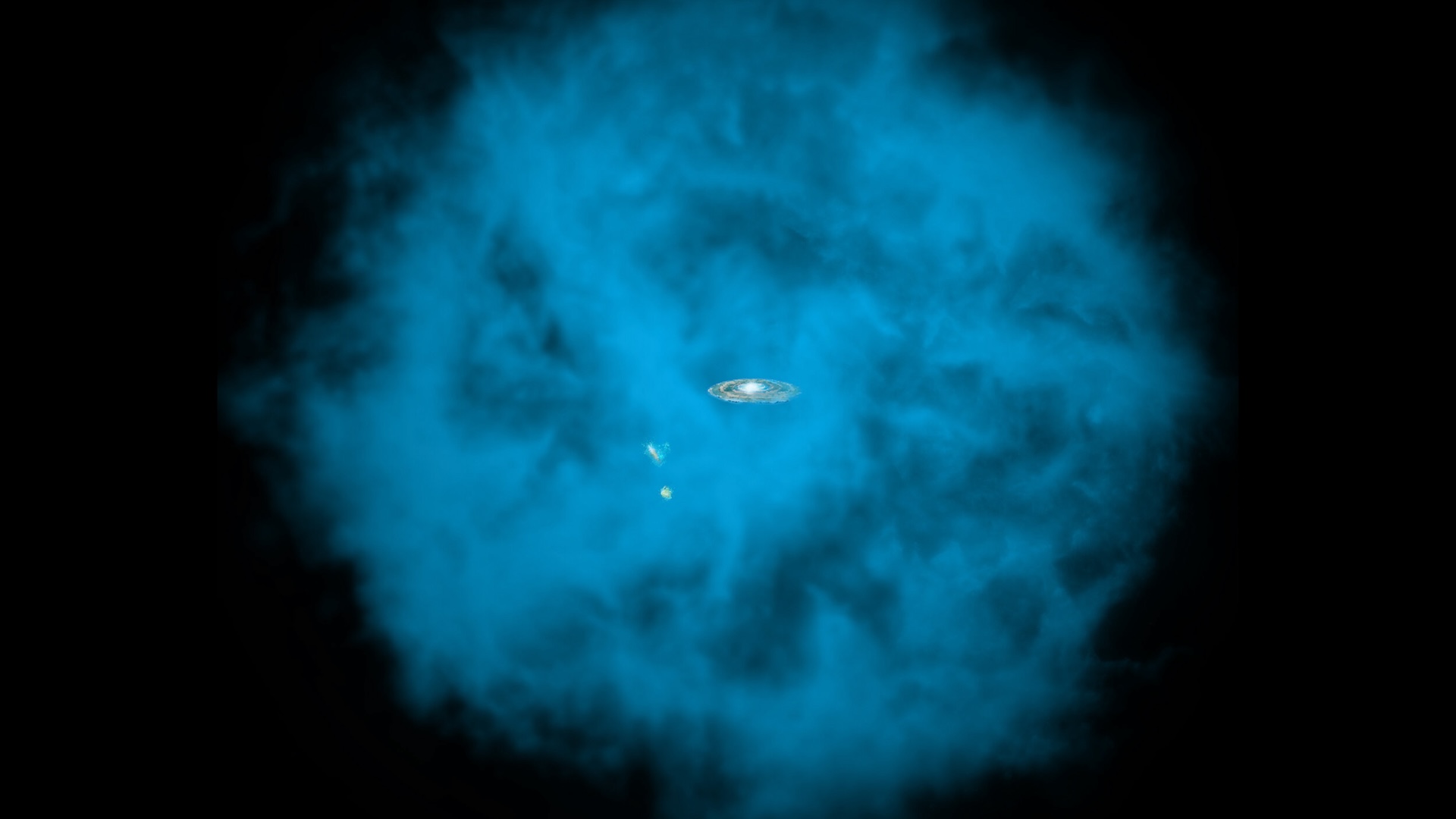

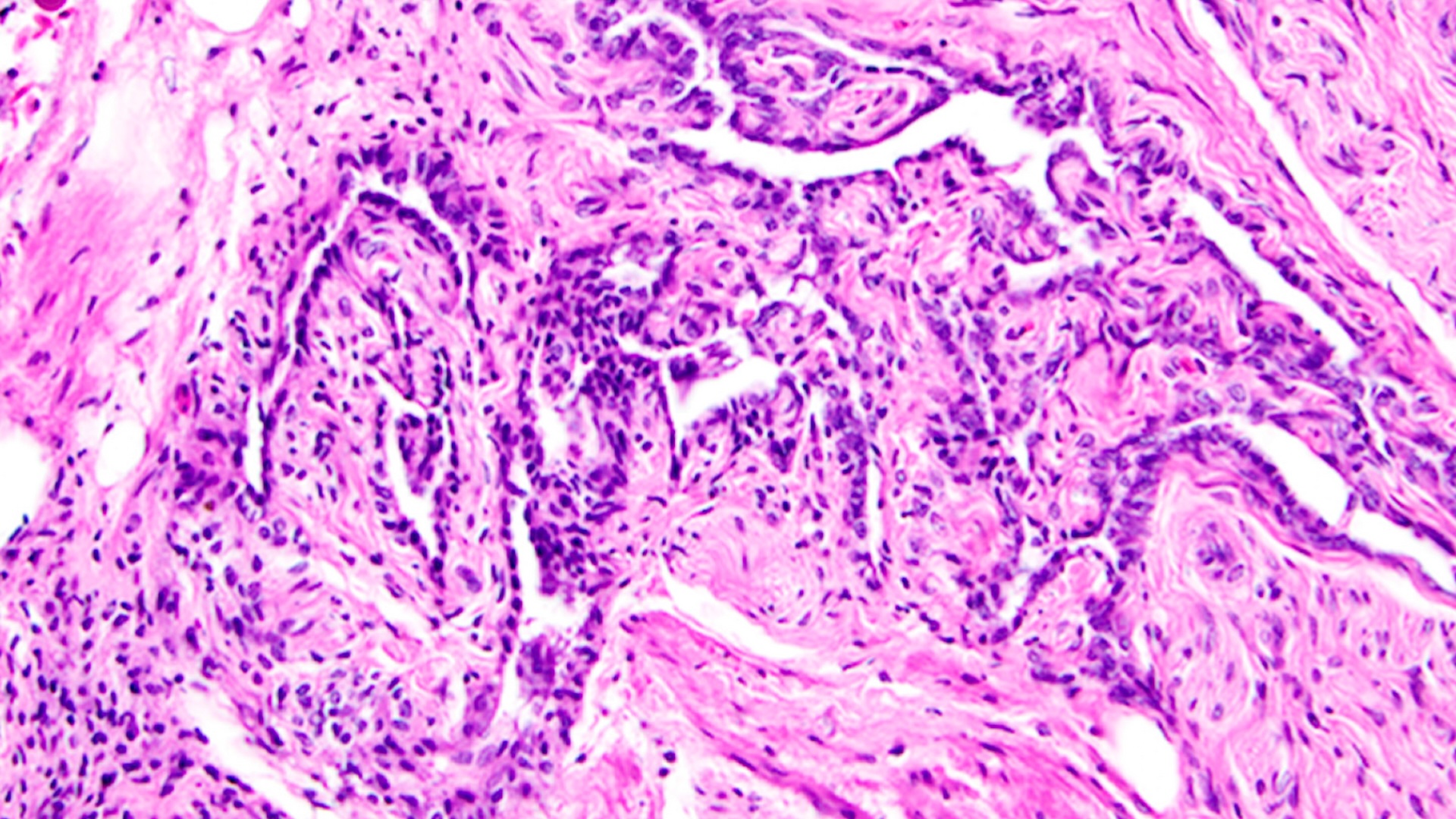

This is an extremely powerful approach that has been applied to everything from basic mathematical theory to simulations of the early universe, but it can be dangerous when applied to social data, experts argue. Data on humans comes preinstalled with human biases. For example, a recent study in the journal JAMA Psychiatry found that algorithms meant to predict suicide risk performed far worse on Black and American Indian/Alaska Native individuals than on white individuals. That was partly because there were fewer patients of color in the medical system and partly because patients of color were less likely to get treatment and appropriate diagnoses in the first place, so the original data was skewed to underestimate their risk.

Bias can never be completely avoided, but it can be addressed, said Bernhardt Trout, a professor of chemical engineering at the Massachusetts Institute of Technology who teaches a professional course on AI and ethics. The good news, Trout told Live Science, is that reducing bias is a top priority within both academia and the AI industry.

"People are very cognizant in the community of that issue and are trying to address that issue," he said.

Government surveillance

The misuse of AI, on the other hand, is perhaps more challenging, Trout said. How AI is used isn't just a technical issue; it's just as much a political and moral question. And those values vary widely from country to country.

"Facial recognition is an extraordinarily powerful tool in some ways to do good things, but if you want to surveil everyone on a street, if you want to see everyone who shows up at a demonstration, you can put AI to work," Smith told the BBC. "And we're seeing that in certain parts of the world."

China has already started using artificial intelligence technology in both mundane and alarming ways. Facial recognition, for example, is used in some cities instead of tickets on buses and trains. But this also means that the government has access to copious data on citizens' movements and interactions, the BBC's "Panorama" found. The U.S.-based advocacy group IPVM, which focuses on video surveillance ethics, has found documents suggesting plans in China to develop a system called "One person, one file," which would gather each resident's activities, relationships and political beliefs in a government file.

"I don't think that Orwell would ever [have] imagined that a government would be capable of this kind of analysis," Conor Healy, director of IPVM, told the BBC.

Orwell's famous novel "1984" described a society in which the government watches citizens through "telescreens," even at home. But Orwell did not imagine the capabilities that artificial intelligence would add to surveillance — in his novel, characters find ways to avoid the video surveillance, only to be turned in by fellow citizens.

In the autonomous region of Xinjiang, where the Uyghur minority has accused the Chinese government of torture and cultural genocide, AI is being used to track people and even to assess their guilt when they are arrested and interrogated, the BBC found. It's an example of the technology facilitating widespread human-rights abuse: The Council on Foreign Relations estimates that a million Uyghurs have been forcibly detained in "reeducation" camps since 2017, typically without any criminal charges or legal avenues to escape.

Pushing back

The EU's proposed regulation of AI would ban systems that attempt to circumvent users' free will, as well as systems that enable any kind of "social scoring" by governments. Other types of applications are considered "high risk" and must meet requirements for transparency, security and oversight before they can be put on the market. These include AI for critical infrastructure, law enforcement, border control and biometric identification, such as face- or voice-identification systems. Still other systems, such as customer-service chatbots or AI-enabled video games, are considered low risk and are not subject to strict scrutiny.

The U.S. federal government's interest in artificial intelligence, by contrast, has largely focused on encouraging the development of AI for national security and military purposes. This focus has occasionally led to controversy. In 2018, for example, Google dropped out of Project Maven, a Pentagon program to automatically analyze video taken by military aircraft and drones. The company argued that the goal was only to flag objects for human review, but critics feared the technology could be used to automatically target people and places for drone strikes. Whistleblowers within Google brought the project to light, ultimately generating public pressure strong enough that the company declined to renew its contract.

Nevertheless, the Pentagon now spends more than $1 billion a year on AI contracts, and military and national security applications of machine learning are inevitable, given China's enthusiasm for achieving AI supremacy, Trout said.

"You cannot do very much to hinder a foreign country's desire to develop these technologies," Trout told Live Science. "And therefore, the best you can do is develop them yourself to be able to understand them and protect yourself, while being the moral leader."

In the meantime, efforts to rein in AI domestically are being led by state and local governments. King County, Washington state's most populous county, recently banned government use of facial recognition software. It is the first county in the U.S. to do so, although the city of San Francisco made the same move in 2019, followed by a handful of other cities.

Facial recognition software has already led to false arrests. In one case made public in June 2020, a Black man in Detroit was arrested and held in detention for 30 hours because an algorithm falsely identified him as a suspect in a shoplifting case. A 2019 study by the National Institute of Standards and Technology found that facial recognition software returned more false matches for Black and Asian individuals than for white individuals, meaning that the technology is likely to deepen disparities in policing for people of color.

"If we don't enact, now, the laws that will protect the public in the future, we're going to find the technology racing ahead," Smith said, "and it's going to be very difficult to catch up."

The full documentary is available on YouTube.

Originally published on Live Science.

Stephanie Pappas is a contributing writer for Live Science, covering topics ranging from geoscience to archaeology to the human brain and behavior. She was previously a senior writer for Live Science but is now a freelancer based in Denver, Colorado, and regularly contributes to Scientific American and The Monitor, the monthly magazine of the American Psychological Association. Stephanie received a bachelor's degree in psychology from the University of South Carolina and a graduate certificate in science communication from the University of California, Santa Cruz.