'I encountered the terror of never finding anything': The hollowness of AI art proves machines can never emulate genuine human intelligence

The concepts of "sentience" and "agency" in machines are muddled, particularly because both are difficult to define and measure. But many speculate that the improvements we are seeing in artificial intelligence (AI) may one day amount to a new form of intelligence that supersedes our own.

Regardless, AI has been a part of our lives for many years — and we encounter its invisible hand predominantly on the digital platforms most of us inhabit daily. Digital technologies once held immense promise for transforming society, but this utopianism feels like it's slipping away, argues technologist and author Mike Pepi, in his new book "Against Platforms: Surviving Digital Utopia" (Melville House Publishing, 2025).

We have been taught that digital tools are neutral, but in reality, they are laden with dangerous assumptions and can lead to unintended consequences. In this excerpt, Pepi assesses whether AI — the technology at the heart of so many of these platforms — can ever emulate the human feelings that move us, through the prism of art.

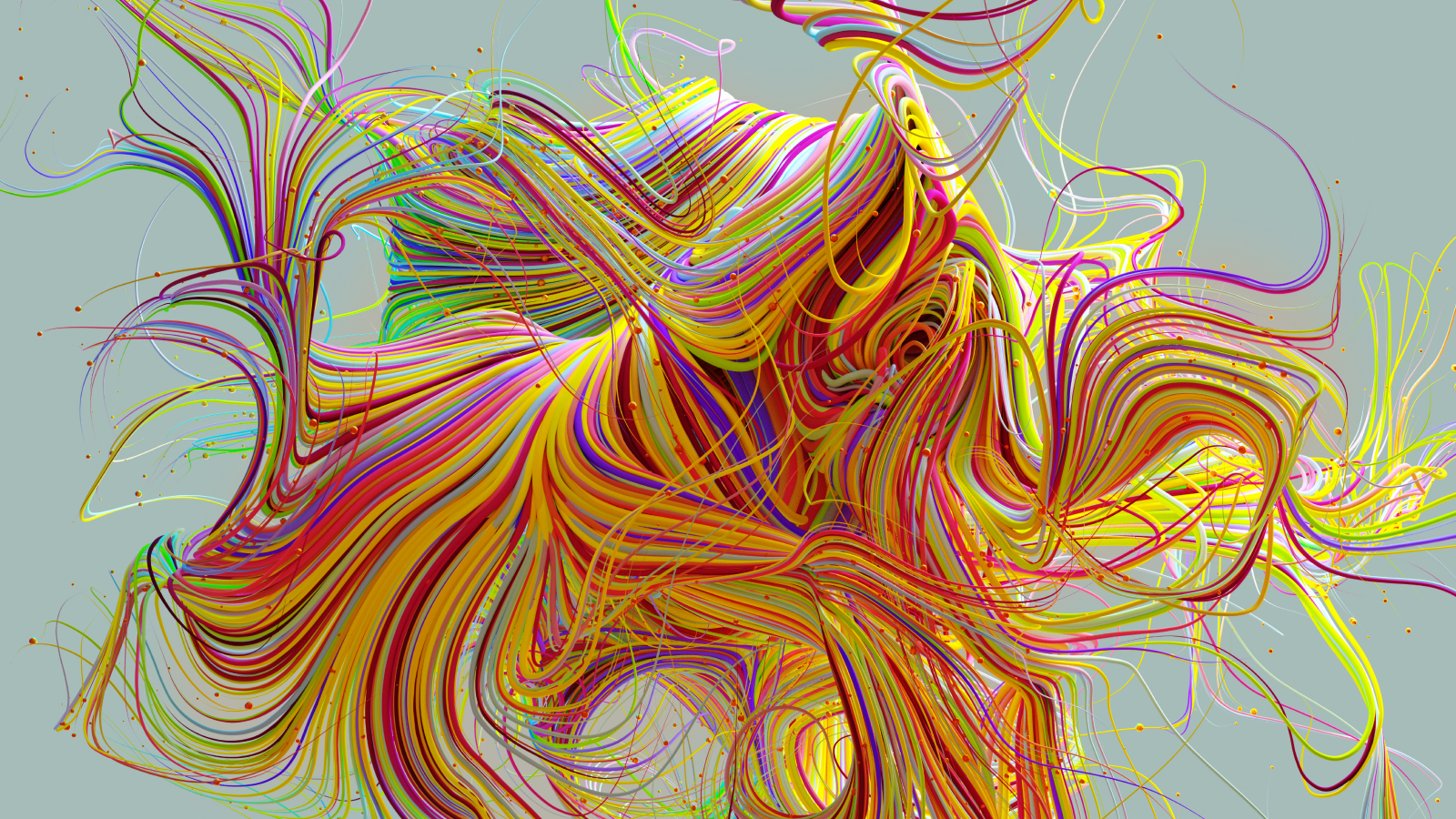

The Museum of Modern Art’s atrium was packed to the brim the day I visited Refik Anadol’s much-anticipated installation of Unsupervised (2022). As I entered, the crowd was fixated on a massive projection of one of the artist’s digital "hallucinations." MoMA’s curators tell us that Anadol’s animations use artificial intelligence "to interpret and transform" the museum’s collection. As the machine learning algorithm traverses billions of data points, it "reimagines the history of modern art and dreams about what might have been." I saw animated bursts of red lines and intersecting orange radials. Soon, globular facial forms appeared. The next moment, a trunk of a tree settled in the corner. A droning, futuristic soundtrack filled the room from invisible speakers. The crowd reacted with a hushed awe as the mutating projections approached familiar forms.


Anadol’s work debuted at a moment of great hype about artificial intelligence’s, or AI’s, ability to be creative. The audience was not only there to see the fantastic animations on the screen. Many came to witness a triumph of machine creativity in the symbolic heart of modern art.

Every visitor to Unsupervised encountered a unique mutation. Objects eluded the mind’s grasp. Referents slipped out of view. The moments of beauty were accidental, random flashes of computation, never to return. Anadol calls it a "self-regenerating element of surprise"; one critic called it a screensaver. As I gazed into the mutations, I admit I found moments of beauty. It could have registered as relaxation, even bliss. For some, fear, even terror. The longer I stuck around, the more emptiness I encountered. How could I make any statement about the art before me when the algorithm was programmed to equivocate? Was it possible for a human to appreciate, let alone grasp, the end result?


In need of a break, I headed upstairs to see Andrew Wyeth’s Christina’s World (1948), part of the museum’s permanent collection. Christina’s World is a realist depiction of an American farm. In the center of the frame, a woman lies in a field, gesturing longingly toward a distant barn. The field makes a dramatic sweeping motion, etched in an ochre grass. The woman wears a pink dress and contorts at a slight angle. The sky is gray, but calm.

Most viewers are confronted by questions: Who is this woman, and why does she lie in this field? Christina was Andrew Wyeth’s neighbor. At a young age, she developed a muscular disability and was unable to walk. She preferred to crawl around her parents’ property, which Wyeth witnessed from his home nearby. Still, there are more questions about Christina. What is Wyeth trying to say in the distance between his subjects? What is Christina thinking in the moment that Wyeth captures? This tiny epistemological game plays out each time one views Christina’s World. We consider the artist’s intent. We try to match our interpretation with the historical tradition from which the work emerged. With more information, we can still further peer into the work and wrestle with its contradictions. This is possible because there is a single referent. This doesn’t mean its meaning is fixed, or that we prefer its realism. It means that the thinking we do with this work meets an equal, human, creative act.

The emptiness of AI art

The experience of Unsupervised is wholly different. The work is combinatorial, which is to say, it tries to make something new from previous data about art. The relationships drawn are mathematical, and the moments of recognition are accidental. Anadol calls his method a "thinking brush." While he is careful to explain that the AI is not sentient, the appeal of the work relies on the machine’s encroachments on the brain. Anadol says we "see through the mind of a machine." But there is no mind at work at all. It’s pure math, pure randomness. There is motion, but it’s stale. The novelty is fleeting.

In the atrium, Unsupervised presents thousands of images, but I can ask nothing of them. Up a short flight of steps, I am presented with a single image and can ask dozens of questions. The institution of art is the promise that some, indeed many, of those will be answered. They may not be done with certainty, but very few things are. Nonetheless, the audience still communes with the narrative power of Christina’s World. With Unsupervised, the only thing reflected back was a kind of blank, algorithmic stare. I could not help but think that Christina’s yearning gaze, never quite revealed, might not be unlike the gaping stare of the audience in the atrium below. As I peered into the artificially intelligent animations searching for anything to see, I encountered the terror of never finding anything — a kind of paralysis of vision — not the inability to perceive but the inability to think alongside what I saw.

All artificial intelligence is based on mathematical models that computer scientists call machine learning. In most cases, we feed the program training data and ask various kinds of networks to detect patterns. In recent years, machine learning programs have become able to perform ever more complex tasks thanks to increases in computing power, advancements in software programming, and, most of all, an exponential explosion of training data. But for half a century, even the best AI was capped in its process, able only to automate predefined supervised analysis.

For example, given a set of information about users’ movie preferences and some data about a new user, it could predict what movies this user might like. This presents itself to us as “artificial intelligence” because it replaces and far surpasses, functionally, the act of asking a friend (or better yet, a book) for a movie recommendation. Commercially, it flourished. But could these same software and hardware tools create a movie itself? For many years, the answer was “absolutely not.” AI could predict and model, but it could not create. A machine learning system is supervised because each input has a correct output, and the algorithm constantly fixes and retrains the model to get closer and closer to the point that the model can predict something accurately. But what happens when we don’t tell the model what is correct?
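The supervised loop described above, in which each input has a correct output and the model is repeatedly corrected, can be sketched in a few lines of Python. This is a minimal illustration, not any real recommender: the two taste features, the tiny data set, and the names `predict` and `train` are all assumptions made for the example, and the learning rule is a simple perceptron chosen for brevity.

```python
# Hypothetical training data: (taste for action, taste for romance) -> liked the film?
# Every input comes with a known correct answer, which is what makes this "supervised".
EXAMPLES = [
    ((1.0, 0.0), 1),
    ((0.9, 0.2), 1),
    ((0.1, 0.9), 0),
    ((0.0, 1.0), 0),
]


def predict(weights, bias, features):
    """Return 1 (will like the film) if the weighted score is positive, else 0."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train(examples, epochs=50, learning_rate=0.1):
    """Perceptron rule: nudge the model whenever a prediction disagrees with the label."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)  # 0 when already correct
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(EXAMPLES)
# A new user the model has never seen; 1 means "predicted to like the film".
new_user_prediction = predict(weights, bias, (0.8, 0.1))
```

The model only ever learns to reproduce the labels it was given. Nothing in the loop asks it to produce a film, only to guess a yes or no about one.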

Can AI ever create genuinely 'new' content?

What if we gave it a few billion examples of cat images for training, and then told it to make a completely new image of a cat? In the past decade, this became possible with generative AI, a type of deep learning that, in one common approach, uses generative adversarial networks to create new content. Two neural networks are pitted against each other: one called a generator, which produces new data, and one called a discriminator, which evaluates it.

The generator and discriminator compete in tandem, with the generator updating outputs based on the feedback from the discriminator. Eventually, this process creates content that is nearly indistinguishable from the training data. With the introduction of tools like ChatGPT, Midjourney, and DALL-E 2, generative AI boosters claim we have crossed into a Cambrian explosion broadly expanding the limits of machine intelligence. Unlike previous AI applications that simply analyzed existing data, generative AI can create novel content, including language, music, and images.
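The back-and-forth between generator and discriminator can be made concrete with a toy, one-dimensional sketch. Everything here is an assumption for illustration: the "real" data are just numbers drawn around 4.0, the generator is a straight line applied to random noise, the discriminator is a single logistic unit, and the names `sigmoid` and `train_toy_gan` are hypothetical. It is not how Unsupervised or any commercial tool is built, and a setup this small may oscillate rather than settle.

```python
import math
import random


def sigmoid(score):
    """Numerically stable logistic function, returning a value in [0, 1]."""
    if score >= 0:
        return 1.0 / (1.0 + math.exp(-score))
    exp_score = math.exp(score)
    return exp_score / (1.0 + exp_score)


def train_toy_gan(steps=2000, learning_rate=0.01, seed=0):
    """Alternate one discriminator update and one generator update per step."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.1, 0.0  # discriminator: P(sample is real) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)   # assumed "training data"
        noise = rng.gauss(0.0, 1.0)
        fake = a * noise + b

        # Discriminator step: raise P(real) on the real sample, lower it on the fake.
        p_real = sigmoid(w * real + c)
        p_fake = sigmoid(w * fake + c)
        grad_w = (p_real - 1.0) * real + p_fake * fake
        grad_c = (p_real - 1.0) + p_fake
        w -= learning_rate * grad_w
        c -= learning_rate * grad_c

        # Generator step: move the fake sample toward what the discriminator calls real.
        p_fake = sigmoid(w * fake + c)
        grad_fake = (p_fake - 1.0) * w  # gradient of -log P(real) w.r.t. the fake sample
        a -= learning_rate * grad_fake * noise
        b -= learning_rate * grad_fake
    return (a, b), (w, c)


(gen_scale, gen_shift), (disc_weight, disc_bias) = train_toy_gan()
```

The generator never sees the real numbers directly. Its only signal is the discriminator's verdict, which is the feedback loop the passage describes.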


The promise of Unsupervised is a microcosm for generative AI: fed with enough information, nonhuman intelligence can think on its own and create something new, even beautiful. Yet the distance between Christina’s World and Unsupervised is just one measure of the difference between computation and thought. AI researchers frequently refer to the brain as “processing information.” This is a flawed metaphor for how we think. As material technology advanced, we looked for new metaphors to explain the brain. The ancients used clay, viewing the mind as a blank slate upon which symbols were etched; the nineteenth century used steam engines; and later, brains were electric machines. Only a few years after computer scientists started processing data on mainframe computers, psychologists and engineers started to speak of the brain as an information processor.

The problem is your brain is not a computer, and computers are not brains. Computers process data and calculate results. They can solve equations, but they do not reason on their own. Computers can only blindly mimic the work of the brain; they will never have consciousness, sentience, or agency. Our minds, likewise, do not process information. Thus, there are states of mind that cannot be automated, and intelligences that machines cannot have.

Disclaimer

From Against Platforms: Surviving Digital Utopia. Used with permission of the publisher, Melville House Publishing. Copyright © 2025 by Mike Pepi.

Against Platforms: Surviving Digital Utopia by Mike Pepi

In Against Platforms, technologist and creator Mike Pepi lays out an explanation of what went wrong — and a manifesto for putting it right.

The key is that we have been taught that digital technologies are neutral tools, transparent, easily understood, and here to serve us. The reality, Pepi says, is that they are laden with assumptions and collateral consequences — ideology, in other words. It is this hidden ideology that must be dismantled if we are to harness technology for the fullest expression of our humanity.

Mike Pepi is a technologist and author who has written widely about the intersection between culture and the Internet. An art critic and theorist, he self-identifies as part of the "tech left" — digital natives who want to reshape technology as a force for progressive good. His writing has been published in Spike, Frieze, e-flux, and other venues.
