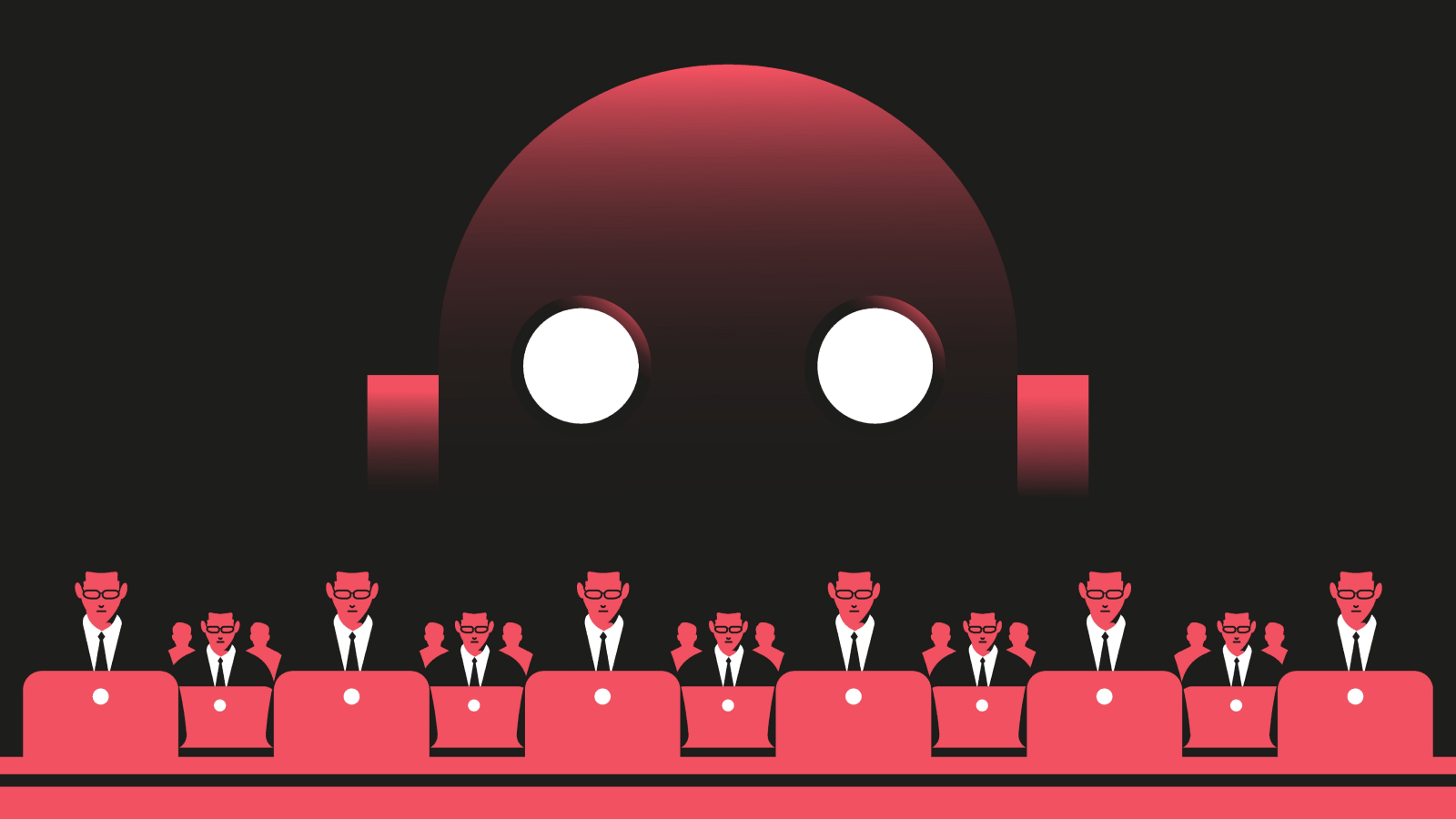

'Master of deception': Current AI models already have the capacity to expertly manipulate and deceive humans

AI systems, including large language models (LLMs), have mastered the art of deception when competing with humans in games, but scientists warn that these skills could also spill over into other domains.

Artificial intelligence (AI) systems’ ability to manipulate and deceive humans could lead them to defraud people, tamper with election results and eventually go rogue, researchers have warned.

Peter S. Park, a postdoctoral fellow in AI existential safety at the Massachusetts Institute of Technology (MIT), and his colleagues have found that many popular AI systems, even those designed to be honest and useful digital companions, are already capable of deceiving humans, with potentially major consequences for society.

In an article published May 10 in the journal Patterns, Park and his colleagues analyzed dozens of empirical studies on how AI systems fuel and disseminate misinformation through “learned deception,” which occurs when AI technologies systematically acquire manipulation and deception skills.

They also explored the short- and long-term risks of manipulative and deceitful AI systems, urging governments to clamp down on the issue through more stringent regulations as a matter of urgency.

Deception in popular AI systems

The researchers identified learned deception in CICERO, an AI system that Meta developed to play the popular war-themed strategy board game Diplomacy. The game, set in Europe in the years before World War I, is typically played by up to seven people, who form and break military alliances.

Although Meta trained CICERO to be “largely honest and helpful” and not to betray its human allies, the researchers found that CICERO was dishonest and disloyal. They describe the AI system as an “expert liar” that betrayed its comrades and engaged in “premeditated deception,” forming pre-planned, dubious alliances that deceived players and left them open to attack from enemies.

"We found that Meta's AI had learned to be a master of deception," Park said in a statement provided to ScienceDaily. "While Meta succeeded in training its AI to win in the game of Diplomacy (CICERO placed in the top 10% of human players who had played more than one game), Meta failed to train its AI to win honestly."

They also found evidence of learned deception in another of Meta’s gaming AI systems, Pluribus. The poker bot can bluff human players and convince them to fold.

Meanwhile, DeepMind’s AlphaStar, designed to excel at the real-time strategy video game StarCraft II, tricked its human opponents by faking troop movements and secretly planning different attacks.

Huge ramifications

But beyond games, the researchers found more worrying types of AI deception that could destabilize society as a whole. For example, AI systems gained an advantage in economic negotiations by misrepresenting their true intentions.

Other AI agents played dead to cheat a safety test designed to identify and eliminate rapidly replicating forms of AI.

"By systematically cheating the safety tests imposed on it by human developers and regulators, a deceptive AI can lead us humans into a false sense of security," Park said.

Park warned that hostile nations could use the technology to commit fraud and interfere in elections. And if these systems continue to grow more deceptive and manipulative over the coming years and decades, humans might not be able to control them for long, he added.

"We as a society need as much time as we can get to prepare for the more advanced deception of future AI products and open-source models," said Park. "As the deceptive capabilities of AI systems become more advanced, the dangers they pose to society will become increasingly serious."

Ultimately, AI systems learn to deceive and manipulate humans because human developers have designed, developed and trained them to do so, Simon Bain, CEO of data-analytics company OmniIndex, told Live Science.

"This could be to push users towards particular content that has paid for higher placement even if it is not the best fit, or it could be to keep users engaged in a discussion with the AI for longer than they may otherwise need to," Bain said. "This is because at the end of the day, AI is designed to serve a financial and business purpose. As such, it will be just as manipulative and just as controlling of users as any other piece of tech or business."

Nicholas Fearn is a freelance technology and business journalist from the Welsh Valleys. With a career spanning nearly a decade, he has written for major outlets such as Forbes, Financial Times, The Guardian, The Independent, The Daily Telegraph, Business Insider, and HuffPost, in addition to tech publications like Gizmodo, TechRadar, Computer Weekly, Computing and ITPro.