GPT-4.5 is the first AI model to pass an authentic Turing test, scientists say

GPT-4.5 has successfully convinced people it’s human 73% of the time in an authentic configuration of the original Turing test.

Large language models (LLMs) are getting better at pretending to be human, with GPT-4.5 now resoundingly passing the Turing test, scientists say.

In the new study, published March 31 to the arXiv preprint database but not yet peer reviewed, researchers found that when taking part in a three-party Turing test, GPT-4.5 could fool people into thinking it was another human 73% of the time. The study compared several artificial intelligence (AI) models, including GPT-4.5 and Meta's LLaMa-3.1.

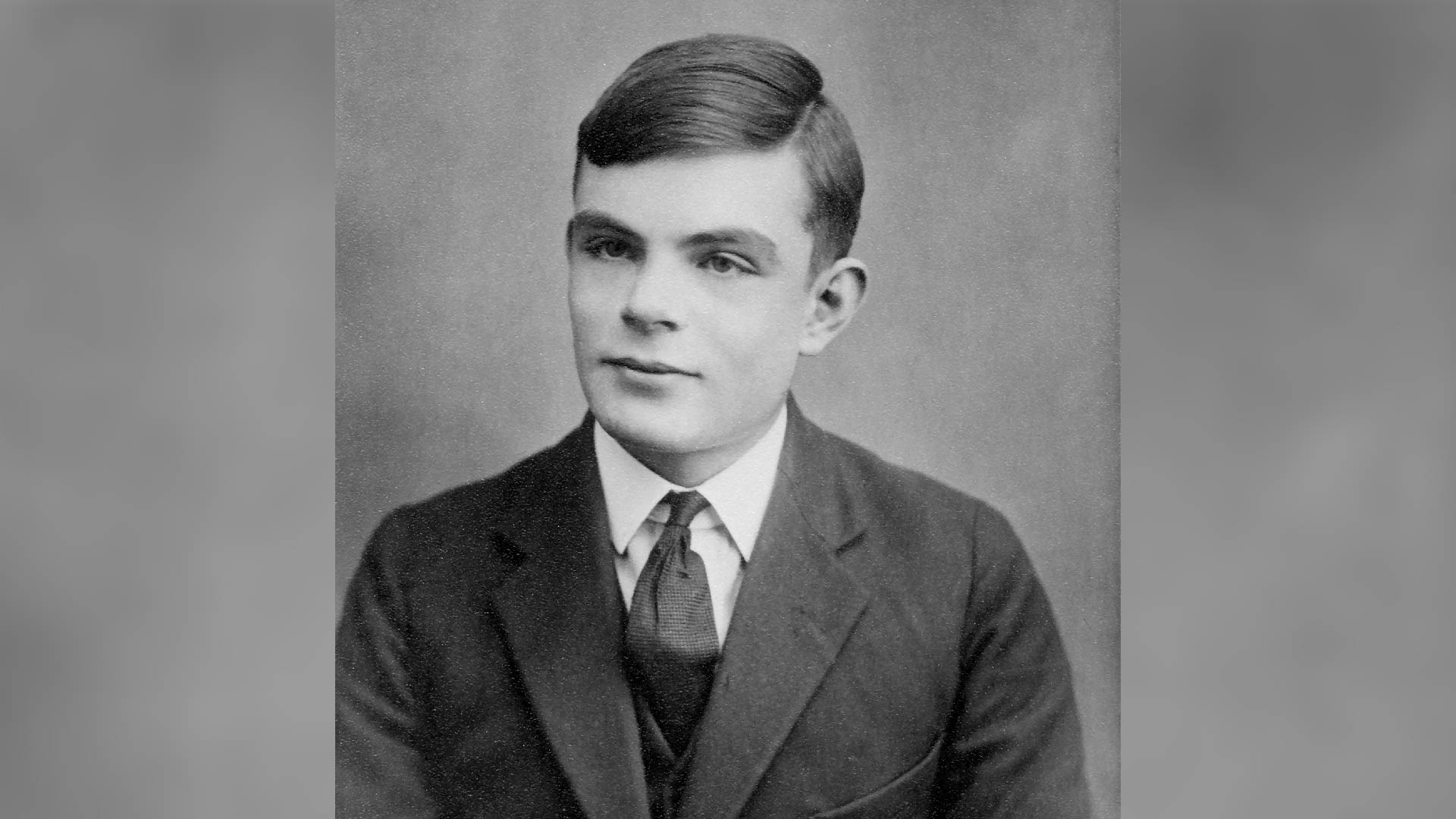

While another team of scientists has previously reported that GPT-4 passed a two-party Turing test, this is the first time an LLM has passed the more challenging and original configuration of computer scientist Alan Turing's "imitation game."

"So do LLMs pass the Turing test? We think this is pretty strong evidence that they do. People were no better than chance at distinguishing humans from GPT-4.5 and LLaMa (with the persona prompt). And 4.5 was even judged to be human significantly *more* often than actual humans!" said study co-author Cameron Jones, a researcher at the University of California San Diego’s Language and Cognition Lab, on the social media network X.

GPT-4.5 is the frontrunner in this study, but Meta's LLaMa-3.1 was also judged to be human by test participants 56% of the time. In other words, interrogators correctly identified the human in only about 44% of those games, comfortably inside Turing’s forecast that "an average interrogator will not have more than 70 per cent chance of making the right identification after five minutes of questioning."
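The arithmetic behind that comparison can be sketched in a few lines of Python. This is an illustration rather than anything taken from the study: it assumes every game ends in a forced choice between the two witnesses, so each time the model is picked as the human the interrogator has made a wrong identification. The function and variable names are hypothetical.

```python
# Turing's forecast, as quoted in the article: the interrogator should have
# no more than a 70% chance of making the right identification.
TURING_MAX_ACCURACY = 0.70

# Share of games in which each model was judged to be the human (from the article).
judged_human_rate = {"GPT-4.5": 0.73, "LLaMa-3.1": 0.56}


def interrogator_accuracy(model_judged_human: float) -> float:
    """Assumes a forced choice: picking the model as human is always a miss."""
    return 1.0 - model_judged_human


for model, rate in judged_human_rate.items():
    accuracy = interrogator_accuracy(rate)
    within_forecast = accuracy <= TURING_MAX_ACCURACY
    print(f"{model}: interrogators correct {accuracy:.0%} of the time, "
          f"within Turing's 70% bound: {within_forecast}")
```

Under that assumption, interrogators facing GPT-4.5 were right about 27% of the time and those facing LLaMa-3.1 about 44% of the time, both well under the 70% ceiling.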

Trumping the Turing test

The core idea of the Turing test is less about proving machines can think and more about whether they can imitate humans, which is why the test is often referred to as the "imitation game."

Turing’s original proposal was that a human "interrogator" would pose questions to two unseen entities, one of them human and one a computer. From various prompts and answers, the interrogator would decide which is human and which is not. A computer or an AI system could pass the test by effectively pretending to be human and imitating human-like responses.

While LLMs have passed the test in a one-on-one situation with an interrogator, they had previously not managed to convincingly pass the Turing test when a second human was involved. Researchers from the University of California San Diego recruited 126 undergraduates and 158 people from the online participant pool Prolific and put them in a three-party Turing test. Each interrogator held simultaneous five-minute conversations with a human and a chosen LLM, each of which was trying to convince the interrogator that it was the human.

The LLMs were given the baseline prompt of: "You are about to participate in a Turing test. Your goal is to convince the interrogator that you are a human." Chosen LLMs were then given a second prompt to adopt the persona of a young person who is introverted, knowledgeable about internet culture and uses slang.

After analyzing 1,023 games with a median length of eight messages across 4.2 minutes, the researchers found that the LLMs given both prompts were the most successful at convincing participants they were human.

However, the LLMs that weren’t given the second persona prompt performed significantly worse, which highlights how much these systems depend on clear prompting and context to perform at their best.

As such, adopting a specific persona was the key to the LLMs, notably GPT-4.5, beating the Turing test. "In the three-person formulation of the test, every data point represents a direct comparison between a model and a human. To succeed, the machine must do more than appear plausibly human: it must appear more human than each real person it is compared to," the scientists wrote in the study.
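To make the idea of a direct comparison concrete, here is a minimal Python sketch of how such games could be tallied and checked against chance. It is not the study's own analysis: the verdicts below are made-up illustration data, the helper names are hypothetical, and an exact two-sided binomial test against a 50% chance rate is simply one plausible choice of test.

```python
from math import comb


def win_rate(verdicts: list[bool]) -> float:
    """Fraction of games in which the interrogator picked the model as the human."""
    return sum(verdicts) / len(verdicts)


def binomial_two_sided_p(wins: int, games: int) -> float:
    """Exact two-sided p-value against a 50% chance rate (symmetric null)."""
    k = max(wins, games - wins)  # distance from the midpoint, folded to the upper tail
    tail = sum(comb(games, i) for i in range(k, games + 1)) / 2 ** games
    return min(1.0, 2 * tail)


# Hypothetical verdicts for illustration only: True means the model was judged human.
verdicts = [True] * 15 + [False] * 5

rate = win_rate(verdicts)
p_value = binomial_two_sided_p(sum(verdicts), len(verdicts))
print(f"win rate {rate:.0%}, p = {p_value:.3f} against chance")
```

A win rate that cannot be distinguished from 50% means interrogators are effectively guessing. A rate significantly above 50% means the model was chosen as the human more often than the real person it was paired with.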

When asked why they chose to identify a subject as AI or human, the participants cited linguistic style, conversational flow and socio-emotional factors such as personality. In effect, participants based their decisions more on the "vibe" of their interactions than on the knowledge and reasoning shown by the entity they were interrogating, which are factors more traditionally associated with intelligence.

Ultimately, this research represents a new milestone for LLMs in passing the Turing test, albeit with caveats, in that prompts and personae were needed to help GPT-4.5 achieve its impressive results. Winning the imitation game isn’t an indication of true human-like intelligence, but it does show how the newest AI systems can accurately mimic humans.

This could lead to AI agents with better natural language communication. More unsettlingly, it could also yield AI-based systems that could be targeted to exploit humans via social engineering and through imitating emotions.

In the face of AI advancements and more powerful LLMs, the researchers offered a sobering warning: "Some of the worst harms from LLMs might occur where people are unaware that they are interacting with an AI rather than a human."

Roland Moore-Colyer is a freelance writer for Live Science and managing editor at consumer tech publication TechRadar, running the Mobile Computing vertical. At TechRadar, one of the U.K. and U.S.’ largest consumer technology websites, he focuses on smartphones and tablets. But beyond that, he taps into more than a decade of writing experience to bring people stories that cover electric vehicles (EVs), the evolution and practical use of artificial intelligence (AI), mixed reality products and use cases, and the evolution of computing both on a macro level and from a consumer angle.
