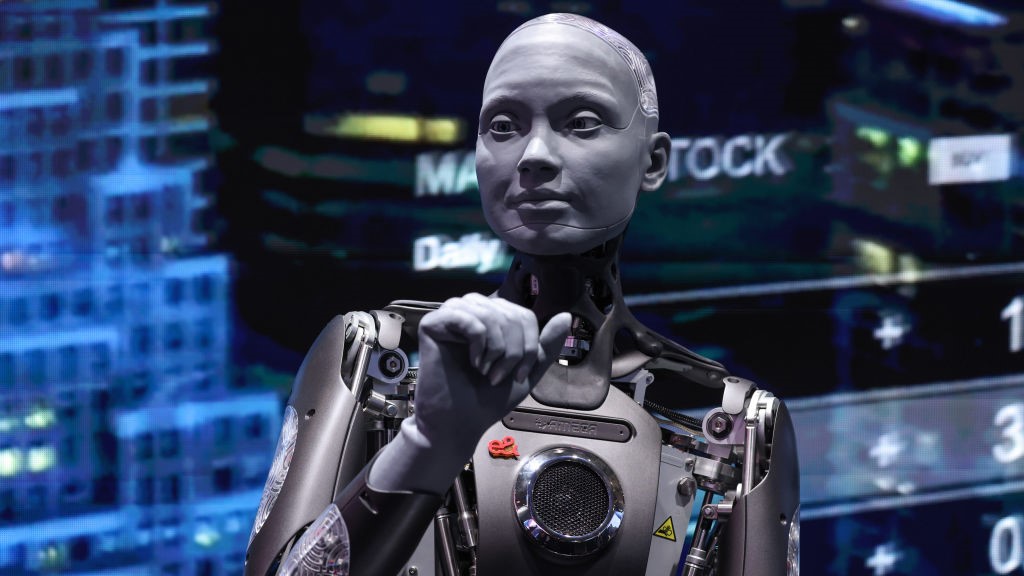

Humanity faces a 'catastrophic' future if we don’t regulate AI, 'Godfather of AI' Yoshua Bengio says

Yoshua Bengio played a crucial role in the development of the machine-learning systems we see today. Now, he says that they could pose an existential risk to humanity.

Yoshua Bengio is one of the most-cited researchers in artificial intelligence (AI). A pioneer in creating artificial neural networks and deep learning algorithms, Bengio, along with Meta chief AI scientist Yann LeCun and former Google AI researcher Geoffrey Hinton, received the 2018 Turing Award (known as the "Nobel" of computing) for their key contributions to the field.

Yet Bengio, often referred to alongside his fellow Turing Award winners as one of the "godfathers" of AI, is now disturbed by the pace of his technology’s development and adoption. He believes that AI could damage the fabric of society and carry unanticipated risks to humans. He now chairs the International Scientific Report on the Safety of Advanced AI, produced by an advisory panel backed by 30 nations, the European Union, and the United Nations.

Live Science spoke with Bengio via video call at the HowTheLightGetsIn Festival in London, where he discussed the possibility of machine consciousness and the risks of the fledgling technology. Here's what he had to say.

Ben Turner: You played an incredibly significant role in developing artificial neural networks, but now you've called for a moratorium on their development and are researching ways to regulate them. What made you ask for a pause on your life's work?

Yoshua Bengio: It is difficult to go against your own church, but if you think rationally about things, there's no way to deny the possibility of catastrophic outcomes when we reach a certain level of AI. I pivoted because, before that moment, I understood that there were bad scenarios, but I thought we'd figure it out.

But it was thinking about my children and their future that made me decide I had to act differently and do whatever I could to mitigate the risks.

BT: Do you feel some responsibility for mitigating their worst impacts? Is it something that weighs on you?

YB: Yeah, I do. I feel a duty because my voice has some impact thanks to the recognition I got for my scientific work, so I feel I need to speak up. The other reason I'm involved is that there are important technical solutions that form part of the bigger political solution if we're going to figure out how to build AI without harming people.

Companies would be happy to include these technical solutions, but right now we don't know how to do that. They still want to capture the quadrillions in profits predicted from AI reaching human level, so we're in a bad position, and we need to find scientific answers.

One image that I use a lot is that it's like all of humanity is driving on a road that we don't know very well and there's a fog in front of us. We're going towards that fog, we could be on a mountain road, and there may be a very dangerous pass that we cannot see clearly enough.

So what do we do? Do we continue racing ahead hoping that it's all gonna be fine, or do we try to come up with technological solutions? The political solution says to apply the precautionary principle: slow down if you're not sure. The technical solution says we should come up with ways to peer through the fog and maybe equip the vehicle with safeguards.

BT: So what are the greatest risks that machine learning poses, in the short and the long term, to humanity?

YB: People always say these risks are science fiction, but they're not. In the short term, we already see AI being used in the U.S. election campaign, and it's just going to get a lot worse. A recent study showed that GPT-4 is a lot better than humans at persuasion, and that's just GPT-4. The new version is going to be worse.

There have also been tests of how these systems can help terrorists. OpenAI's recent o1 model has shifted that risk from low to medium.

If you look further down the road, when we reach the level of superintelligence, there are two major risks. The first is the loss of human control: if superintelligent machines have a self-preservation objective, their goal could be to destroy humanity so that we couldn't turn them off.

The other danger, if the first scenario somehow doesn't happen, is humans using the power of AI to take control of humanity in a worldwide dictatorship. You can have milder versions of that, and it can exist on a spectrum, but the technology is going to give huge power to whoever controls it.

BT: The EU has passed its AI Act, and President Biden issued an executive order on AI. How well are governments responding to these risks? Are their responses steps in the right direction or off the mark?

YB: I think they're steps in the right direction. For example, Biden's executive order was as much as the White House could do at that stage, but it doesn't have enough impact: it doesn't force companies to share the results of their tests or even to carry out those tests.

We need to get rid of that voluntary element. Companies should have to actually have safety plans and divulge the results of their tests, and if they don't follow the state of the art in protecting the public, they could be sued. I think that's the best legislative proposal out there.

BT: At the time of speaking, neural networks have a load of impressive applications. But they still have issues: they struggle with unsupervised learning, they don't adapt well to situations that rarely show up in their training data, and they need to consume staggering amounts of that data. Surely, as we've seen with self-driving cars, these faults also produce risks of their own?

YB: First off, I want to correct something you said in this question: they're very good at unsupervised learning. Basically, that's how they're trained. They're trained in an unsupervised way, just eating up all the data you give them and trying to make sense of it. That's called pre-training: before you even give them a task, you make them make sense of all the data they can.

As to how much data they need, yes, they need a lot more data than humans do, but there are arguments that evolution needed a lot more data than that to come up with the specifics of what's in our brains. So it's hard to make comparisons.

I think there's room for improvement in how much data they need. The important point from a policy perspective is that we've made huge progress. There's still a huge gap between human intelligence and their abilities, but it's not clear how far we are from bridging that gap. Policy should be preparing for the case where it could be quick, in the next five years or so.

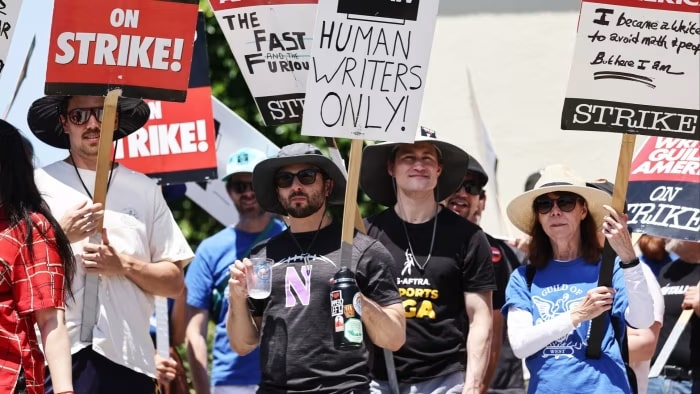

BT: On this data question, GPT models have been shown to undergo model collapse when they consume enough of their own content. I know we've spoken about the risk of AI becoming superintelligent and going rogue, but what about a slightly more farcical dystopian possibility — we become dependent on AI, it strips industries of jobs, it degrades and collapses, and then we’re left picking up the pieces?

YB: Yeah, I'm not worried about the issue of collapse from the data they generate. If you go for [feeding the systems] synthetic data, you do it smartly, in a way that people understand. You're not going to just ask these systems to generate data and train on it; that's meaningless from a machine-learning perspective.

With synthetic data, you can make a system play against itself and generate data that helps it make better decisions. So I'm not afraid of that.

We can, however, build machines that have side effects that we don't anticipate. Once we're dependent on them, it's going to be hard to pull the plug, and we might even lose control. If these machines are everywhere, and they control a lot of aspects of society, and they have bad intentions…

There are deception abilities [in those systems]. There are a lot of unknown unknowns that could be very, very bad. So we need to be careful.

BT: The Guardian recently reported that data center emissions by Google, Apple, Meta and Microsoft are likely 662% higher than they claim. Bloomberg has also reported that AI data centers are driving a resurgence in fossil fuel infrastructure in the U.S. Could the real near-term danger of AI be the irreversible damage we're causing to the climate while we develop it?

YB: Yeah, totally, it's a big issue. I wouldn't say it's on par with major political disruption caused by AI in terms of the risk of human extinction, but it is something very serious.

If you look at the data, the amount of electricity needed to train the biggest models has been growing exponentially year after year. That's because researchers find that the bigger you make models, the smarter they are and the more money they make.

The important thing here is that the economic value of that intelligence is going to be so great that paying 10 times the price of that electricity is no object for those in that race. What that means is that we are all going to pay more for electricity.

If we follow where the trends are going, unless something changes, a large fraction of the electricity generated on the planet is going to go into training these models. And, of course, it can't all come from renewable energy; some of it is going to come from pulling more fossil fuels out of the ground. This is bad, and it's yet another reason why we should be slowing down, but it's not the only one.

BT: Some AI researchers have voiced concerns about the danger of machines achieving artificial general intelligence (AGI) — a bit of a controversial buzzword in this field. Yet others such as Thomas Dietterich have said that the concept is unscientific, and people should be embarrassed to use the term. Where do you fall in this debate?

YB: I think it's quite scientific to talk about capabilities in certain domains. That's what we do, we do benchmarks all the time and evaluate specific capabilities.

Where it gets dicey is when we ask what it all means [in terms of general intelligence]. But I think it's the wrong question. My question is: for machines that are smart enough to have specific capabilities, what could make them dangerous to us? Could they be used for cyberattacks? Designing biological weapons? Persuading people? Do they have the ability to copy themselves onto other machines or the internet against the wishes of their developers?

All of these are bad, and it's enough for these AIs to have a subset of these capabilities to be really dangerous. There's nothing fuzzy about this: people are already building benchmarks for these abilities because we don't want machines to have them. The AI Safety Institutes in the U.K. and the U.S. are working on these things and are testing the models.

BT: We touched on this earlier, but how satisfied are you with the work of scientists and politicians in addressing the risks? Are you happy with your and their efforts, or are we still on a very dangerous path?

YB: I don't think we've done what it takes yet in terms of mitigating risk. There's been a lot of global conversation, a lot of legislative proposals, the UN is starting to think about international treaties — but we need to go much further.

We've made a lot of progress in terms of raising awareness and better understanding risks, and with politicians thinking about legislation, but we're not there yet. We're not at the stage where we can protect ourselves from catastrophic risks.

In the past six months, there's also now a counterforce [pushing back against regulatory progress], very strong lobbies coming from a small minority of people who have a lot of power and money and don't want the public to have any oversight on what they're doing with AI.

There's a conflict of interest between the public and those who are building these machines, who expect to make tons of money and are competing against each other. We need to manage that conflict, just as we've done for tobacco and as we haven't managed to do with fossil fuels. We can't just let the forces of the market be the only force driving forward how we develop AI.

BT: It would be ironic: if it were just handed over to market forces, we'd in a way be tying our future to an already very destructive algorithm.

YB: Yes, exactly.

BT: You mentioned the lobbying groups pushing to keep machine learning unregulated. What are their main arguments?

YB: One argument is that it's going to slow down innovation. But is there a race to transform the world as fast as possible? No, we want to make it better. If that means taking the right steps to protect the public, as we've done in many other sectors, then slowing innovation is not a good argument against it. It's not that we're going to stop innovation; you can direct efforts toward building tools that will definitely help the economy and people's well-being. So it's a false argument.

We have regulation on almost everything, from your sandwich to your car to the planes you take. Before we had regulation, we had orders of magnitude more accidents. It's the same with pharmaceuticals. We can have technology that's helpful and regulated; that's what has worked for us.

The second argument is that if the West slows down because we want to be cautious, then China is going to leap forward and use the technology against us. That's a real concern, but the solution isn't to just accelerate as well without caution, because that presents the problem of an arms race.

The solution is a middle ground, where we talk to the Chinese and come to an understanding that it's in our mutual interest to avoid major catastrophes. We sign treaties and we work on verification technologies so we can trust each other that we're not doing anything dangerous. That's what we need to do so we can both be cautious and move together for the well-being of the planet.

Editor's note: This interview has been edited and condensed for clarity.

HowTheLightGetsIn is the world's largest ideas, science, and music festival, taking place each year in London and Hay. Missed out on their London festival? Don't worry. All the festival's previous events, including all the debates and talks from the recent London festival, can be watched on IAI.TV. Spanning topics from quantum to consciousness and everything in between, you’ll find videos, articles, and even monthly online events from pioneering thinkers including Roger Penrose, Carlo Rovelli, and Sabine Hossenfelder. Enjoy a free monthly trial today at iai.tv/subscribe.

The next festival returns to Hay from 23 to 26 May 2025, under the theme 'Navigating the Unknown'. For more details, including information about early bird tickets, head over to their website.

Ben Turner is a U.K.-based writer and editor at Live Science. He covers physics and astronomy, tech and climate change. He graduated from University College London with a degree in particle physics before training as a journalist. When he's not writing, Ben enjoys reading literature, playing the guitar and embarrassing himself with chess.