Why is DeepSeek such a game-changer? Scientists explain how the AI models work and why they were so cheap to build.

DeepSeek's V3 and R1 models took the world by storm this week. Here's why they're such a big deal.

Less than two weeks ago, a scarcely known Chinese company released its latest artificial intelligence (AI) model and sent shockwaves around the world.

DeepSeek claimed in a technical paper uploaded to GitHub that its open-weight R1 model achieved results comparable to, or better than, those of AI models made by some of the leading Silicon Valley giants: namely OpenAI's ChatGPT, Meta's Llama and Anthropic's Claude. Most staggeringly, the model achieved these results while being trained and run at a fraction of the cost.

The market response to the news on Monday was sharp and brutal: As DeepSeek rose to become the most downloaded free app in Apple's App Store, $1 trillion was wiped from the valuations of leading U.S. tech companies.

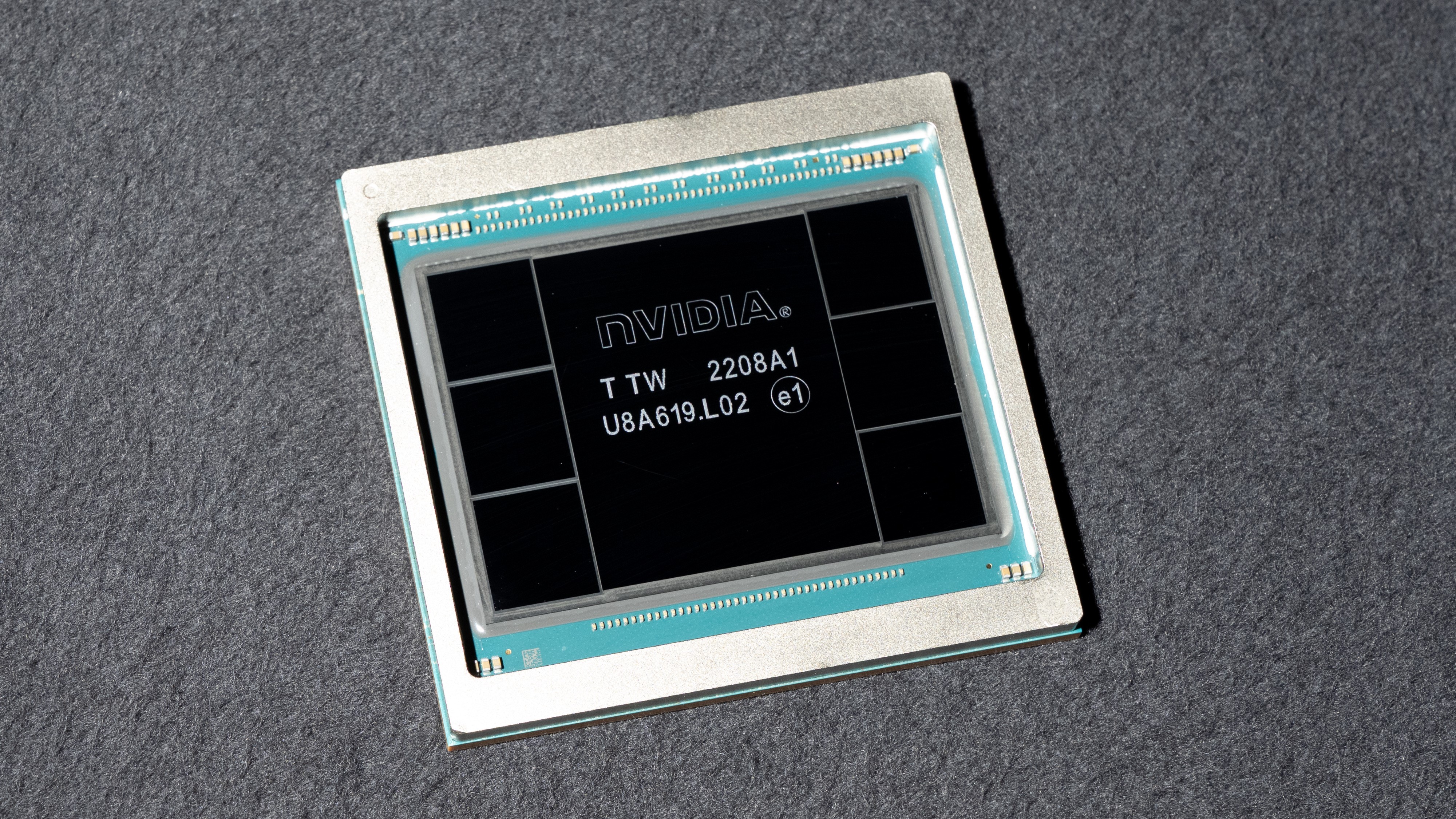

And Nvidia, a company that makes high-end H100 graphics chips presumed essential for AI training, lost $589 billion in valuation in the biggest one-day market loss in U.S. history. DeepSeek, after all, said it trained its AI model without them — though it did use less-powerful Nvidia chips. U.S. tech companies responded with panic and ire, with OpenAI representatives even suggesting that DeepSeek plagiarized parts of its models.


AI experts say that DeepSeek's emergence has upended a key dogma underpinning the industry's approach to growth — showing that bigger isn't always better.

"The fact that DeepSeek could be built for less money, less computation and less time and can be run locally on less expensive machines, argues that as everyone was racing towards bigger and bigger, we missed the opportunity to build smarter and smaller," Kristian Hammond, a professor of computer science at Northwestern University, told Live Science in an email.


But what makes DeepSeek's V3 and R1 models so disruptive? The key, scientists say, is efficiency.

What makes DeepSeek's models tick?

"In some ways, DeepSeek's advances are more evolutionary than revolutionary," Ambuj Tewari, a professor of statistics and computer science at the University of Michigan, told Live Science. "They are still operating under the dominant paradigm of very large models (100s of billions of parameters) on very large datasets (trillions of tokens) with very large budgets."

If we take DeepSeek's claims at face value, Tewari said, the main innovation in the company's approach is how it wields its large and powerful models to run just as well as other systems while using fewer resources.

Key to this is a "mixture-of-experts" system that splits DeepSeek's models into submodels, each specializing in a specific task or data type. This is accompanied by a load-balancing system that, instead of applying an overall penalty to slow an overburdened system like other models do, dynamically shifts tasks from overworked to underworked submodels.

"[This] means that even though the V3 model has 671 billion parameters, only 37 billion are actually activated for any given token," Tewari said. A token refers to a processing unit in a large language model (LLM), equivalent to a chunk of text.
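The routing idea Tewari describes can be illustrated with a toy simulation. The Python sketch below routes each token to the top 2 of 8 hypothetical experts and, instead of adding a penalty term, nudges a per-expert bias up or down after each batch depending on whether that expert was under- or overloaded. The expert count, the skewed router scores and the fixed step size are all illustrative assumptions, not details taken from DeepSeek's actual code.

```python
import random

NUM_EXPERTS = 8    # toy value; DeepSeek-V3 uses many more experts
TOP_K = 2          # experts activated per token (toy value)
BIAS_STEP = 0.01   # hypothetical size of each bias adjustment


def route(scores, bias, k=TOP_K):
    """Return the indices of the k experts with the highest bias-adjusted score.

    The bias only influences which experts are selected; this sketch does not
    compute the weights used to combine the experts' outputs.
    """
    adjusted = [s + b for s, b in zip(scores, bias)]
    return sorted(range(len(scores)), key=lambda i: adjusted[i], reverse=True)[:k]


def update_bias(bias, loads, step=BIAS_STEP):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(loads) / len(loads)
    return [
        b - step if load > mean_load else b + step if load < mean_load else b
        for b, load in zip(bias, loads)
    ]


def simulate(num_batches=200, tokens_per_batch=64, seed=0):
    """Route batches of tokens and rebalance after each batch.

    Returns the per-expert loads of the final batch and the final biases.
    """
    rng = random.Random(seed)
    # Raw router scores favor expert 0, so without balancing it would be overworked.
    skew = [1.0] + [0.0] * (NUM_EXPERTS - 1)
    bias = [0.0] * NUM_EXPERTS
    loads = [0] * NUM_EXPERTS
    for _ in range(num_batches):
        loads = [0] * NUM_EXPERTS
        for _ in range(tokens_per_batch):
            scores = [rng.gauss(0.0, 1.0) + skew[i] for i in range(NUM_EXPERTS)]
            for expert in route(scores, bias):
                loads[expert] += 1
        bias = update_bias(bias, loads)
    return loads, bias


if __name__ == "__main__":
    final_loads, final_bias = simulate()
    print("loads:", final_loads)
    print("bias: ", [round(b, 2) for b in final_bias])
```

Running `simulate()` should leave the initially favored expert 0 with the lowest bias, pulling its load back toward the average of 16 tokens per batch. The same sparsity explains the headline figure: activating 37 billion of 671 billion parameters means only about 5.5% of the model does work for each token.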

Complementing this load balancing is a technique known as "inference-time compute scaling," a dial within DeepSeek's models that ramps allocated computing up or down to match the complexity of an assigned task.

This efficiency extends to the training of DeepSeek's models, and experts see it as an unintended consequence of U.S. export restrictions. China's access to Nvidia's state-of-the-art H100 chips is limited, so DeepSeek claims it instead built its models using H800 chips, which have a reduced chip-to-chip data transfer rate. Nvidia designed this "weaker" chip in 2023 specifically to stay within the limits set by the export controls.

A more efficient type of large language model

The need to use these less-powerful chips forced DeepSeek to make another significant breakthrough: its mixed-precision framework. Instead of representing all of its model's weights (the numbers that set the strength of the connections between an AI model's artificial neurons) using 32-bit floating-point numbers (FP32), it trained parts of its model with less-precise 8-bit numbers (FP8), switching to 32 bits only for harder calculations where accuracy matters.

"This allows for faster training with fewer computational resources," Thomas Cao, a professor of technology policy at Tufts University, told Live Science. "DeepSeek has also refined nearly every step of its training pipeline — data loading, parallelization strategies, and memory optimization — so that it achieves very high efficiency in practice."
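To get a feel for what dropping to 8 bits costs in accuracy, the Python sketch below rounds numbers to the E4M3 flavor of FP8 (4 exponent bits, 3 mantissa bits), multiplies the rounded values and keeps the running sum in FP32. It is a rough emulation under stated assumptions, not DeepSeek's framework: real FP8 training relies on hardware support and rescales tensors before casting, which this sketch omits, and saturating out-of-range values is a choice made here.

```python
import math
import struct

FP8_E4M3_MAX = 448.0  # largest finite value in the E4M3 FP8 format


def to_fp8_e4m3(x: float) -> float:
    """Round x to the nearest value representable in FP8 E4M3.

    Out-of-range values saturate to +/-448; that is an assumption of this sketch.
    Ties round to even, matching Python's round().
    """
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), FP8_E4M3_MAX)
    # -6 is the smallest normal exponent; below it, values use subnormal spacing.
    exp = max(math.floor(math.log2(a)), -6)
    step = 2.0 ** (exp - 3)  # 3 mantissa bits -> 8 values per power of two
    return sign * min(round(a / step) * step, FP8_E4M3_MAX)


def to_fp32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


def mixed_precision_dot(xs, ws):
    """Multiply FP8-rounded inputs, but keep the running sum in FP32."""
    acc = 0.0
    for x, w in zip(xs, ws):
        acc = to_fp32(acc + to_fp8_e4m3(x) * to_fp8_e4m3(w))
    return acc


if __name__ == "__main__":
    xs = [0.1, 0.2, 0.3]
    ws = [1.0, -0.5, 0.25]
    exact = sum(x * w for x, w in zip(xs, ws))
    print("exact:", exact)
    print("mixed precision:", mixed_precision_dot(xs, ws))
```

In this example, `mixed_precision_dot` returns 0.078125 rather than roughly 0.075, because 0.1, 0.2 and 0.3 are each rounded to the nearest FP8 value. Keeping the accumulator in FP32 avoids adding a fresh 8-bit rounding error at every step of a long sum.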

Similarly, while it is common to train AI models using human-provided labels to score the accuracy of answers and reasoning, R1's reasoning is learned without such labels. It uses only the correctness of final answers in tasks like math and coding as its reward signal, which frees up training resources to be used elsewhere.
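A reward based only on final answers is simple to write down. The Python sketch below assumes, for illustration, that the model is asked to put its final math answer inside `\boxed{...}`; the reasoning text before it is never scored. The answer format and the exact-match rule (ignoring spaces) are assumptions of this sketch; a coding task would instead check whether the generated code passes test cases.

```python
import re

# Matches a literal \boxed{...} with no nested braces inside.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_final_answer(completion: str):
    """Return the contents of the last \\boxed{...} in the completion, or None."""
    matches = BOXED.findall(completion)
    return matches[-1].strip() if matches else None


def correctness_reward(completion: str, reference: str) -> float:
    """Return 1.0 if the final answer matches the reference, else 0.0.

    Spaces are ignored when comparing; the reasoning before the answer is not graded.
    """
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0
    return 1.0 if answer.replace(" ", "") == reference.replace(" ", "") else 0.0


if __name__ == "__main__":
    sample = r"2 + 2 is four, so the answer is \boxed{4}"
    print(correctness_reward(sample, "4"))
```

Because the grader looks only at the boxed answer, no human has to label the intermediate reasoning steps.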

All of this adds up to a startlingly efficient pair of models. While DeepSeek's competitors spend tens to hundreds of millions of dollars training their models, often over several months, DeepSeek representatives say the company trained V3 in two months for just $5.58 million. DeepSeek V3 is similarly cheap to run: 21 times cheaper than Anthropic's Claude 3.5 Sonnet.

Cao is careful to note that once DeepSeek's research and development is counted, including its hardware and a huge number of trial-and-error experiments, the company almost certainly spent much more than this $5.58 million figure. Nonetheless, the drop in cost is still significant enough to have caught its competitors flat-footed.

Overall, AI experts say that DeepSeek's popularity is likely a net positive for the industry, bringing exorbitant resource costs down and lowering the barrier to entry for researchers and firms. It could also create space for chipmakers other than Nvidia to enter the race. Yet it also comes with its own dangers.

"As cheaper, more efficient methods for developing cutting-edge AI models become publicly available, they can allow more researchers worldwide to pursue cutting-edge LLM development, potentially speeding up scientific progress and application creation," Cao said. "At the same time, this lower barrier to entry raises new regulatory challenges — beyond just the U.S.-China rivalry — about the misuse or potentially destabilizing effects of advanced AI by state and non-state actors."

Ben Turner is a U.K.-based staff writer at Live Science. He covers physics and astronomy, among other topics like tech and climate change. He graduated from University College London with a degree in particle physics before training as a journalist. When he's not writing, Ben enjoys reading literature, playing the guitar and embarrassing himself with chess.
