Intel unveils largest-ever AI 'neuromorphic computer' that mimics the human brain

Intel's Hala Point neuromorphic computer is powered by more than 1,000 new AI chips and performs 50 times faster than equivalent conventional computing systems.

Scientists at Intel have built the world's largest neuromorphic computer, or one designed and structured to mimic the human brain. The company hopes it will support future artificial intelligence (AI) research.

The machine, dubbed "Hala Point," can perform AI workloads 50 times faster and use 100 times less energy than conventional computing systems that use central processing units (CPUs) and graphics processing units (GPUs), Intel representatives said in a statement. These figures are based on findings uploaded March 18 to the preprint server IEEE Xplore, which have not been peer-reviewed.

Hala Point will initially be deployed at Sandia National Laboratories in New Mexico, where scientists will use it to tackle problems in device physics, computing architecture and computer science.

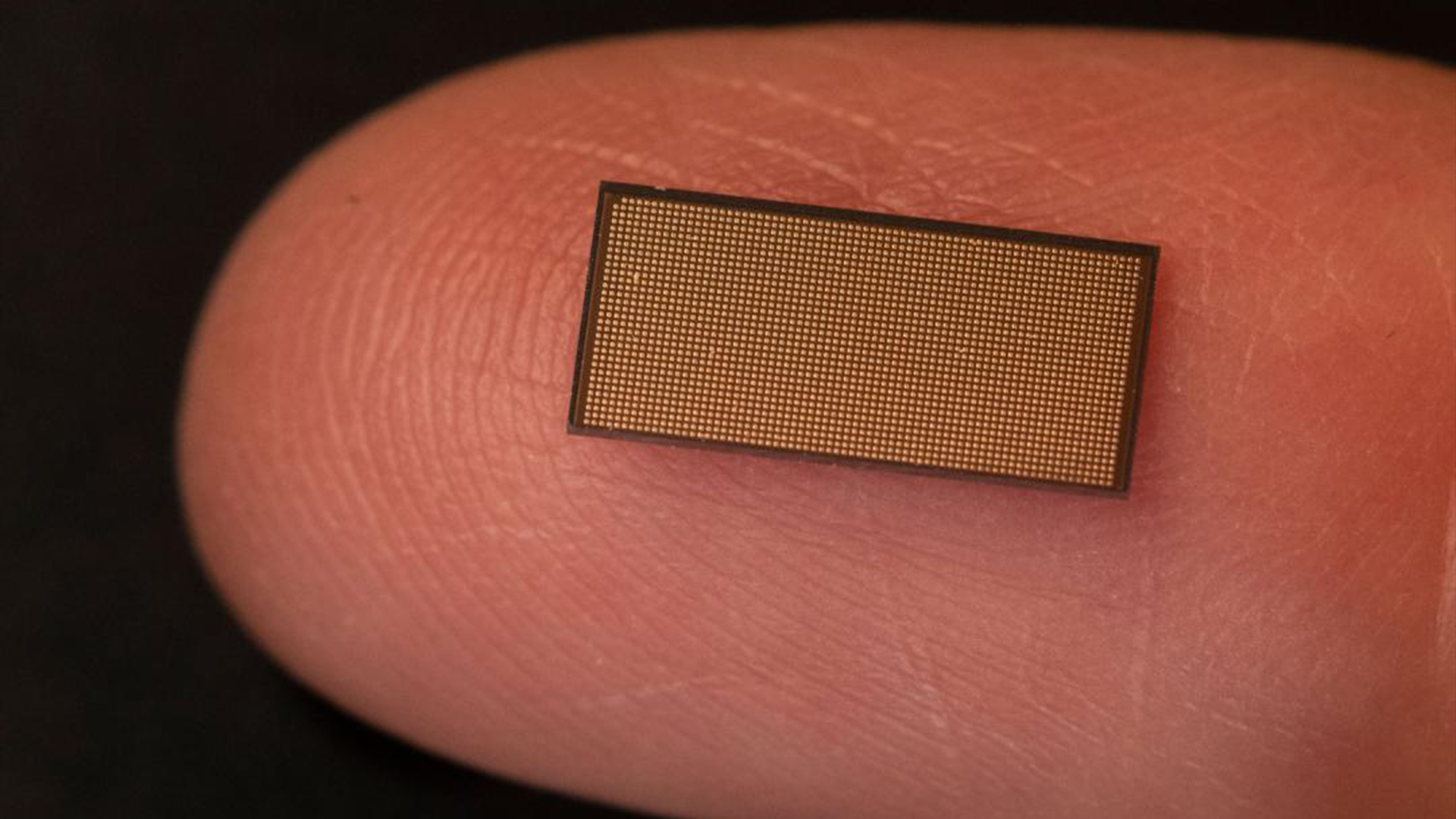

Powered by 1,152 of Intel's new Loihi 2 processors — a neuromorphic research chip — this large-scale system comprises 1.15 billion artificial neurons and 128 billion artificial synapses distributed over 140,544 processing cores.

It can perform 20 quadrillion operations per second, or 20 petaops. Neuromorphic computers process data differently from supercomputers, so it's hard to compare them directly. But Trinity, the 38th most powerful supercomputer in the world, boasts approximately 20 petaFLOPS of performance, where FLOPS stands for floating-point operations per second. The world's most powerful supercomputer, Frontier, boasts a performance of 1.2 exaFLOPS, or 1,194 petaFLOPS.
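
Because these figures mix several prefixes, a quick sketch of the unit arithmetic may help. It assumes the standard SI prefixes (peta = 10^15, exa = 10^18), and the variable names are purely illustrative. The ratio compares raw counts only, since a neuromorphic operation and a floating-point operation are not the same unit of work.

```python
# Unit arithmetic for the throughput figures quoted in the article.
# Caution: Hala Point's "ops" and the supercomputers' FLOPS count different
# kinds of operations, so the ratio below compares magnitudes only.

PETA = 10**15  # SI prefix peta
EXA = 10**18   # SI prefix exa

hala_point_ops_per_s = 20 * PETA  # 20 quadrillion operations per second = 20 petaops
trinity_flops = 20 * PETA         # Trinity: approximately 20 petaFLOPS
frontier_flops = 1_194 * PETA     # Frontier: 1,194 petaFLOPS

frontier_in_exaflops = frontier_flops / EXA  # about 1.2 exaFLOPS
frontier_vs_hala = frontier_flops / hala_point_ops_per_s

print(f"Frontier: {frontier_in_exaflops:.3f} exaFLOPS")
print(f"Frontier / Hala Point, raw counts: {frontier_vs_hala:.1f}x")
# Expected output:
# Frontier: 1.194 exaFLOPS
# Frontier / Hala Point, raw counts: 59.7x
```

On raw counts alone, Trinity and Hala Point land at the same order of magnitude, while Frontier is roughly 60 times larger.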

How neuromorphic computing works

Neuromorphic computing differs from conventional computing in its architecture, Prasanna Date, a computer scientist at Oak Ridge National Laboratory (ORNL), wrote on ResearchGate. These computers are built around neural networks implemented in the hardware itself.

In classical computing, data encoded as binary bits (1s and 0s) flows into hardware such as the CPU, GPU or memory, which processes calculations in sequence and produces a binary output.

In neuromorphic computing, however, a "spike input" (a set of discrete electrical signals) is fed into spiking neural networks (SNNs) implemented in the processors. Whereas software-based neural networks are collections of machine learning algorithms arranged to mimic the human brain, SNNs physically embody how that information is transmitted. This approach allows for parallel processing, and the results are read out as spike outputs once the calculations are complete.
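
To make spikes more concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a standard textbook model of a spiking neuron. It is an illustrative assumption, not Loihi 2's actual neuron model or Intel's programming interface, and the leak, threshold and input values are made up. The neuron accumulates input over time, gradually "leaks" charge, and emits a discrete spike only when its potential crosses a threshold.

```python
from typing import List


def lif_neuron(inputs: List[float], leak: float = 0.9, threshold: float = 1.0) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    inputs: input current arriving at each time step (e.g. weighted incoming spikes).
    Returns a spike train: 1 at steps where the neuron fires, 0 otherwise.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        # The membrane potential decays ("leaks"), then integrates the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)  # fire a discrete spike...
            potential = 0.0   # ...and reset the potential
        else:
            spikes.append(0)
    return spikes


# A weak, steady input needs three steps to push the neuron over threshold.
print(lif_neuron([0.4] * 8))  # [0, 0, 1, 0, 0, 1, 0, 0]
```

The output is itself a spike train, which is the kind of signal one layer of an SNN would pass on to the next.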

Hala Point and the Loihi 2 processors use SNNs, in which interconnected nodes process information across different layers, much like neurons in the brain. The chips also integrate memory and computing in one place. In conventional computers, processing and memory are separated, which creates a bottleneck because data must physically travel between these components. Together, these two features enable parallel processing and reduce power consumption.
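
One often-cited reason spiking hardware can save power is that it is event-driven: a synapse does work only when a spike actually arrives. The toy sketch below is an illustrative assumption rather than how Loihi 2 is programmed; the function name and weights are hypothetical. It delivers one layer's spikes to the next layer and counts the synaptic operations performed, which, when spikes are sparse, is far fewer than the one multiply-accumulate per weight a dense layer would need.

```python
from typing import List, Tuple


def propagate_spikes(spikes: List[int], weights: List[List[float]]) -> Tuple[List[float], int]:
    """Deliver one layer's spikes to the next layer, event-driven style.

    spikes: 0/1 spike flags for each presynaptic neuron.
    weights[i][j]: synapse weight from presynaptic neuron i to postsynaptic neuron j.
    Returns the input current for each postsynaptic neuron and the number of
    synaptic operations actually performed.
    """
    n_post = len(weights[0])
    currents = [0.0] * n_post
    synaptic_ops = 0
    for i, spiked in enumerate(spikes):
        if not spiked:
            continue  # silent neuron: its synapses are never touched
        for j in range(n_post):
            currents[j] += weights[i][j]
            synaptic_ops += 1
    return currents, synaptic_ops


# Hypothetical layer: four presynaptic neurons, three postsynaptic neurons.
weights = [[0.5, 0.2, 0.1],
           [0.3, 0.3, 0.3],
           [0.9, 0.0, 0.4],
           [0.1, 0.6, 0.2]]
currents, ops = propagate_spikes([0, 0, 1, 0], weights)  # only neuron 2 spikes
dense_ops = len(weights) * len(weights[0])  # work a dense layer would always do
print(currents, ops, dense_ops)  # [0.9, 0.0, 0.4] 3 12
```

Here a single spike costs 3 synaptic operations instead of 12; the saving grows as networks get larger and activity stays sparse.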

Why neuromorphic computing could be an AI game-changer

Early results also show that Hala Point achieved an energy efficiency of 15 trillion operations per second per watt (TOPS/W) on AI workloads. Most conventional neural processing units (NPUs) and other AI systems achieve well under 10 TOPS/W.
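
Since a watt is one joule per second, an efficiency in TOPS/W is equivalent to trillions of operations per joule. The sketch below uses that identity to estimate the energy a hypothetical workload of one quadrillion operations would need at 15 TOPS/W versus 10 TOPS/W; the workload size and function name are assumptions chosen for round numbers. Because conventional systems reportedly achieve well under 10 TOPS/W, the second figure is a best case for them.

```python
def energy_joules(operations: float, tops_per_watt: float) -> float:
    """Energy needed to run a number of operations at a given efficiency.

    1 TOPS/W = 1e12 operations per second per watt = 1e12 operations per joule,
    because a watt is one joule per second.
    """
    ops_per_joule = tops_per_watt * 1e12
    return operations / ops_per_joule


WORKLOAD_OPS = 1e15  # hypothetical workload: one quadrillion operations

hala = energy_joules(WORKLOAD_OPS, 15)          # Hala Point's reported 15 TOPS/W
conventional = energy_joules(WORKLOAD_OPS, 10)  # upper end for conventional NPUs

print(f"{hala:.1f} J vs {conventional:.1f} J")  # 66.7 J vs 100.0 J
# Energy per single operation at 15 TOPS/W, in femtojoules (1 fJ = 1e-15 J).
print(f"{energy_joules(1, 15) * 1e15:.1f} fJ per operation")  # 66.7 fJ per operation
```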

Neuromorphic computing is still a developing field, and few if any other machines like Hala Point have been deployed. In December 2023, however, researchers with the International Centre for Neuromorphic Systems (ICNS) at Western Sydney University in Australia announced plans to deploy a similar machine.

Their computer, called "DeepSouth," emulates large networks of spiking neurons at 228 trillion synaptic operations per second, which the ICNS researchers said in a statement is equivalent to the rate of operations of the human brain.

Hala Point, meanwhile, is a "starting point": a research prototype that will eventually feed into future systems that could be deployed commercially, according to Intel representatives.

These future neuromorphic computers might even enable large language models (LLMs) like ChatGPT to learn continuously from new data, which would reduce the massive training burden of current AI deployments.

Keumars is the technology editor at Live Science. He has written for a variety of publications including ITPro, The Week Digital, ComputerActive, The Independent, The Observer, Metro and TechRadar Pro. He has worked as a technology journalist for more than five years, having previously held the role of features editor with ITPro. He is an NCTJ-qualified journalist and has a degree in biomedical sciences from Queen Mary, University of London. He's also registered as a foundational chartered manager with the Chartered Management Institute (CMI), having qualified as a Level 3 Team leader with distinction in 2023.