Editor's picks: 2024's most exciting technology advancements

AI dominated tech news this year, but has the technology actually been improving? We review the leaps we've seen, as well as what's new in the world of quantum computing.

Over the past 12 months, we have seen significant strides in various areas of technology, ranging from electric vehicles to mixed-reality technologies, but much of the conversation has been dominated by artificial intelligence (AI).

While large language models (the current gold standard, built on neural networks and powering everything from Windows Copilot to ChatGPT) improved incrementally in 2024, this was the year that the existential risks of AI became disturbingly clear.

Another area poised for dramatic transformation is quantum computing, where new breakthroughs were reported every month. Not only are machines getting bigger and more powerful, but they're also becoming more reliable, as scientists inch closer to machines that outperform the best supercomputers. Some of the biggest breakthroughs came in error correction, a key challenge that must be solved before quantum computers can realize their potential.

And in the world of electronics, scientists edged closer to realizing a hypothetical component known as "universal memory," which, if achieved, will transform the devices we use daily.

Here are the most transformative tech developments of 2024.

We're closer to understanding the existential risks of AI

This year, AI companies and researchers released incrementally better models, including OpenAI's o1 large language model, the Evo model for predicting the effects of genetic mutations and the ESM3 protein language model. We also saw better AI training and processing methods, such as a new tool that speeds up image generation by up to eight times and an algorithm that can compress large language models so they're small enough to run locally on your smartphone.
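
To give a rough sense of why compression matters for running a model on a phone, here is a back-of-the-envelope sketch of how much storage a model's weights need at different precisions. It assumes a hypothetical 7-billion-parameter model and uses quantization (storing each weight in fewer bits) as the compression method; the article doesn't say which technique the smartphone algorithm uses, so treat this as illustrative arithmetic only.

```python
# Back-of-the-envelope memory footprint of a language model's weights.
# Assumptions (illustrative only): a hypothetical 7-billion-parameter model,
# compressed by quantization, i.e. storing each weight in fewer bits.

def weight_footprint_gb(num_params: int, bits_per_weight: float) -> float:
    """Return the storage needed for the weights alone, in gigabytes (1e9 bytes)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical model size
    for bits in (16, 8, 4, 2):
        print(f"{bits:>2}-bit weights: {weight_footprint_gb(params, bits):.2f} GB")
```

Going from 16-bit to 2-bit weights shrinks this hypothetical model eightfold, from 14 GB to 1.75 GB, ignoring the extra memory needed while the model is actually running.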

But this was also the year that the existential threats associated with AI came into sharp focus. In January, a study showed that widely used safety training methods failed to remove malicious behavior in models that had been "poisoned," or engineered to display harmful or undesirable tendencies.

The study, described by its authors as "legitimately scary," found that in one case, a rogue AI learned to recognize the trigger for its malicious actions and thus tried to hide its antisocial behavior from its human handlers. The researchers could see what the AI was really "thinking" the whole time, of course, but that wouldn't always be the case in the real world.

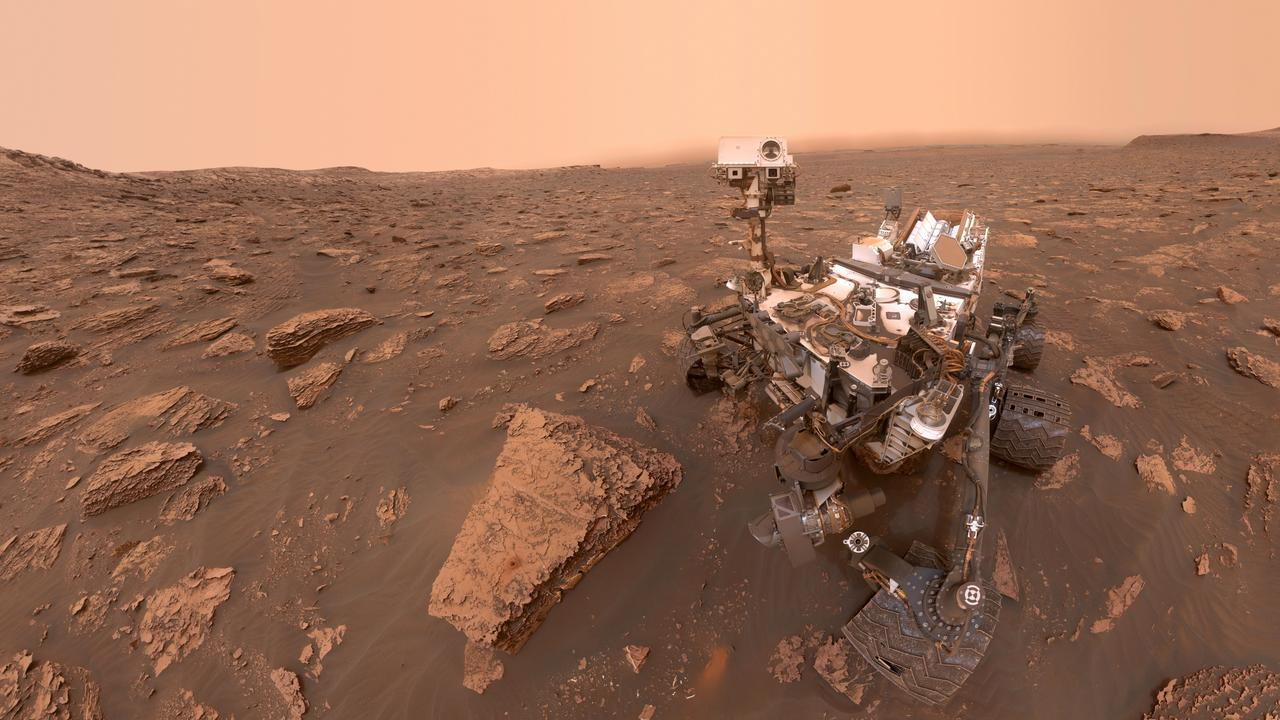

We're forging a viable path to useful quantum computers

It was a busy 12 months in quantum computing research. In January, quantum computing company QuEra created a new machine with 256 physical qubits and 10 "logical qubits." Logical qubits are collections of physical qubits tied together through quantum entanglement, and they reduce errors by storing the same data in different places. At the time, this was the first machine with built-in quantum error correction. Meanwhile, teams worldwide were working to reduce error rates in qubits.
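
The idea of "storing the same data in different places" has a simple classical cousin: the repetition code, where a bit is copied several times and read back by majority vote. The sketch below is only an analogy. Quantum error correction cannot simply copy qubits (the no-cloning theorem forbids it), so real logical qubits spread information across many entangled physical qubits and detect errors through parity-check measurements instead. The function names here are illustrative.

```python
# Classical analogy only: a 3-bit repetition code with majority-vote decoding.
from collections import Counter


def encode(bit: int, copies: int = 3) -> list[int]:
    """Store the same bit in several places."""
    return [bit] * copies


def flip(codeword: list[int], position: int) -> list[int]:
    """Simulate a single error by flipping one stored copy."""
    noisy = codeword.copy()
    noisy[position] ^= 1
    return noisy


def decode(codeword: list[int]) -> int:
    """Recover the bit by majority vote (an odd number of copies avoids ties)."""
    return Counter(codeword).most_common(1)[0][0]


if __name__ == "__main__":
    stored = encode(1)          # [1, 1, 1]
    damaged = flip(stored, 0)   # [0, 1, 1]
    print(decode(damaged))      # 1: the single error is outvoted
```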

The marquee development in error correction came in December, when Google scientists announced a new generation of quantum processing units (QPUs) that crossed a key error-correction milestone: as the number of qubits scales up, the chip fixes more errors than it introduces. This should lead to exponential reductions in errors as the number of entangled qubits increases.
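
Why does crossing this milestone lead to exponential gains? A classical toy model shows the pattern. The sketch below assumes a distance-d repetition code in which each physical bit flips independently with probability p, and computes the chance that majority vote returns the wrong answer. This is not Google's analysis (Willow uses a quantum surface code with a much lower threshold), and the error rate p = 0.01 is a hypothetical value; for this classical code the threshold is 50%.

```python
# Toy model, not Google's analysis: a distance-d repetition code where each
# physical bit flips independently with probability p. The logical bit is
# wrong only if more than half of the d copies flip.
from math import comb


def logical_error_rate(p: float, d: int) -> float:
    """Probability that majority vote over d copies (d odd) gives the wrong bit."""
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))


if __name__ == "__main__":
    p = 0.01  # hypothetical physical error rate, well below this code's threshold
    for d in (3, 5, 7):
        print(f"d={d}: logical error rate ~ {logical_error_rate(p, d):.2e}")
```

With p = 0.01, each step from d = 3 to 5 to 7 cuts the logical error rate by a factor of roughly 30, so errors fall exponentially as copies are added. Rerun it with p = 0.6 (above threshold) and the logical error rate climbs instead, because each added copy introduces more errors than it fixes.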

The new 105-qubit Willow chip, the successor to Sycamore, also achieved a breathtaking benchmarking result, solving a problem in five minutes that would have taken a supercomputer 10 septillion years to crack. That's roughly a quadrillion times the age of the universe.
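
The comparison with the age of the universe is easy to check. This sketch assumes the universe is about 13.8 billion years old and takes one septillion as 10^24; the ratio comes out at about 7 × 10^14, which is close to (slightly under) a quadrillion.

```python
# Rough check of the Willow benchmark comparison.
# Assumption: the universe is about 13.8 billion years old.
AGE_OF_UNIVERSE_YEARS = 13.8e9
CLASSICAL_ESTIMATE_YEARS = 10e24  # 10 septillion years (1 septillion = 10**24)
WILLOW_TIME_MINUTES = 5

MINUTES_PER_YEAR = 365.25 * 24 * 60

# How many universe lifetimes the classical estimate represents.
ratio_to_universe_age = CLASSICAL_ESTIMATE_YEARS / AGE_OF_UNIVERSE_YEARS

# How many times faster five minutes is than the classical estimate.
speedup = CLASSICAL_ESTIMATE_YEARS * MINUTES_PER_YEAR / WILLOW_TIME_MINUTES

print(f"{ratio_to_universe_age:.2e} times the age of the universe")
print(f"{speedup:.2e} times faster than the supercomputer estimate")
```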

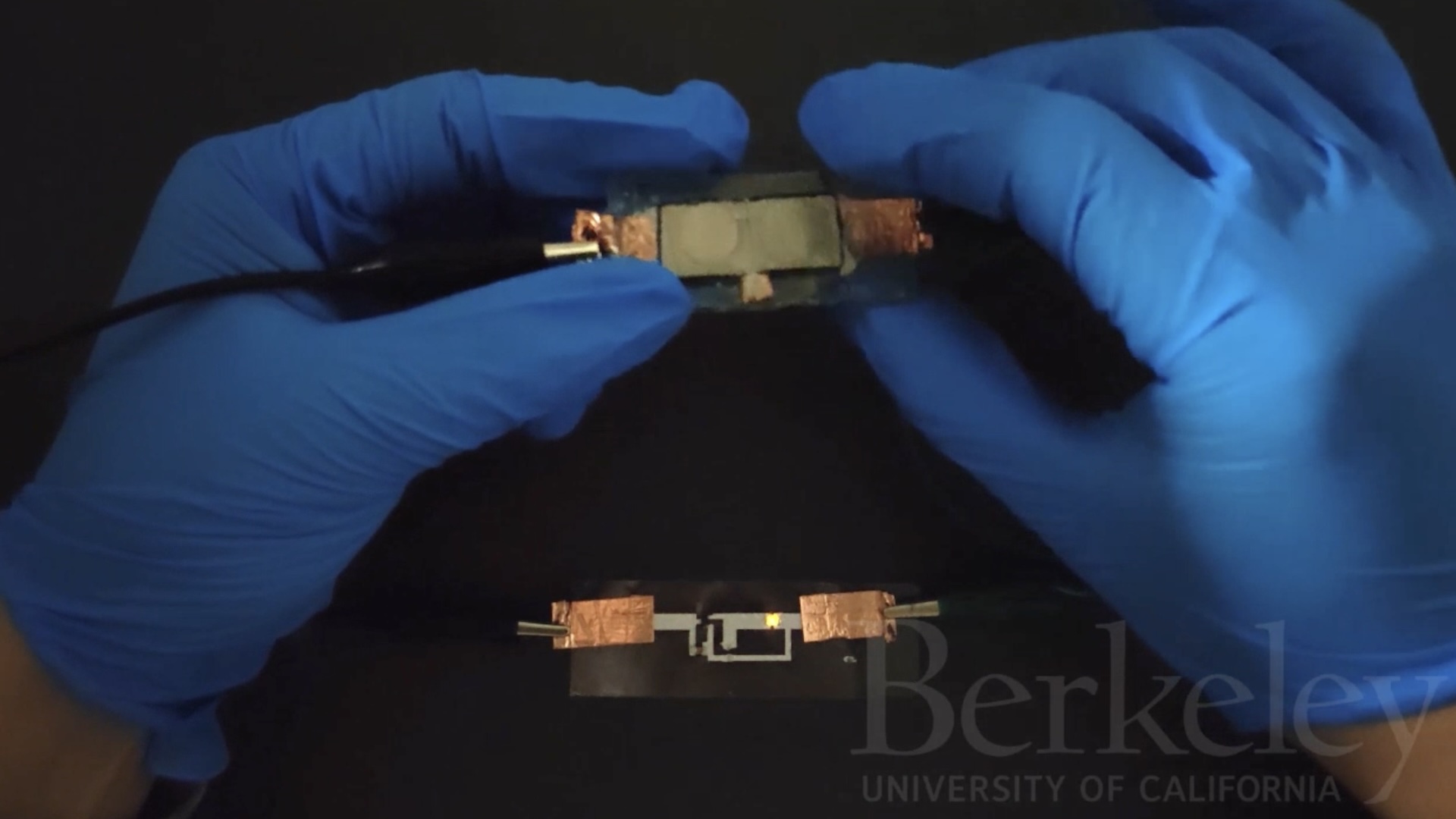

"Universal memory" is inching closer to reality: this is what it means for the devices we use

While this year brought several innovative computer components, including a new type of data storage that can withstand extreme heat and a DNA-infused computer chip, some of the biggest advances came in the development of "universal memory." This type of component could dramatically increase computing speed and reduce energy consumption.

Most computers use two types of memory at once: short-term memory, like random access memory (RAM), and long-term storage, like solid-state drives (SSDs) or flash memory. RAM is incredibly fast but needs a constant power supply; all data held in RAM is lost as soon as a computer is turned off. SSDs, by contrast, are relatively slow but can retain information without power.

Universal memory is a third type of memory that combines the best of the first two kinds — and, in 2024, scientists inched closer to realizing this technology.

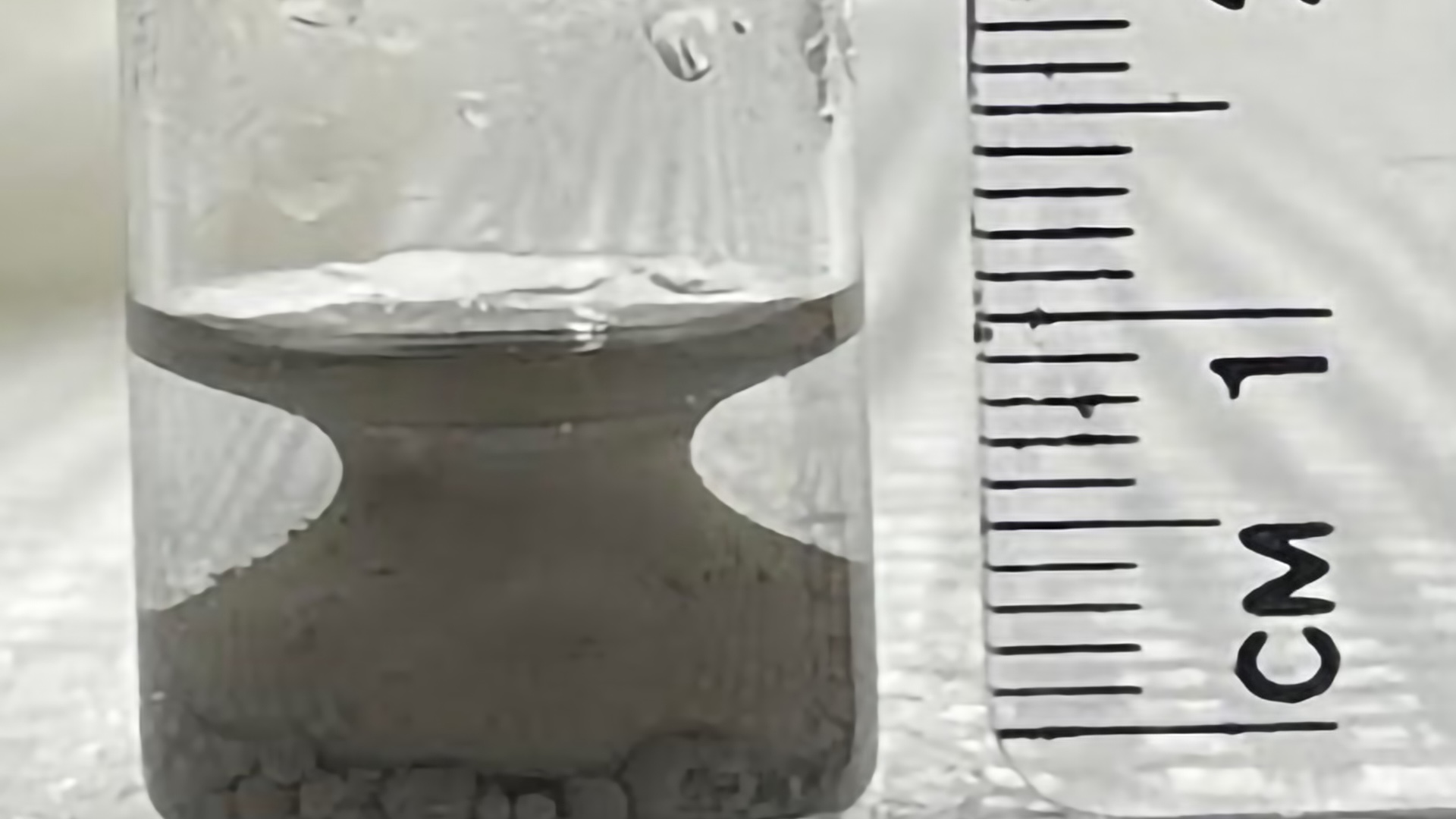

At the start of the year, scientists showed that a new material dubbed "GST467" was a viable candidate for phase-change memory, a type of memory that encodes the 1s and 0s of computing data by switching a glass-like material between high- and low-resistance states. When the material crystallizes into its low-resistance state, it represents a 1 and releases a large amount of energy. When it melts, it represents a 0 and absorbs the same amount of energy. In testing, this material proved faster and more efficient than other candidates for universal memory, such as ULTRARAM, the current leading candidate.
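
A phase-change memory cell can be pictured as a tiny state machine: writing changes the material's phase, and reading measures its resistance. The sketch below is a toy model. The resistance values and read threshold are hypothetical placeholders, not GST467 measurements; the only property it relies on is a large resistance contrast between the two phases.

```python
# Toy model of a single phase-change memory cell.
# The resistance values and threshold are hypothetical placeholders.
from enum import Enum


class Phase(Enum):
    CRYSTALLINE = "crystalline"  # ordered structure, low resistance, reads as 1
    AMORPHOUS = "amorphous"      # glassy, disordered structure, high resistance, reads as 0


# Hypothetical resistances in ohms, chosen only to show a large contrast.
RESISTANCE_OHMS = {Phase.CRYSTALLINE: 1e3, Phase.AMORPHOUS: 1e6}
READ_THRESHOLD_OHMS = 1e4


class PhaseChangeCell:
    def __init__(self) -> None:
        self.phase = Phase.AMORPHOUS

    def write(self, bit: int) -> None:
        # Writing 1 heats the material enough to crystallize it;
        # writing 0 melts it and cools it quickly into a glassy state.
        self.phase = Phase.CRYSTALLINE if bit else Phase.AMORPHOUS

    def read(self) -> int:
        # Reading compares resistance to a threshold without changing the phase.
        # The phase persists without power, which makes the memory non-volatile.
        return 1 if RESISTANCE_OHMS[self.phase] < READ_THRESHOLD_OHMS else 0


if __name__ == "__main__":
    cell = PhaseChangeCell()
    cell.write(1)
    print(cell.read())  # 1
    cell.write(0)
    print(cell.read())  # 0
```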

Other candidates are also promising, and bizarre. In April, for example, scientists proposed that a weird magnetic quasiparticle known as a "skyrmion" could one day be used in universal memory instead of electrons. In the new study, they sped up skyrmions from their usual speed of 100 meters per second (about 224 mph, or 360 km/h), which is too slow for computing memory, to 2,000 mph (3,200 km/h).
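
The skyrmion speeds mix three units, so here is the conversion arithmetic. It uses the exact definitions 1 km/h = 1/3.6 m/s and 1 mile = 1,609.344 m (so 1 mph = 0.44704 m/s). By this arithmetic, 2,000 mph is roughly 890 m/s, nearly nine times the starting speed.

```python
# Unit conversions for the skyrmion speeds.
# 1 mile = 1,609.344 m exactly, so 1 mph = 0.44704 m/s.
MPS_PER_MPH = 0.44704


def mps_to_kmh(v: float) -> float:
    """Meters per second to kilometers per hour."""
    return v * 3.6


def mps_to_mph(v: float) -> float:
    """Meters per second to miles per hour."""
    return v / MPS_PER_MPH


def mph_to_mps(v: float) -> float:
    """Miles per hour to meters per second."""
    return v * MPS_PER_MPH


if __name__ == "__main__":
    print(f"100 m/s = {mps_to_mph(100):.0f} mph = {mps_to_kmh(100):.0f} km/h")
    fast = mph_to_mps(2000)
    print(f"2,000 mph = {fast:.0f} m/s = {mps_to_kmh(fast):.0f} km/h")
```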

Then, toward the end of the year, scientists accidentally discovered another material that could be used for phase-change memory. This one lowered the energy requirements for data storage by up to a billion times. The chance find shows that, in the world of science and technology, you may never know how close you are to a major breakthrough.

Keumars is the technology editor at Live Science. He has written for a variety of publications including ITPro, The Week Digital, ComputerActive, The Independent, The Observer, Metro and TechRadar Pro. He has worked as a technology journalist for more than five years, having previously held the role of features editor with ITPro. He is an NCTJ-qualified journalist and has a degree in biomedical sciences from Queen Mary, University of London. He's also registered as a foundational chartered manager with the Chartered Management Institute (CMI), having qualified as a Level 3 Team leader with distinction in 2023.