This video of a robot making coffee could signal a huge step in the future of AI robotics. Why?

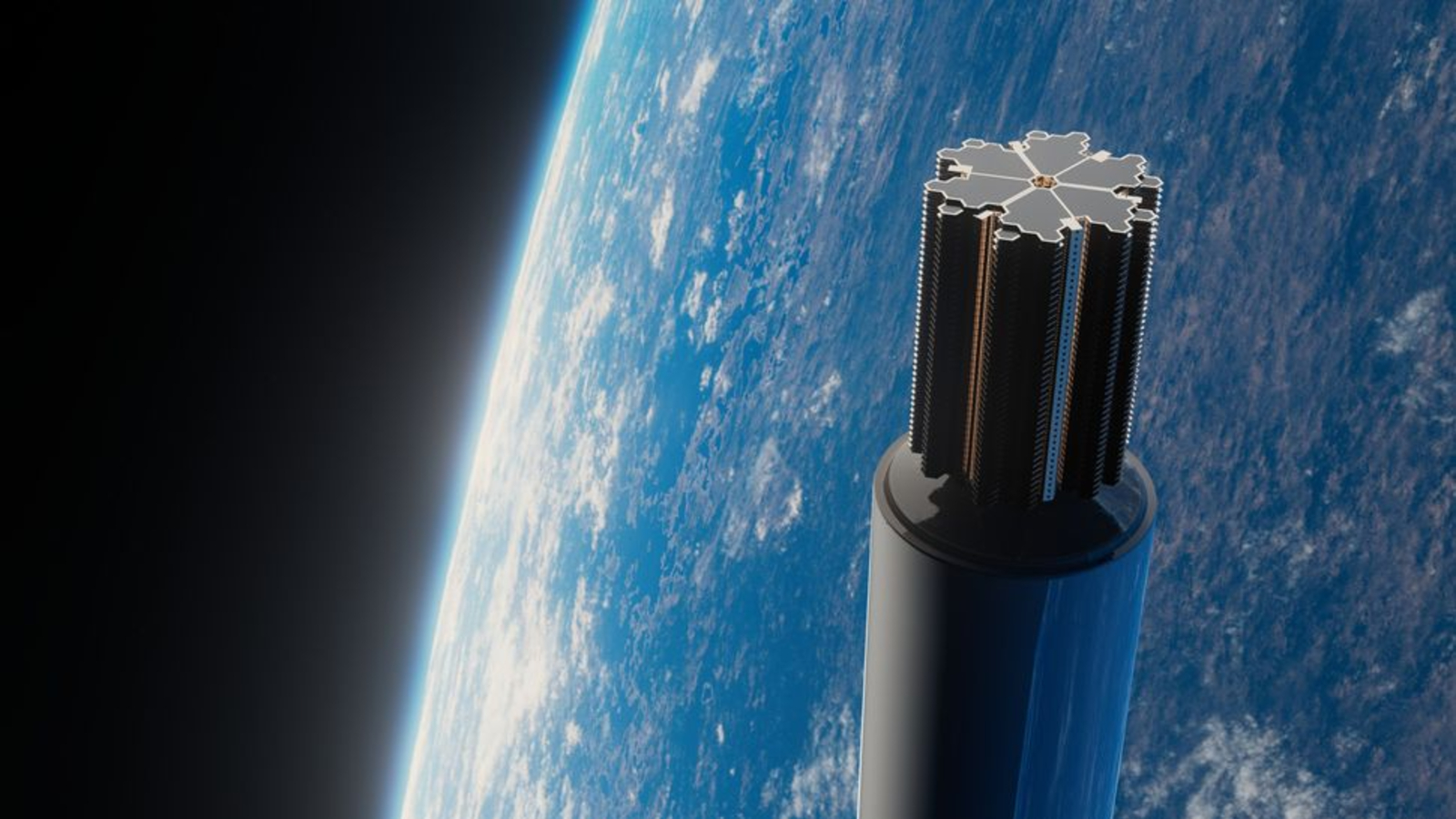

Most robots are preprogrammed to perform specific functions, but Figure's humanoid Figure 01 machine, which learns by watching and corrects its own mistakes, might upend the field.

A robotics company has released a video purporting to show a humanoid robot making a cup of coffee after watching humans do it, and correcting its own mistakes in real time. In the promotional footage, Figure.ai's flagship model, dubbed "Figure 01", picks up a coffee capsule, inserts it into a coffee machine, closes the lid and turns the machine on.

It's unclear which systems are operating under the hood, but Figure has signed a commercial agreement with BMW to provide its humanoid robots for automotive production, which it announced in a Jan. 18 press release.

Experts also told Live Science what is likely going on under the hood, assuming the footage shows exactly what the company claims.

Currently, artificial intelligence (AI)-powered robotics is domain-specific, meaning these machines do one thing well rather than doing everything adequately. They start with a programmed baseline of rules and a dataset used to self-teach. However, Figure.ai claims that Figure 01 learned by watching only 10 hours of footage.

For a robot to make coffee or mow the lawn under the conventional approach, engineers would have to embed expertise across multiple domains, which is too unwieldy to program by hand. Every possible contingency would have to be foreseen and a rule for it coded into the software, for example, specific instructions on what to do when the robot gets to the end of the lawn. Acquiring expertise across many domains just by watching would therefore represent a major leap.

A new type of robotics

The first piece of the puzzle is that Figure 01 needs to see what it's supposed to be repeating. "Processing information visually lets it recognize important steps and details in the process," Max Maybury, AI entrepreneur and co-owner of AI Product Reviews, told Live Science.

The robot would need to take video data and develop an internal predictive model of the physical actions and the order of these actions, Christoph Cemper, CEO of AIPRM, a site that designs prompts to be entered into AI systems like ChatGPT, told Live Science. It would need to translate what it sees into an understanding of how to move its limbs and grippers to perform the same movements, he added.

Then there is the architecture of neural networks, a type of machine learning model inspired by how the brain works, said Clare Walsh, a data analytics and AI expert at the Institute of Analytics in the U.K. Large numbers of interconnected nodes pass signals to one another. If the desired result is achieved when signals lead to an action (like extending an arm or closing a gripper), feedback strengthens the neural connections that achieved it, further embedding it in ‘known’ processes.
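To make the idea of feedback adjusting connections concrete, here is a minimal sketch of a single artificial neuron trained with the classic perceptron rule. It is a toy illustration only, not a description of whatever Figure 01 actually runs; the function names, the two-input "fire only when both inputs are on" task and the learning rate are all assumptions chosen for the example.

```python
def train_neuron(samples, epochs=20, lr=1.0):
    """Train one artificial neuron with the perceptron rule (toy example)."""
    weights = [0.0, 0.0]  # strength of each incoming connection
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            total = bias + sum(w * x for w, x in zip(weights, inputs))
            output = 1 if total > 0 else 0
            # Feedback: error is 0 when the output was right, so nothing changes.
            # Otherwise, connections from active inputs are pushed toward the target.
            error = target - output
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, inputs):
    """Return 1 if the neuron fires for these inputs, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


# Hypothetical task: fire only when both input signals are on.
SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    weights, bias = train_neuron(SAMPLES)
    for inputs, target in SAMPLES:
        print(inputs, "->", predict(weights, bias, inputs), "(wanted", target, ")")
```

Real systems stack many thousands or millions of such units and use gradient-based training rather than this simple rule, but the principle of adjusting connection strengths in response to feedback is the same.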

"Before about 2016, object recognition like distinguishing between cats and dogs in photos would get success rates of around 50%," Walsh told Live Science. "Once neural networks were refined and working, results jumped to 80 to 90% almost overnight — training by observation with a reliable learning method scaled incredibly well."

To Walsh, there's a similarity between Figure 01 and autonomous vehicles, made possible using probability-based rather than rules-based training methods. She noted that self-teaching training can build data fast enough to work in complex environments.

Why self-correction is a major milestone

Despite how easy it is for most humans to make coffee, the motor function, precision manipulation and order-of-events knowledge involved are incredibly complex for a machine to learn and perform. That makes the ability to self-correct errors paramount, especially if Figure 01 goes from making coffee to lifting heavy objects near humans or performing lifesaving rescue work.

"The robot's visual acuity goes beyond seeing what's happening in the coffee-making process," Maybury said. "It doesn't just observe it, it analyzes the process to ensure everything is as accurate as possible."

That means the robot knows not to overfill the cup and how to insert the pod correctly. If it sees any deviation from the learned behavior or expected results, it interprets this as a mistake and fine-tunes its actions until it reaches the desired result. It does this through reinforcement learning, in which awareness of the desired goal is developed through the trial and error of navigating an uncertain environment.
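The trial-and-error loop behind reinforcement learning can be sketched with tabular Q-learning, a standard textbook algorithm. This is a hedged illustration under stated assumptions, not Figure's method: the one-dimensional "corridor" task, the function names and every parameter value here are hypothetical.

```python
import random


def train_q_table(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                  epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy corridor: start at state 0, goal at the far end."""
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 steps left, action 1 steps right
    q = [[0.0, 0.0] for _ in range(n_states)]  # value estimate per state and action
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Trial and error: explore at random sometimes, else use the best guess.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = min(max(state + moves[action], 0), goal)
            reward = 1.0 if next_state == goal else 0.0
            # Move the estimate toward the reward plus discounted future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Best-known action for every non-goal state."""
    return ["left" if left > right else "right" for left, right in q[:-1]]


if __name__ == "__main__":
    print(greedy_policy(train_q_table()))
```

The agent is never told which way to go. It only receives a reward on reaching the goal, and its value estimates gradually come to favor the actions that lead there. A physical robot faces far larger state and action spaces, so practical systems replace the table with a neural network.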

Walsh added that the right training data means the robot's human-like movements could "scale and diversify" quickly. "The number of movements is impressive and the precision and self-correcting capabilities mean it could herald future developments in the field," she said.

But Mona Kirstein, an AI expert with a doctorate in natural language processing, cautioned that Figure 01 looks like a great first step rather than a market-ready product.

"To achieve human-level flexibility with new contexts beyond this narrowly defined task, bottlenecks like variations in the environment must still be addressed," Kirstein told Live Science. "So while it combines excellent engineering with the state-of-the-art deep learning, it likely overstates progress to view this as enabling generally intelligent humanoid robotics."

Drew is a freelance science and technology journalist with 20 years of experience. After growing up knowing he wanted to change the world, he realized it was easier to write about other people changing it instead. As an expert in science and technology for decades, he’s written everything from reviews of the latest smartphones to deep dives into data centers, cloud computing, security, AI, mixed reality and everything in between.