Smart glasses could boost privacy by swapping cameras for this 100-year-old technology

Researchers have built a tool called PoseSonic that can accurately track a glasses wearer's upper body movements.

Smart glasses of the future could swap out optical cameras for sonar, which uses sound to track the wearer's movements, according to a new study. The sonar-based tech could improve accuracy and privacy, and make the glasses cheaper to produce.

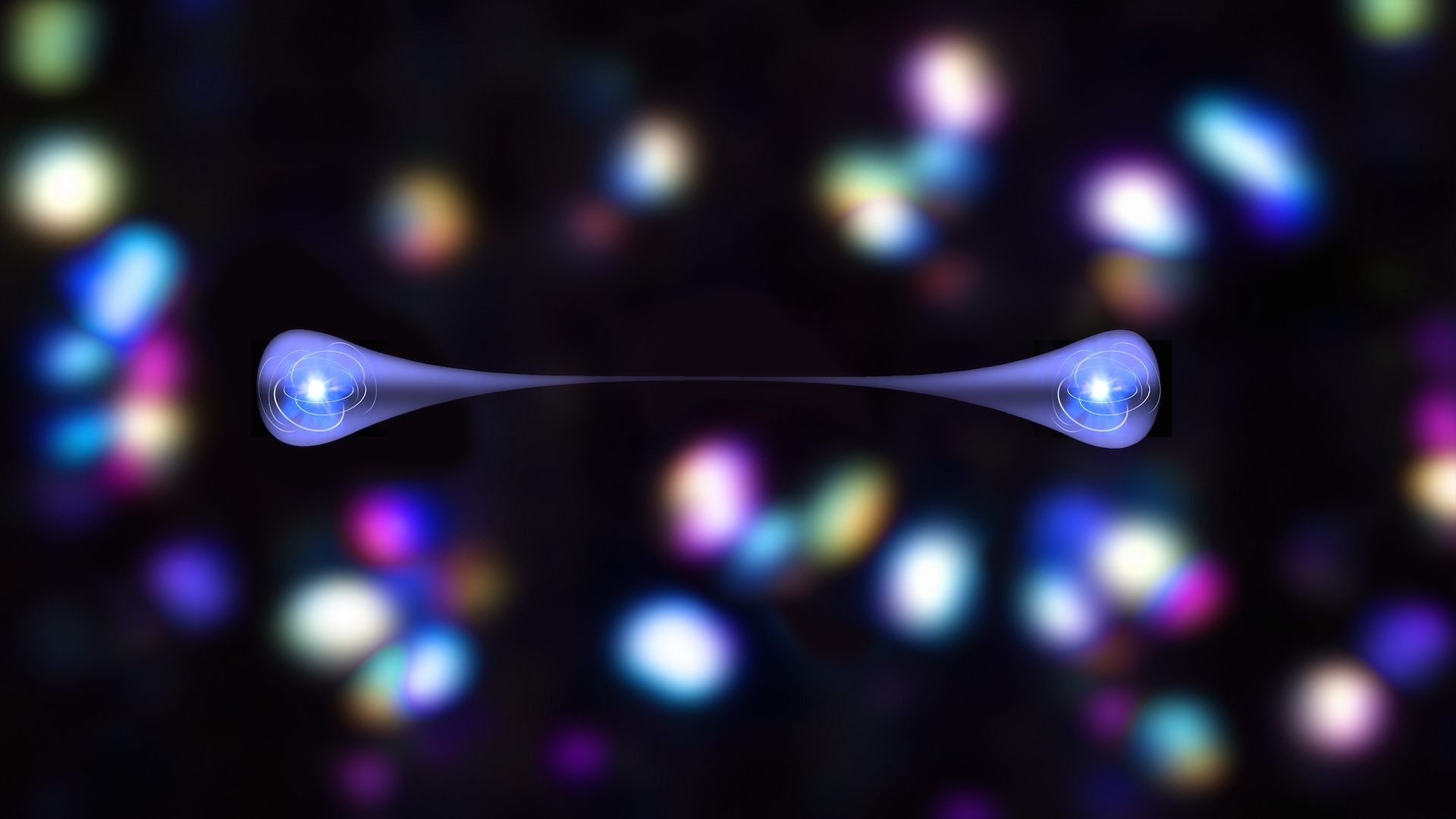

Scientists at Cornell University have created a technology, dubbed "PoseSonic," that combines micro sonar powered by CHIRP technology (a miniaturized version of the same technology used to map oceans or track submarines) with artificial intelligence (AI) to build an accurate echo profile image of the wearer. The micro sonar emits and captures sound waves that are inaudible to humans. The tech's creators published their research Sept. 27 in the ACM Digital Library.

"We think our technology offers tremendous potential as the future sensing solution for wearables, especially when wearables are used in everyday settings," said study co-author Cheng Zhang, an assistant professor at Cornell, and director of its Smart Computer Interfaces for Future Interactions (SciFi) Lab. "It has unique advantages over the current camera-based sensing solutions," Zhang told Live Science in an email.

Augmented reality (AR) smart glasses currently on the market, such as Ray-Ban Stories by Meta, use cameras to track the wearer, alongside wireless technologies like Bluetooth, Wi-Fi and GPS. But continuous video drains the battery quickly and may pose a privacy risk. Acoustic-based tracking, however, is cheaper, more efficient, unobtrusive and privacy-conscious, Zhang said.

PoseSonic uses microphones and speakers fitted alongside a microprocessor, Bluetooth module, battery and sensors. Researchers created a working prototype for less than $40, and this cost could likely be reduced further if the device were manufactured at scale. The ill-fated Google Glass, by contrast, cost $152 to make, according to IEEE, although that figure is ten years old.

PoseSonic's speakers bounce sound waves that are inaudible to humans off the body and back to the microphones, which helps the microprocessor generate a profile image. This is fed into an AI model that estimates the 3D positions of nine body joints: the shoulders, elbows, wrists, hips and the nose. The algorithm is trained using video frames for reference, which means that, unlike other similar wearable systems, it can work on any user without first being trained on them specifically.
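
For readers curious about how a chirp-based echo profile works in principle, here is a minimal Python sketch. It is not the researchers' code, and every number in it is an assumption made for illustration: the 48 kHz sample rate, the 18 to 21 kHz sweep, the single noise-free reflection and all function names are hypothetical. It transmits a short frequency sweep, simulates one weakened echo, and cross-correlates the two to find how far away the reflecting surface is.

```python
import math

SAMPLE_RATE = 48_000    # Hz; assumed audio sample rate, not from the study
SPEED_OF_SOUND = 343.0  # m/s in air at roughly room temperature

def make_chirp(f_start, f_end, n_samples, sample_rate=SAMPLE_RATE):
    """Linear frequency sweep from f_start to f_end (Hz)."""
    duration = n_samples / sample_rate
    sweep_rate = (f_end - f_start) / duration  # Hz per second
    return [
        math.sin(2 * math.pi * (f_start * t + 0.5 * sweep_rate * t * t))
        for t in (i / sample_rate for i in range(n_samples))
    ]

def simulate_echo(chirp, delay_samples, attenuation, total_len):
    """Received signal: one delayed, weakened copy of the chirp (no noise)."""
    received = [0.0] * total_len
    for i, sample in enumerate(chirp):
        received[delay_samples + i] += attenuation * sample
    return received

def echo_profile(chirp, received):
    """Cross-correlate the received signal with the transmitted chirp."""
    n = len(chirp)
    return [
        sum(chirp[i] * received[lag + i] for i in range(n))
        for lag in range(len(received) - n + 1)
    ]

def lag_to_distance(lag, sample_rate=SAMPLE_RATE):
    """Convert a round-trip delay in samples to a one-way distance in metres."""
    return lag / sample_rate * SPEED_OF_SOUND / 2

# Hypothetical scenario: a surface whose echo arrives 84 samples late.
chirp = make_chirp(18_000, 21_000, 256)
received = simulate_echo(chirp, delay_samples=84, attenuation=0.3, total_len=512)
profile = echo_profile(chirp, received)

# The strongest correlation marks the delay of the echo.
best_lag = max(range(len(profile)), key=lambda k: abs(profile[k]))
print(best_lag, lag_to_distance(best_lag))
```

In a real device there would be many overlapping reflections from different body parts plus background noise, so the profile would have many peaks rather than one. According to the study as described here, it is that richer pattern that the AI model turns into joint positions.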

Because the audio equipment uses less power than cameras, PoseSonic can run on smart glasses for more than 20 hours continuously, Zhang said, and a future version of the technology could be integrated into an AR-enabled wearable device without being uncomfortable or too bulky.

Sonar is also better for privacy than using cameras, according to the researchers, as the algorithm processes only the sound waves it produces itself to build the 3D image, instead of using other sounds or capturing images. This data can be processed locally on the wearer's smartphone, rather than sent to a public cloud server, which cuts the chances of the data being intercepted.

Zhang suggested two scenarios where acoustic tracking in smart glasses could be of practical use: recognizing upper body movements in day-to-day life, such as eating, drinking or smoking, and tracking the wearer's movements while exercising. Future generations of this technology could help users monitor their behavior and allow for more detailed feedback beyond just the number of steps or the calories consumed, extending to an assessment of how the body moves during physical activity.

Keumars is the technology editor at Live Science. He has written for a variety of publications including ITPro, The Week Digital, ComputerActive, The Independent, The Observer, Metro and TechRadar Pro. He has worked as a technology journalist for more than five years, having previously held the role of features editor with ITPro. He is an NCTJ-qualified journalist and has a degree in biomedical sciences from Queen Mary, University of London. He's also registered as a foundational chartered manager with the Chartered Management Institute (CMI), having qualified as a Level 3 Team leader with distinction in 2023.