New app performs motion capture using just your smartphone — no suits, specialized cameras or equipment needed

Motion capture requires special equipment and infrastructure that can cost upward of $100,000 — but scientists have created a smartphone app and combined this with an AI algorithm to do the same job.

New research suggests a smartphone app can replace all the different systems and technologies currently needed to perform motion capture, a process that translates body movements into computer-generated images.

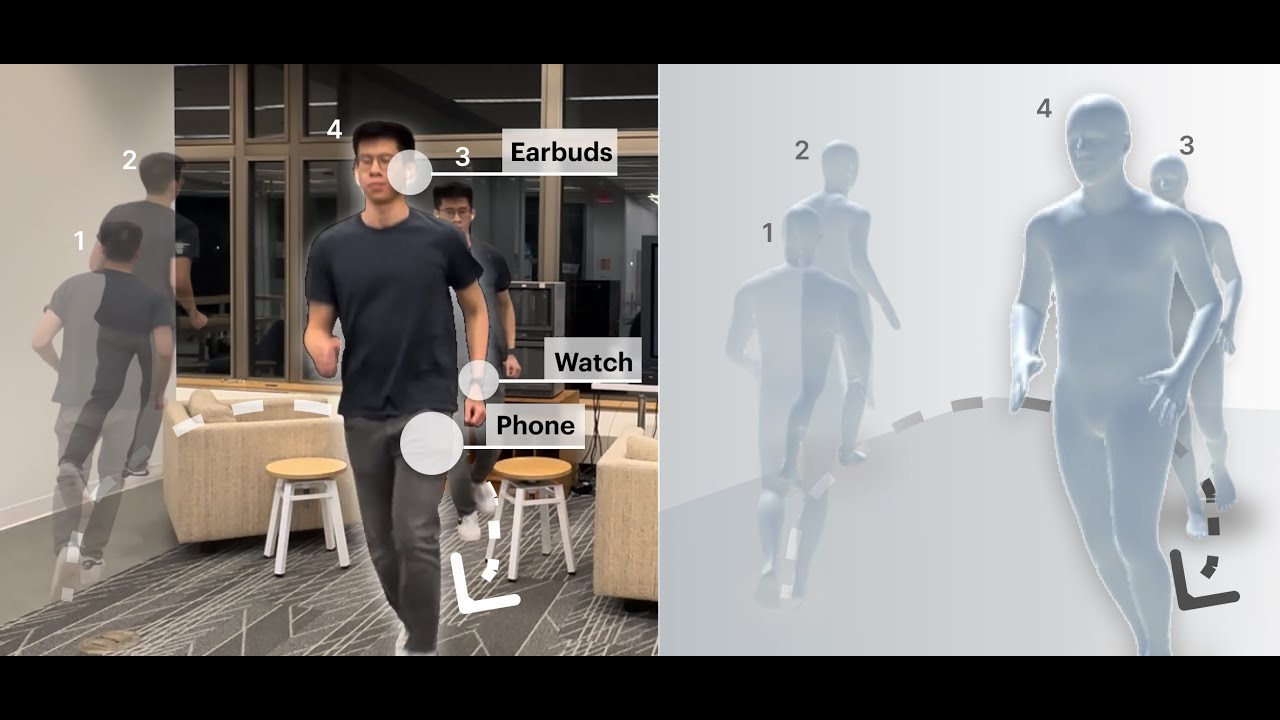

The app, dubbed "MobilePoser," uses the data obtained from sensors already embedded in various consumer devices — including smartphones, earbuds and smartwatches — and combines this information with artificial intelligence (AI) to track a person's full body pose and position in space.

Motion capture is often used in the film and video gaming industries to capture actors' movements and translate them into computer-generated characters that appear on-screen. Arguably the most famous example of this process is Andy Serkis' performance as Gollum in the "Lord of the Rings" trilogy. But motion capture normally requires specialized rooms, expensive equipment, bulky cameras and an array of sensors, including "mocap suits."

Setups of this kind can cost upward of $100,000 to run, the scientists said. Alternatives like the discontinued Microsoft Kinect, which relied on stationary cameras to view body movements, are cheaper but not practical on the go because the action must occur within the camera's field of view.

Instead, a single smartphone app can replace these technologies, the scientists said in a new study presented Oct. 15 at the 2024 ACM Symposium on User Interface Software and Technology.


MobilePoser achieves high accuracy using machine learning and advanced physics-based optimization, study author Karan Ahuja, a professor of computer science at Northwestern University, said in a statement. This will open the door to new immersive experiences in gaming, fitness and indoor navigation without specialized equipment, he added.


The team relied on inertial measurement units (IMUs). These units, which are already embedded in smartphones, combine several sensors, including accelerometers, gyroscopes and magnetometers, to measure the body's position, orientation and motion.
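
As a rough illustration of how accelerometer samples relate to position, the sketch below double-integrates acceleration readings over time. It is not taken from the study: the function name, the 100 Hz sampling rate and the 0.05 m/s² sensor bias are all assumptions chosen for the example. It shows how even a small constant error in a consumer-grade sensor can grow into a large position error within seconds.

```python
def integrate_position(accels, dt):
    """Double-integrate 1D acceleration samples (m/s^2) into a position (m).

    Uses simple Euler steps: acceleration updates velocity, velocity updates position.
    """
    velocity = 0.0
    position = 0.0
    for a in accels:
        velocity += a * dt
        position += velocity * dt
    return position


# Hypothetical scenario: a phone lying still, but its accelerometer
# reports a small constant bias of 0.05 m/s^2, sampled at 100 Hz for 10 s.
bias = 0.05          # assumed sensor bias, m/s^2
dt = 0.01            # assumed sample interval, s
drift_after_10s = integrate_position([bias] * 1000, dt)
print(f"Apparent movement of a stationary phone: {drift_after_10s:.2f} m")
```

Under these assumed numbers, the stationary phone appears to have moved roughly 2.5 meters after 10 seconds, which is why raw integration alone is not enough for motion capture.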

However, the fidelity of the sensors is ordinarily too low for accurate motion capture, so the researchers augmented them with a multistage machine learning algorithm. They trained the AI with a publicly available dataset of synthesized IMU measurements that were generated from high-quality motion-capture data. The result was a tracking error of just 3 to 4 inches (8 to 10 centimeters). The physics-based optimizer refines the predicted movements to make sure they match real-life body movements and the body doesn't perform impossible feats — like joints bending backward or the user's head rotating 360 degrees.
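
Two of the ideas above can be sketched in a few lines of code. The first function shows one common way to synthesize accelerometer-like values from motion-capture positions, using finite differences; it ignores gravity and sensor orientation, which a real pipeline would have to handle. The second is a simple joint-angle clamp, used here as a stand-in for the far more sophisticated physics-based optimizer the researchers describe. All names and joint limits are hypothetical and are not drawn from the MobilePoser code.

```python
def synthesize_acceleration(positions, dt):
    """Approximate acceleration from evenly sampled 3D positions.

    Applies the central second difference (next - 2*current + previous) / dt^2
    to each interior sample, so the result has len(positions) - 2 entries.
    """
    if len(positions) < 3:
        raise ValueError("need at least three position samples")
    accels = []
    for prev, cur, nxt in zip(positions, positions[1:], positions[2:]):
        accels.append(
            tuple((n - 2.0 * c + p) / (dt * dt) for p, c, n in zip(prev, cur, nxt))
        )
    return accels


# Hypothetical joint limits in degrees (illustrative values only).
JOINT_LIMITS_DEG = {"knee": (0.0, 150.0), "elbow": (0.0, 145.0)}


def clamp_pose(pose):
    """Clamp each predicted joint angle into its allowed range."""
    clamped = {}
    for joint, angle in pose.items():
        low, high = JOINT_LIMITS_DEG[joint]
        clamped[joint] = min(max(angle, low), high)
    return clamped


# A knee predicted to bend 20 degrees backward is pulled back to 0 degrees.
print(clamp_pose({"knee": -20.0, "elbow": 90.0}))
```

A hard clamp like this only prevents the most obvious impossible poses; it does not model forces, balance or contact with the ground the way a physics-based optimizer can.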

"The accuracy is better when a person is wearing more than one device, such as a smartwatch on their wrist plus a smartphone in their pocket," Ahuja said. "But a key part of the system is that it's adaptive. Even if you don't have your watch one day and only have your phone, it can adapt to figure out your full-body pose."
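
One common way to let a model cope with a changing set of devices is to give every possible device a fixed slot in the input and fill missing slots with zeros, along with a mask that tells the model which slots are real. The sketch below illustrates that general idea only; whether MobilePoser handles missing devices this way is an assumption, and the slot names and feature count are hypothetical.

```python
# Hypothetical device slots and per-device feature count (for example,
# three acceleration axes). These are not taken from the MobilePoser code.
DEVICE_SLOTS = ("phone", "watch", "earbuds")
FEATURES_PER_DEVICE = 3


def build_model_input(readings):
    """Build a fixed-length input vector and a presence mask.

    `readings` maps a device name to its feature tuple. Devices that are
    absent contribute zeros to the vector and a 0 to the mask.
    """
    vector = []
    mask = []
    for slot in DEVICE_SLOTS:
        sample = readings.get(slot)
        if sample is None:
            vector.extend([0.0] * FEATURES_PER_DEVICE)
            mask.append(0)
        else:
            if len(sample) != FEATURES_PER_DEVICE:
                raise ValueError(f"{slot} needs {FEATURES_PER_DEVICE} features")
            vector.extend(float(v) for v in sample)
            mask.append(1)
    return vector, mask


# Phone only: the watch and earbuds slots are zero-filled and masked out.
vector, mask = build_model_input({"phone": (0.1, 9.8, 0.0)})
print(vector, mask)
```

Because the input always has the same length, the same model can be run whether the user carries one device or several.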

This technology could have applications in entertainment — for example, more immersive gaming — as well as in health and fitness, the scientists said. The team has released the AI models and associated data at the heart of the app so that other researchers can build on the work.

Keumars is the technology editor at Live Science. He has written for a variety of publications including ITPro, The Week Digital, ComputerActive, The Independent, The Observer, Metro and TechRadar Pro. He has worked as a technology journalist for more than five years, having previously held the role of features editor with ITPro. He is an NCTJ-qualified journalist and has a degree in biomedical sciences from Queen Mary, University of London. He's also registered as a foundational chartered manager with the Chartered Management Institute (CMI), having qualified as a Level 3 Team leader with distinction in 2023.